Pre-read for exercise 1.2: Problem 1.2: Solution for problem 1.2: Pg 1: Pg 2: Pg 3: Pg 4:

Pages

- Index of Lessons in Technology

- Index of Book Summaries

- Index of Book Lists And Downloads

- Index For Job Interviews Preparation

- Index of "Algorithms: Design and Analysis"

- Python Course (Index)

- Data Analytics Course (Index)

- Index of Machine Learning

- Postings Index

- Index of BITS WILP Exam Papers and Content

- Lessons in Investing

- Index of Math Lessons

- Index of Management Lessons

- Book Requests

- Index of English Lessons

- Index of Medicines

- Index of Quizzes (Educational)

Sunday, January 7, 2024

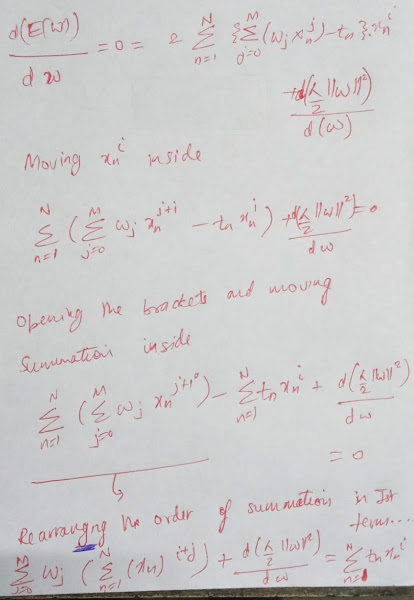

Exercise 1.2 On Regularization in Linear Regression - Pattern Recognition and ML - By Christopher Bishop

Exercise 1.3 on 'sum rule and product rule of probability' (From the book Pattern Recognition and ML by Christopher Bishop)

Let's quiz you for the basic concepts of probability theory by considering a simple example.

Imagine we have two boxes, one red and one blue, and in the red box we have 2 apples and 6 oranges, and in the blue box we have 3 apples and 1 orange.

This is illustrated in Figure 1.9. Now suppose we randomly pick one of the boxes and from that box we randomly select an item of fruit, and having observed which sort of fruit it is we replace it in the box from which it came. We could imagine repeating this process many times.

Let us suppose that in so doing we pick the red box 40% of the time and we pick the blue box 60% of the time, and that when we remove an item of fruit from a box we are equally likely to select any of the pieces of fruit in the box.

In this example, the identity of the box that will be chosen is a random variable, which we shall denote by B. This random variable can take one of two possible values, namely r (corresponding to the red box) or b (corresponding to the blue box). Similarly, the identity of the fruit is also a random variable and will be denoted by F . It can take either of the values a (for apple) or o (for orange).

To begin with, we shall define the probability of an event to be the fraction of times that event occurs out of the total number of trials, in the limit that the total number of trials goes to infinity. Thus the probability of selecting the red box is 4/10 and the probability of selecting the blue box is 6/10. We write these probabilities as:

p(B = r) = 4/10 and p(B = b) = 6/10.

Note that, by definition, probabilities must lie in the interval [0, 1]. Also, if the events are mutually exclusive and if they include all possible outcomes (for instance, in this example the box must be either red or blue), then we see that the probabilities for those events must sum to one.

We can now ask questions such as:

“what is the overall probability that the selection procedure will pick an apple?”,

or

“given that we have chosen an orange, what's the probability that the box we chose was the blue one?”

We can answer questions such as these, and indeed much more complex questions associated with

problems in pattern recognition, once we have equipped ourselves with the two elementary rules of probability, known as the sum rule and the product rule.

Sum Rule and Product Rule

Here p(X, Y ) is a joint probability and is verbalized as “the probability of X and Y ”. Similarly, the quantity p(Y |X) is a conditional probability and is verbalized as “the probability of Y given X”, whereas the quantity p(X) is a marginal probability and is simply “the probability of X”.

Let us now return to our example involving boxes of fruit. For the moment, we

shall once again be explicit about distinguishing between the random variables and

their instantiations. We have seen that the probabilities of selecting either the red or

the blue boxes are given by

p(B = r) = 4/10

p(B = b) = 6/10

respectively. Note that these satisfy p(B = r) + p(B = b) = 1.

Now suppose that we pick a box at random, and it turns out to be the blue box.

Then the probability of selecting an apple is just the fraction of apples in the blue

box which is 3/4, and so p(F = a|B = b) = 3/4. In fact, we can write out all four

conditional probabilities for the type of fruit, given the selected box

p(F = a|B = r) = 1/4

p(F = o|B = r) = 3/4

p(F = a|B = b) = 3/4

p(F = o|B = b) = 1/4

Practice Exercise

Suppose that we have three coloured boxes r (red), b (blue), and g (green).

Box r contains 3 apples, 4 oranges, and 3 limes, box b contains 1 apple, 1 orange, and 0 limes, and box g contains 3 apples, 3 oranges, and 4 limes. If a box is chosen at random with probabilities p(r) = 0.2, p(b) = 0.2, p(g) = 0.6, and a piece of fruit is removed from the box (with equal probability of selecting any of the items in the box), then what is the probability of selecting an apple?

If we observe that the selected fruit is in fact an orange, what is the probability that it came from the green box?

Solution

Ref: ChatGPT

Exercise 1.4 On Probability Densities - Pattern Recognition and ML - By Christopher Bishop

Pre-read

Fig:1 Fig:2 Fig:3 If we have several continuous variables x1,...,xD, denoted collectively by the vector x, then we can define a joint probability density p(x) = p(x1,...,xD) such Fig:4Question

Fig:5Solution

Exercise 1.6 On Covariance - Pattern Recognition and ML - By Christopher Bishop

Pre-read

Fig 1 Fig 21.6: Show that if two variables x and y are independent, then their covariance is zero.

Solution

Fig 3 Fig 4

Exercise 1.7 - Identifying normalization constant for Gaussian Distribution and Proving that it's integral equals 1 - From Pattern Recognition and ML - By Christopher Bishop

Pre-read

Fig 1: Fig 2:Question

Fig 3:Solution

Fig 4: Fig 5: Fig 6: Fig 7: Fig 8: Fig 9: Fig 10: Fig 11:Index of Machine Learning

THEORY

What is Machine Learning?

Logistic Regression

- Logistic Regression Equation

- Logistic Regression is a binary classification algorithm

- Practice problems on Logistic Regression for interview preparation

- Getting the Geometric Intuition Behind Logistic Regression

- Likelihood of a Single Observation For Logistic Regression Model

- Cost Function For Logistic Regression Model

Decision Tree

Deep Learning

Integration

- What happens when we reverse the limits of a definite integral?

- Can you give the example of an odd integrand and prove that it is odd?

- What is the differentiation and integration of f(x)=1 wrt dx

- Show step by step integration of exp(-x by a)

- Change in limits of integration when changing from cartesian to polar coordinates

- Gaussian Integral: Integrate exp(-x^2) from -infinite to +infinite

Vector Calculus

- y = w.x Where w and x are vectors. What is the derivative of y wrt w?

- x is a vector and y = euclidean_norm(x). What is the derivative of y wrt x?

- y = (norm(x))^2. What is the derivative of y wrt x?

- Can you show the derivative X-transpose.X wrt x?

- What is Jacobian of change of variables?

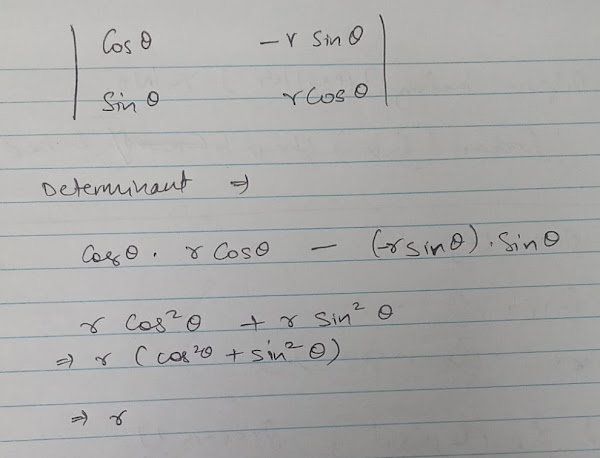

- Derive Jacobian when we are changing from 2D cartesian coordinates to polar coordinates.

- Derive generalized Gaussian Integral from the Special Case of Gaussian Integral for Mu = 0 and Sigma = 1-by-sqrt(2)

- Solving 2D Gaussian Integral w.r.t x and y and also w.r.t polar coordinates

Exercises from the book "Pattern Recognition and Machine Learning" by Christopher Bishop

- Exercise 1.1 On Linear Equations for Regression - From Pattern Recognition and ML - By Christopher Bishop

- Exercise 1.2 On Regularization in Linear Regression - Pattern Recognition and ML - By Christopher Bishop

- Exercise 1.3 on 'sum rule and product rule of probability' (From the book Pattern Recognition and ML by Christopher Bishop)

- Exercise 1.4 On Probability Densities - Pattern Recognition and ML - By Christopher Bishop

- Exercise 1.5 On Concept of Variance From Statistics - Pattern Recognition and ML - By Christopher Bishop

- Exercise 1.6 On Covariance - Pattern Recognition and ML - By Christopher Bishop

- Exercise 1.7 - Identifying normalization constant for Gaussian Distribution and Proving that it's integral equals 1 - From Pattern Recognition and ML - By Christopher Bishop

- Ex 1.8 - Show that the mean value of Gaussian distribution is µ. (Pattern Recognition and ML by Christopher Bishop)

- Ex 1.9 - Show that the mode of the multivariate Gaussian is given by µ. (Pattern Recognition and ML by Christopher Bishop)

- Ex 1.10 - On mean and variance of two variables (Pattern Recognition and ML by Christopher Bishop)

- Ex 1.12 - Pattern Recognition and ML by Christopher Bishop

- Ex 1.13 - Pattern Recognition and Machine Learning - By Christopher Bishop

- Ex 1.14 - Pattern Recognition and Machine Learning - By Christopher Bishop

Statistics

PRACTICALS

ML1: Category Encoding

- Installing 'Category Encoders' Python Package Using Pip And Conda

- Category Encoders Analysis (in Python)

- One Hot Encoding Using Pandas' get_dummies() Method on Titanic Dataset

- Do we need all the one hot features?

- One Hot Encoding from PySpark, Pandas, Category Encoders and skLearn

- Comparing StringIndexer (PySpark), LabelEncoder (skLearn), OrdinalEncoder (skLearn), OrdinalEncoder (category_encoders) [Tags: Machine Learning, Spark]

- Effect of PySpark's StringIndexer on clustering of data [Tags: Machine Learning, Spark]

- Two ways to get Frequency Based Order of Categorical Data (Python)

ML2: Data Preprocessing

- Binning (of a column or 1D Numerical Data)

- Feature Scaling in Machine Learning (when to use which among MinMaxScaler and StandardScaler)

- Similarity and Dissimilarity for Numerical (interval-scaled) variables, Asymmetric binary variables, Categorical variables, For text

- Correlation between continuous-numeric columns, and between categorical columns

- Importance of posing right question for machine learning, data analysis and data preprocessing [Tags: Machine Learning, Spark]

- Working with skLearn's MinMax scaler and defining our own

- Data Preprocessing Using Python package Pandas (Use case: Creating a Nifty50 SIP Simulator)

- Loading data from Pandas to PostgreSQL [Tags: Databases, Machine Learning]

- Exploring skLearn's CountVectorizer

- Using Snorkel to create test data and classifying using Scikit-Learn

- Pandas DataFrame Filtering Using eval()

- Three Types of Input Data Format For Apriori Algorithm (Association Analysis)

- Bagging in overcoming variance of a classifier, clustering algorithm or regressor

ML3: Data visualization

ML3.1: Misc

- Data Visualization's Basic Theory

- Box Plot and Anomaly Detection in 1D [Tags: Data Visualization, Anomaly Detection]

- Social Analysis (SOAN using Python 3) Report [Tags: Data Visualization, NLP]

- Plotting Correlation Matrix in Three Ways Using Pandas, Matplotlib and Seaborn

- Creating Heatmap from Pandas DataFrame correlation matrix

- Binomial Probability Distribution (visualization using Seaborn) [Tags: Machine Learning, NumPy]

- Google Analytics for Beginners (Assessments Dump, Oct 2020)

- survival8 Audience Around The World (Jun 2021)

-

Topic modeling using Latent Dirichlet Allocation from sklearn and visualization using pyLDAvis

[Tags: Data Visualization, Natural Language Processing]

ML3.2: Using PowerBI

- PowerBI's HTML Content Visualization [Tags: Data Visualization, PowerBI]

- Sorting an 'HTML Content' Visual in PowerBI [Tags: Data Visualization, PowerBI]

- Concatenate Two Tables using R in PowerBI [Tags: Data Visualization, PowerBI, R Language]

- Timeline View Using HTML Content Visual in PowerBI [Tags: Data Visualization, PowerBI, Python]

ML3.3: Line Chart

- Creating and Editing Line Chart in LibreOffice Calc [Tags: Data Visualization, FOSS]

- Line plot with multiple lines for page views for survival8 (Sep 2022)

ML3.4: Pie Plot

- Stratified sampling and fixed size sampling plus visualization using pie plot (Nov 2022)

- Plotting changes in Nifty50's top-5 sectors after last three market crashes Using Pie Plot, Bar Chart and Grouped Bar Chart

ML3.5: Choropleth and Cartography

Choropleth: A choropleth map is a type of statistical thematic map that uses pseudocolor, i.e., color corresponding with an aggregate summary of a geographic characteristic within spatial enumeration units, such as population density or per-capita income.

Cartography: the science or practice of drawing maps.

- Drawing a world heat map using 'cartopy'

- Ownership of Bicycle, 2-wheeler and car in India by percentages (2022)

- Global Footprint of Survival8 (Nov 2022)

ML3.6: Histogram

- Differences between 'bar graph' and histogram

- Histogram report and binning on Sales data

- An exercise in visualization (plotting line plot and multicolored histogram with -ve and +ve values) using Pandas and Matplotlib

ML4: Outlier Detection / Anomaly Detection

- Box Plot and Anomaly Detection in 1D [Tags: Data Visualization, Anomaly Detection]

- DCSO: Dynamic Combination of Detector Scores for Outlier Ensembles (Research paper, 2018)

- Unsupervised Outlier Detection Using PyOD

- Isolation based anomaly detection using iForest (2133360.2133363 / Research Paper)

- Density-based algorithm for anomaly detection (Adeel Hashmi / Research Paper)

- Isolation Forest Implementation using skLearn, PyOD, and spark-iForest

- Anomaly Detection using Scikit-Learn and "eif" PyPI package (for Extended Isolation Forest)

- Distributed Deep Learning Using Python Packages Elephas, Keras, Tensorflow and PySpark [Tags: Anomaly Detection, Deep Learning, Spark]

- Anomalies in 'survival8' Viewers' Stats (Mar 2022)

- Estimating the Contamination Factor For Unsupervised Anomaly Detection

ML5: Classification

ML5.1: Decision Tree

- Decision Tree Learning

- Interpretation of Decision Tree J48 Classifier output in Weka [Tags: FOSS, Machine Learning, Weka]

- Calculations for Info Gain and Gini Coefficient for Building Decision Tree

ML5.2: Miscellaneous of Classification

- Creating ML model, saving it, and creating Flask API [Tags: Flask, Machine Learning, Classification]

- kNN classification parallelized using MapReduce [Tags: Hadoop / Spark, Machine Learning]

- Elbow Method for identifying k in kMeans (clustering) and kNN (classification)

- Snorkel's Analysis Package Overview (v0.9.6, Sep 2020). This dicusses how to interpret classification results

- Improving a Classifier (ML) Using Snorkel's Slicing Technique

- Multi-label Classification using Python

- Naïve Bayes Classifier for Spam Filtering

- Weka classification experiment on Iris dataset [Tags: FOSS, Machine Learning, Weka]

ML6: Clustering

- Elbow Method for identifying k in kMeans (clustering) and kNN (classification)

- Weka clustering experiment on Iris dataset [Tags: FOSS, Machine Learning, Weka]

- 'Supervised classification-oriented measures' for Cluster Analysis

- Similarity-oriented measures for cluster analysis

ML7: Regression

- Linear Regression (Theory)

- Improvements over OLS (Forward Stepwise, Ridge, Lasso and LARS forms of Regression)

- Descriptive Statistics and Linear Regression Using 'statistics' module and 'statsmodels' module

- Hands-on 5 Regression Algorithms Using Scikit-Learn

- Demo of Linear Regression on Boston Housing Data Using Weka [Tags: FOSS, Machine Learning]

- Saving Model, Loading Model and Making Predictions for Linear Regression (in Weka)

- Linear Regression Using Java Code And Weka JAR [Tags: FOSS, Java, Machine Learning, Weka]

ML8: Association Mining Between Attributes

- Three Types of Input Data Format For Apriori Algorithm (Association Analysis)

- The Concept of Lift in Association Rules Mining

- Apriori Algorithm For Association Mining Using Weka's Supermarket Dataset

- Running Weka's Apriori on 9_TXN_5_ITEMS Dataset

- Interpretation of output from Weka for Apriori Algorithm

ML9: Weka Tool

- Demo of Linear Regression on Boston Housing Data Using Weka [Tags: FOSS, Machine Learning, Weka]

- Interpretation of Decision Tree J48 Classifier output in Weka [Tags: FOSS, Machine Learning, Weka]

- Weka classification experiment on Iris dataset [Tags: FOSS, Machine Learning, Weka]

- Weka clustering experiment on Iris dataset [Tags: FOSS, Machine Learning, Weka]

- Demo of Linear Regression on Boston Housing Data Using Weka [Tags: FOSS, Machine Learning]

- Saving Model, Loading Model and Making Predictions for Linear Regression (in Weka)

- Linear Regression Using Java Code And Weka JAR [Tags: FOSS, Java, Machine Learning, Weka]

- Apriori Algorithm For Association Mining Using Weka's Supermarket Dataset

- Running Weka's Apriori on 9_TXN_5_ITEMS Dataset

- Interpretation of output from Weka for Apriori Algorithm

- Machine Learning and Weka Interview (5 Questions) [Tags: Machine Learning Q&A, Weka Tool]

ML10: Traffic Prediction on my Blog

- Traffic Prediction on my Blog (Oct 2023)

- When not to use Poisson Distribution for prediction?

- Time Series Analysis and Forecasting Using Exponential Moving Average (A use case of traffic prediction on my blog)

ML11: Questions and Answers

- Machine Learning dose with ten Q&A (Set 1)

- Machine Learning Q&A (Set 2)

- Machine Learning Q&A (Set 3)

- LinkedIn Machine Learning Assessment Dump (Aug 2021)

- Machine Learning and Weka Interview (5 Questions) [Tags: Machine Learning Q&A, Weka Tool]

- ARIMA forecast for timeseries is one step ahead. Why? (Solved Interview Problem)

ML12: Miscellaneous

- Simple demonstration of how important data is for machine learning

- Reading a JSON file from the Google Drive in the Google Colab

- A case of cyclic dependencies between PyPI packages [Tags: Machine Learning, Python]

- Extracting Information From Search Engines [Tags: Machine Learning, Python]

-

Digging deeper into your toolbox (Viewing LDiA code of sklearn)

[Tags: FOSS, Machine Learning, Natural Language Processing]

ML13: Articles

- Machine Learning Resources (Dec 2019)

- Machine Learning Evolution (Jan 2020)

- Data Science Timeline (Aug 2020)

ML14: Our 'Machine Learning' Videos on YouTube

- Session 1 - Linear Regression (OLS Method and Theory) - 20210716

- Session 2 - Improvements over Linear Regression method of OLS (Forward Stepwise, Ridge, Lasso, LARS)

- Linear Regression Theory (2022-02-15)

- Pandas and Linear Regression in Code (Dated: 2022-Feb-16)

- Naive Bayes Classifier / Application: Spam Filtering / Dated: 2022 Feb 17

- Decision Trees Learning (2022 Feb 22)

- Perceptron in Machine Learning (24 Apr 2022)

Thursday, December 28, 2023

Mushroom Picker (A problem on Prefix Sums)

Problem

You are given a non-empty, zero-indexed array A of n (1 <= n <= 100000) integers a0, a1,..., an−1 (0 <= ai <= 1000). This array represents number of mushrooms growing on the consecutive spots along a road. You are also given integers k and m (0 <= k, m < n).

A mushroom picker is at spot number k on the road and should perform m moves. In one move she moves to an adjacent spot. She collects all the mushrooms growing on spots

she visits. The goal is to calculate the maximum number of mushrooms that the mushroom

picker can collect in m moves.

For example, consider array A such that:

A[0]=2, A[1]=3, A[2]=7, A[3]=5, A[4]=1, A[5]=3, A[6]=9

The mushroom picker starts at spot k = 4 and should perform m = 6 moves. She might

move to spots 3, 2, 3, 4, 5, 6 and thereby collect 1 + 5 + 7 + 3 + 9 = 25 mushrooms. This is the

maximal number of mushrooms she can collect.

Code

# Counting prefix sums

def prefix_sums(A):

n = len(A)

P = [0] * (n + 1)

for k in xrange(1, n + 1):

P[k] = P[k - 1] + A[k - 1]

return P

# Total of one slice

def count_total(P, x, y):

return P[y + 1] - P[x]

# Mushroom picker — O(n + m)

def mushrooms(A, k, m):

n = len(A)

result = 0

pref = prefix_sums(A)

# When we first take p steps on the left and return from their back in right direction.

for p in xrange(min(m, k) + 1):

left_pos = k - p

right_pos = min(n - 1, max(k, k + m - 2*p))

result = max(result, count_total(pref, left_pos, right_pos))

# When we first take p steps on the right and return from their back in left direction.

for p in xrange(min(m + 1, n - k)):

right_pos = k + p

left_pos = max(0, min(k, k - (m - 2*p)))

result=max(result, count_total(pref, left_pos, right_pos))

return result

Explanation (Part 1)

# When we first take p steps on the left and return from their back in right direction.

for p in xrange(min(m, k) + 1):

left_pos = k - p

right_pos = min(n - 1, max(k, k + m - 2*p))

result = max(result, count_total(pref, left_pos, right_pos))

Expression “for p in xrange(min(m, k) + 1)” has min(m, k) because we can only go m steps to the left or k steps to the left whichever number is minimum.

After going k steps, we cannot go past 0th position.

Or after taking m steps, we cannot take another step.

Expression “right_pos = min(n - 1, max(k, k + m – 2*p))” has ‘k+m-2*p’ because after taking p steps to the left, we are returning p steps back to position k, hence 2p.

And then number of steps left is: m – 2p

Then right position is identified by: m-2p steps after k ie, k+m-2p

Expression “right_pos = min(n - 1, max(k, k + m – 2*p))” has max(k, k + m – 2*p) because what if we take all the m steps to left and value of p becomes m.

Then there are no steps left to take to the right and right position is simply identified by k.

Similarly, for any value of p > m/2 (as in 0.6m or 0.75m), we would get same right position as k.

Explanation (Part 2)

# When we first take p steps on the right and return from their back in left direction.

for p in xrange(min(m + 1, n - k)):

right_pos = k + p

left_pos = max(0, min(k, k - (m - 2*p)))

result = max(result, count_total(pref, left_pos, right_pos))

Here in the expression “for p in xrange(min(m + 1, n – k))”, we have m+1 and not just m because xrange goes till m when passed the argument m+1. Similarly, we have n-k and not (n-1)-k because xrange goes till (n-1)-k when passed the argument n-k.

And we take minimum of m or (n-1)-k because that’s is the possible number of steps we can take on the right.

Here in the expression “left_pos = max(0, min(k, k - (m – 2*p)))”, we have k-(m-2p) because we take p steps on the right, then take those p steps back to k (on the left now).

Number of steps yet to take: m-2p

Left position would be identified by: k-(m-2p)

If p takes value m, which means we have taken m steps on the right, then we have no steps remaining to take to the left and left position is identified by k itself. (This logic is valid for any value of p > m/2)

Side Note

The way we calculating prefix sums and sums of subsections is without using the Python built-ins. But if we were to use Python built-ins, the code would look somewhat like this:def get_mushrooms_sum(A, start, end): return sum(A[start : end + 1])