Pre-read

Question

Solution from Book

Solution Using ChatGPT

Are you aware of the result E[xn, xm] = mu^2 + I . sigma ^ 2

I assume you meant $E[X_n \cdot X_m] = \mu^2 + I \cdot \sigma^2$, where $I$ is the Kronecker delta, which is 1 when $n = m$ and 0 otherwise.

In that case, $E[X_n \cdot X_m]$ simplifies to:

$$E[X_n \cdot X_m] = \begin{cases} \mu^2 + \sigma^2 & \text{if } n = m \\ \mu^2 & \text{if } n \neq m \end{cases}$$

This is because when $n = m$, you have the product of a variable with itself, and the additional $\sigma^2$ term is the variance of that variable. When $n \neq m$, and assuming the two variables are independent, the covariance term is zero, and you are left with the square of the mean.
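The two cases can be read off a single identity. The following is a short sketch, assuming that distinct variables $X_n$ and $X_m$ are independent draws (an assumption the question does not state explicitly):

$$E[X_n \cdot X_m] = \operatorname{Cov}(X_n, X_m) + E[X_n] \cdot E[X_m] = \operatorname{Cov}(X_n, X_m) + \mu^2$$

$$\operatorname{Cov}(X_n, X_m) = \begin{cases} \operatorname{Var}(X_n) = \sigma^2 & \text{if } n = m \\ 0 & \text{if } n \neq m \end{cases}$$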


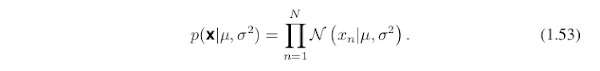

Certainly! Let's derive the expression for $E[X_n \cdot X_m]$ for two continuous random variables $X_n$ and $X_m$ from the same Gaussian distribution $\mathcal{N}(\mu, \sigma^2)$ with mean $\mu$ and variance $\sigma^2$.

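For two distinct, independently drawn variables, the joint density factorizes, so the expectation of the product is given by:

$$E[X_n \cdot X_m] = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x_n \cdot x_m \cdot f(x_n) \cdot f(x_m) \, dx_n \, dx_m$$

Since $X_n$ and $X_m$ are from the same distribution, $f$ is the same probability density function (PDF) in both factors, namely that of $\mathcal{N}(\mu, \sigma^2)$.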

Now, consider two cases: $n = m$ and $n \neq m$.

Case 1: n=m

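When $n = m$, the product $X_n \cdot X_m$ is the square of a single variable, so only one integral is needed:

$$E[X_n \cdot X_m] = E[X_n^2] = \int_{-\infty}^{\infty} x_n^2 \cdot f(x_n) \, dx_n$$

$$= \mu^2 + \sigma^2$$

This result comes from the fact that the second moment of a Gaussian distribution with mean $\mu$ and variance $\sigma^2$ is $\operatorname{Var}[X_n] + (E[X_n])^2 = \sigma^2 + \mu^2$.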
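The Case 1 value can be checked numerically. The sketch below approximates the integral of $x^2 f(x)$ with a plain trapezoidal rule; the helper names `gaussian_pdf` and `second_moment`, the truncation to 12 standard deviations, and the sample values of `mu` and `sigma` are illustrative assumptions, not part of the original derivation.

```python
import math


def gaussian_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def second_moment(mu, sigma, half_width=12.0, steps=200_000):
    """Approximate the integral of x^2 * f(x) with the trapezoidal rule.

    The infinite range is truncated to mu +/- half_width * sigma,
    where the Gaussian tails are negligible.
    """
    a = mu - half_width * sigma
    b = mu + half_width * sigma
    h = (b - a) / steps
    # End points carry weight 1/2 in the trapezoidal rule.
    total = 0.5 * (a * a * gaussian_pdf(a, mu, sigma)
                   + b * b * gaussian_pdf(b, mu, sigma))
    for i in range(1, steps):
        x = a + i * h
        total += x * x * gaussian_pdf(x, mu, sigma)
    return total * h


mu, sigma = 1.5, 2.0  # arbitrary example values
print("numerical integral:", second_moment(mu, sigma))
print("mu^2 + sigma^2    :", mu**2 + sigma**2)
```

The two printed numbers should agree closely, since the integrand decays fast enough that the truncated range captures essentially all of the mass.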

Case 2: n≠m

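$$E[X_n \cdot X_m] = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x_n \cdot x_m \cdot f(x_n) \cdot f(x_m) \, dx_n \, dx_m$$

$$= \left( \int_{-\infty}^{\infty} x_n \cdot f(x_n) \, dx_n \right) \cdot \left( \int_{-\infty}^{\infty} x_m \cdot f(x_m) \, dx_m \right)$$

$$= \mu \cdot \mu = \mu^2$$

This result comes from the fact that the integral of $x \cdot f(x)$ over the entire range for a Gaussian distribution with mean $\mu$ is $\mu$.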

Therefore, combining both cases:

$$E[X_n \cdot X_m] = \mu^2 + I \cdot \sigma^2$$

where $I$ is the Kronecker delta, equal to 1 when $n = m$ and 0 otherwise.
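As a sanity check on the combined result, here is a minimal Monte Carlo sketch. It assumes the variables are drawn independently from the same Gaussian; the function name `estimate_product_moments`, the number of variables, the trial count, and the seed are all illustrative choices.

```python
import random


def estimate_product_moments(mu, sigma, n_vars=3, n_trials=100_000, seed=0):
    """Monte Carlo estimate of E[X_n * X_m] for i.i.d. N(mu, sigma^2) draws.

    Returns an n_vars x n_vars nested list whose (n, m) entry is the
    sample average of X_n * X_m over n_trials independent trials.
    """
    rng = random.Random(seed)
    sums = [[0.0] * n_vars for _ in range(n_vars)]
    for _ in range(n_trials):
        xs = [rng.gauss(mu, sigma) for _ in range(n_vars)]
        for n in range(n_vars):
            for m in range(n_vars):
                sums[n][m] += xs[n] * xs[m]
    return [[s / n_trials for s in row] for row in sums]


mu, sigma = 1.0, 2.0  # arbitrary example values
moments = estimate_product_moments(mu, sigma)
for n, row in enumerate(moments):
    for m, value in enumerate(row):
        # Kronecker delta: add sigma^2 only on the diagonal.
        expected = mu**2 + (sigma**2 if n == m else 0.0)
        print(f"n={n} m={m} estimate={value:.3f} expected={expected:.3f}")
```

The diagonal entries should land near $\mu^2 + \sigma^2$ and the off-diagonal entries near $\mu^2$, up to sampling noise that shrinks as `n_trials` grows.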

Tags: Mathematical Foundations for Data Science,Machine Learning,
