1. BIOS

BIOS (pronounced /ˈbaɪɒs/, BY-oss; an acronym for Basic Input/Output System, also known as the System BIOS, ROM BIOS or PC BIOS) is firmware used to perform hardware initialization during the booting process (power-on startup) and to provide runtime services for operating systems and programs. The BIOS firmware comes pre-installed on a personal computer's system board and is the first software to run when the computer is powered on.

The name originates from the Basic Input/Output System used in the CP/M operating system in 1975. The BIOS, originally proprietary to the IBM PC, has been reverse engineered by companies looking to create compatible systems. The interface of that original system serves as a de facto standard.

The BIOS in modern PCs initializes and tests the system hardware components, then loads a boot loader from a mass storage device, which in turn initializes an operating system. In the era of DOS, the BIOS provided a hardware abstraction layer for the keyboard, display, and other input/output (I/O) devices, giving application programs and the operating system a standardized interface. More recent operating systems do not use the BIOS after loading and instead access the hardware components directly.

Most BIOS implementations are specifically designed to work with a particular computer or motherboard model, interfacing with the various devices that make up the complementary system chipset. Originally, BIOS firmware was stored in a ROM chip on the PC motherboard. In modern computer systems, the BIOS contents are stored in flash memory so that they can be rewritten without removing the chip from the motherboard. This allows easy end-user updates to the BIOS firmware, so new features can be added or bugs fixed, but it also makes it possible for the computer to become infected with BIOS rootkits. Furthermore, a BIOS upgrade that fails can brick the motherboard permanently unless the system includes some form of backup for this case.
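As a small practical aside, the sketch below shows one hedged way to tell from a running Linux system whether it was started through legacy BIOS or through UEFI, the successor interface. It assumes a Linux host, where the kernel exposes the directory `/sys/firmware/efi` only after a UEFI boot; the function name `firmware_interface` is made up for this illustration, and on other operating systems the check is not meaningful.

```python
import os


def firmware_interface(efi_dir="/sys/firmware/efi"):
    """Guess the boot firmware interface on Linux.

    Assumption: the kernel creates `efi_dir` only when the system
    was booted through UEFI. If it is missing we report "BIOS",
    which also covers UEFI machines booted in legacy (CSM) mode.
    """
    return "UEFI" if os.path.isdir(efi_dir) else "BIOS"


if __name__ == "__main__":
    print("Booted via:", firmware_interface())
```

The path is a parameter only so that the function can be exercised against a directory that is known to exist or not exist.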
Unified Extensible Firmware Interface (UEFI) is a successor to the legacy PC BIOS, aiming to address its technical shortcomings.

Ref: BIOS

2. Unified Extensible Firmware Interface (UEFI)

The Unified Extensible Firmware Interface (UEFI) is a specification that defines a software interface between an operating system and platform firmware. UEFI replaces the legacy Basic Input/Output System (BIOS) firmware interface originally present in all IBM PC-compatible personal computers, and most UEFI firmware implementations still provide support for legacy BIOS services. UEFI can support remote diagnostics and repair of computers, even with no operating system installed.

Intel developed the original Extensible Firmware Interface (EFI) specifications. Some of EFI's practices and data formats mirror those of Microsoft Windows. In 2005, UEFI deprecated EFI 1.10 (the final release of EFI). The Unified EFI Forum is the industry body that manages the UEFI specifications.

[Figure: EFI's position in the software stack]

History

The original motivation for EFI came during early development of the first Intel–HP Itanium systems in the mid-1990s. BIOS limitations (such as 16-bit processor mode, 1 MB of addressable space and PC AT hardware) had become too restrictive for the larger server platforms Itanium was targeting. The effort to address these concerns began in 1998 and was initially called the Intel Boot Initiative. It was later renamed Extensible Firmware Interface (EFI).

In July 2005, Intel ceased its development of the EFI specification at version 1.10 and contributed it to the Unified EFI Forum, which has developed the specification as the Unified Extensible Firmware Interface (UEFI). The original EFI specification remains owned by Intel, which exclusively provides licenses for EFI-based products, but the UEFI specification is owned by the UEFI Forum.

Version 2.0 of the UEFI specification was released on 31 January 2006. It added cryptography and "secure boot".
Version 2.1 of the UEFI specification was released on 7 January 2007. It added network authentication and the user interface architecture ("Human Interface Infrastructure" in UEFI). The latest UEFI specification, version 2.8, was approved in March 2019.

Tiano was the first open source UEFI implementation and was released by Intel in 2004. Tiano has since been superseded by EDK and EDK2 and is now maintained by the TianoCore community. In December 2018, Microsoft announced Project Mu, a fork of TianoCore EDK2 used in Microsoft Surface and Hyper-V products. The project promotes the idea of Firmware as a Service.

Advantages

The interface defined by the EFI specification includes data tables that contain platform information, and boot and runtime services that are available to the OS loader and OS. UEFI firmware provides several technical advantages over a traditional BIOS system:

1. Ability to use large disk partitions (over 2 TB) with a GUID Partition Table (GPT)
2. CPU-independent architecture
3. CPU-independent drivers
4. Flexible pre-OS environment, including network capability
5. Modular design
6. Backward and forward compatibility

Ref: UEFI

3. Serial ATA

Serial ATA (SATA, abbreviated from Serial AT Attachment) is a computer bus interface that connects host bus adapters to mass storage devices such as hard disk drives, optical drives, and solid-state drives. Serial ATA succeeded the earlier Parallel ATA (PATA) standard to become the predominant interface for storage devices. Serial ATA industry compatibility specifications originate from the Serial ATA International Organization (SATA-IO) and are then promulgated by the INCITS Technical Committee T13, AT Attachment (INCITS T13).
History

SATA was announced in 2000 to provide several advantages over the earlier PATA interface, such as reduced cable size and cost (seven conductors instead of 40 or 80), native hot swapping, faster data transfer through higher signaling rates, and more efficient transfer through an (optional) I/O queuing protocol.

The SATA-IO group collaboratively creates, reviews, ratifies, and publishes the interoperability specifications, the test cases and plugfests. As with many other industry compatibility standards, ownership of the SATA content is transferred to other industry bodies: primarily INCITS T13 and an INCITS T10 subcommittee (SCSI), a subgroup of T10 responsible for Serial Attached SCSI (SAS).

Before SATA's introduction in 2000, PATA was simply known as ATA. The "AT Attachment" (ATA) name originated after the 1984 release of the IBM Personal Computer AT, more commonly known as the IBM AT. The IBM AT's controller interface became a de facto industry interface for the inclusion of hard disks. "AT" was IBM's abbreviation for "Advanced Technology"; thus, many companies and organizations indicate that SATA is an abbreviation of "Serial Advanced Technology Attachment". However, the ATA specifications simply use the name "AT Attachment" to avoid possible trademark issues with IBM.

SATA host adapters and devices communicate via a high-speed serial cable over two pairs of conductors. In contrast, parallel ATA (the redesignation for the legacy ATA specifications) uses a 16-bit wide data bus with many additional support and control signals, all operating at a much lower frequency. To ensure backward compatibility with legacy ATA software and applications, SATA uses the same basic ATA and ATAPI command sets as legacy ATA devices.
SATA has replaced parallel ATA in consumer desktop and laptop computers; SATA's market share in the desktop PC market was 99% in 2008. PATA has mostly been replaced by SATA for every use and remains, in declining numbers, in industrial and embedded applications that use CompactFlash (CF) storage, which was designed around the legacy PATA standard. CFast, a 2008 standard intended to replace CompactFlash, is based on SATA.

Features

1. Hot plug
2. Advanced Host Controller Interface

Ref: Serial ATA

4. Hard disk drive interface

Hard disk drives are accessed over one of a number of bus types, including parallel ATA (PATA, also called IDE or EIDE; described before the introduction of SATA as ATA), Serial ATA (SATA), SCSI, Serial Attached SCSI (SAS), and Fibre Channel. Bridge circuitry is sometimes used to connect hard disk drives to buses with which they cannot communicate natively, such as IEEE 1394, USB, SCSI and Thunderbolt.

Ref: HDD Interface

5. Solid-state drive

A solid-state drive (SSD) is a solid-state storage device that uses integrated circuit assemblies to store data persistently, typically using flash memory, and functions as secondary storage in the hierarchy of computer storage. It is also sometimes called a solid-state device or a solid-state disk, even though SSDs lack the physical spinning disks and movable read–write heads used in hard disk drives (HDDs) or floppy disks.

While the price of SSDs has continued to decline over time, SSDs are (as of 2020) still more expensive per unit of storage than HDDs and are expected to remain so into the next decade.

SSDs based on NAND flash slowly leak charge over time if left for long periods without power. This causes worn-out drives (those that have exceeded their endurance rating) to start losing data, typically after one year (if stored at 30 °C) to two years (at 25 °C) in storage; for new drives it takes longer. Therefore, SSDs are not suitable for archival storage.
3D XPoint is a possible exception to this rule; however, it is a relatively new technology with unknown long-term data-retention characteristics.

Improvement of SSD characteristics over time

| Parameter | Started with (1991) | Developed to (2018) | Improvement |
|---|---|---|---|
| Capacity | 20 megabytes | 100 terabytes (Nimbus Data DC100) | 5-million-to-one |
| Price | US$50 per megabyte | US$0.372 per gigabyte (Samsung PM1643) | 134,408-to-one |

Ref: Solid-state drive

6. CUDA

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by Nvidia. It allows software developers and software engineers to use a CUDA-enabled graphics processing unit (GPU) for general-purpose processing, an approach termed GPGPU (General-Purpose computing on Graphics Processing Units). The CUDA platform is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels.

The CUDA platform is designed to work with programming languages such as C, C++, and Fortran. This accessibility makes it easier for specialists in parallel programming to use GPU resources, in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming. CUDA-powered GPUs also support programming frameworks such as OpenACC and OpenCL, as well as HIP, by compiling such code to CUDA. When CUDA was first introduced by Nvidia, the name was an acronym for Compute Unified Device Architecture, but Nvidia subsequently dropped the common use of the acronym.

Ref: CUDA

7. Graphics Processing Unit

A graphics processing unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles.
Modern GPUs are very efficient at manipulating computer graphics and image processing. Their highly parallel structure makes them more efficient than general-purpose central processing units (CPUs) for algorithms that process large blocks of data in parallel. In a personal computer, a GPU can be present on a video card or embedded on the motherboard. In certain CPUs, the GPU is embedded on the CPU die.

The term "GPU" was coined by Sony in reference to the PlayStation console's Toshiba-designed Sony GPU in 1994. The term was popularized by Nvidia in 1999, when it marketed the GeForce 256 as "the world's first GPU". It was presented as a "single-chip processor with integrated transform, lighting, triangle setup/clipping, and rendering engines". Rival ATI Technologies coined the term "visual processing unit" or VPU with the release of the Radeon 9700 in 2002.

GPU companies

Many companies have produced GPUs under a number of brand names. In 2009, Intel, Nvidia and AMD/ATI were the market share leaders, with 49.4%, 27.8% and 20.6% market share respectively. However, those numbers count Intel's integrated graphics solutions as GPUs. Not counting those, Nvidia and AMD controlled nearly 100% of the market as of 2018, with market shares of 66% and 33% respectively. In addition, S3 Graphics and Matrox produce GPUs. Modern smartphones mostly use Adreno GPUs from Qualcomm, PowerVR GPUs from Imagination Technologies and Mali GPUs from ARM.

Modern GPUs use most of their transistors to do calculations related to 3D computer graphics. In addition to the 3D hardware, today's GPUs include basic 2D acceleration and framebuffer capabilities (usually with a VGA compatibility mode). Newer cards such as the AMD/ATI HD5000-HD7000 even lack 2D acceleration; it has to be emulated by the 3D hardware.
GPUs were initially used to accelerate the memory-intensive work of texture mapping and rendering polygons, later adding units to accelerate geometric calculations such as the rotation and translation of vertices into different coordinate systems. Recent developments in GPUs include support for programmable shaders, which can manipulate vertices and textures with many of the same operations supported by CPUs, oversampling and interpolation techniques to reduce aliasing, and very high-precision color spaces. Because most of these computations involve matrix and vector operations, engineers and scientists have increasingly studied the use of GPUs for non-graphical calculations; they are especially suited to other embarrassingly parallel problems.

With the emergence of deep learning, the importance of GPUs has increased. Research done by Indigo found that, when training deep learning neural networks, GPUs can be 250 times faster than CPUs. The explosive growth of deep learning in recent years has been attributed to the emergence of general-purpose GPUs. There has been some level of competition in this area from ASICs, most prominently the Tensor Processing Unit (TPU) made by Google. However, ASICs require changes to existing code, and GPUs are still very popular.

Ref: GPU

8. Tensor processing unit

A tensor processing unit (TPU) is an AI accelerator application-specific integrated circuit (ASIC) developed by Google specifically for neural network machine learning, particularly using Google's own TensorFlow software. Google began using TPUs internally in 2015, and in 2018 made them available for third-party use, both as part of its cloud infrastructure and by offering a smaller version of the chip for sale.

Edge TPU

In July 2018, Google announced the Edge TPU. The Edge TPU is Google's purpose-built ASIC chip designed to run machine learning (ML) models for edge computing, meaning it is much smaller and consumes far less power than the TPUs hosted in Google datacenters (also known as Cloud TPUs). In January 2019, Google made the Edge TPU available to developers with a line of products under the Coral brand. The Edge TPU is capable of 4 trillion operations per second while using 2 W.

The product offerings include a single-board computer (SBC), a system on module (SoM), a USB accessory, a mini PCI-e card, and an M.2 card. The SBC Coral Dev Board and Coral SoM both run Mendel Linux OS, a derivative of Debian. The USB, PCI-e, and M.2 products function as add-ons to existing computer systems and support Debian-based Linux systems on x86-64 and ARM64 hosts (including Raspberry Pi).

The machine learning runtime used to execute models on the Edge TPU is based on TensorFlow Lite. The Edge TPU is only capable of accelerating forward-pass operations, which means it is primarily useful for performing inference (although it is possible to perform lightweight transfer learning on the Edge TPU). The Edge TPU also supports only 8-bit math, meaning that for a network to be compatible with the Edge TPU, it needs to be trained using the TensorFlow quantization-aware training technique.

On November 12, 2019, Asus announced a pair of single-board computers (SBCs) featuring the Edge TPU. The Asus Tinker Edge T and Tinker Edge R boards are designed for IoT and edge AI. The SBCs support the Android and Debian operating systems. Asus has also demoed a mini PC called the Asus PN60T featuring the Edge TPU.

On January 2, 2020, Google announced the Coral Accelerator Module and Coral Dev Board Mini, to be demoed at CES 2020 later the same month.
The Coral Accelerator Module is a multi-chip module featuring the Edge TPU, with PCIe and USB interfaces for easier integration. The Coral Dev Board Mini is a smaller SBC featuring the Coral Accelerator Module and the MediaTek 8167s SoC.

Ref: TPU

9. PyTorch

PyTorch is an open source machine learning library based on the Torch library, used for applications such as computer vision and natural language processing, and primarily developed by Facebook's AI Research lab (FAIR). It is free and open-source software released under the Modified BSD license. Although the Python interface is more polished and the primary focus of development, PyTorch also has a C++ interface.

A number of pieces of deep learning software are built on top of PyTorch, including Tesla Autopilot, Uber's Pyro, HuggingFace's Transformers, and Catalyst.

PyTorch provides two high-level features:

1. Tensor computing (like NumPy) with strong acceleration via graphics processing units (GPUs)
2. Deep neural networks built on a tape-based automatic differentiation system

PyTorch tensors: PyTorch defines a class called Tensor (torch.Tensor) to store and operate on homogeneous multidimensional rectangular arrays of numbers. PyTorch Tensors are similar to NumPy arrays, but can also be operated on by a CUDA-capable Nvidia GPU. PyTorch supports various sub-types of Tensors.

History: Facebook operated both PyTorch and Convolutional Architecture for Fast Feature Embedding (Caffe2), but models defined by the two frameworks were mutually incompatible. The Open Neural Network Exchange (ONNX) project was created by Facebook and Microsoft in September 2017 for converting models between frameworks. Caffe2 was merged into PyTorch at the end of March 2018.

Ref: PyTorch

10. TensorFlow

TensorFlow is a free and open-source software library for dataflow and differentiable programming across a range of tasks. It is a symbolic math library, and is also used for machine learning applications such as neural networks.
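To make "differentiable programming" and the "tape-based automatic differentiation" mentioned in the PyTorch section a little more concrete, here is a minimal sketch of reverse-mode automatic differentiation in plain Python. It is a toy under stated assumptions, not how PyTorch or TensorFlow are implemented: the names `Var` and `backward` are invented for this example, only scalar addition and multiplication are supported, and there is no GPU or tensor support.

```python
class Var:
    """A scalar value that remembers how it was computed."""

    def __init__(self, value, parents=()):
        self.value = value
        # Each entry is (parent Var, local derivative of self w.r.t. parent).
        self.parents = parents
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def backward(output):
    """Accumulate d(output)/d(node) into node.grad for every node."""
    order, seen = [], set()

    def visit(node):
        # Depth-first post-order: parents are listed before their consumers.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Walk consumers first so each node's grad is complete before it is used.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += local * node.grad


x = Var(3.0)
y = Var(4.0)
z = x * y + x      # forward pass records the computation
backward(z)        # reverse pass applies the chain rule
print(z.value, x.grad, y.grad)
```

For z = x·y + x the chain rule gives dz/dx = y + 1 and dz/dy = x, which is what the reverse pass accumulates in `x.grad` and `y.grad`.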
TensorFlow is used for both research and production at Google. It was developed by the Google Brain team for internal Google use and was released under the Apache License 2.0 on November 9, 2015.

TensorFlow is Google Brain's second-generation system. Version 1.0.0 was released on February 11, 2017. While the reference implementation runs on single devices, TensorFlow can run on multiple CPUs and GPUs (with optional CUDA and SYCL extensions for general-purpose computing on graphics processing units). TensorFlow is available on 64-bit Linux, macOS, Windows, and mobile computing platforms including Android and iOS. Its flexible architecture allows for the easy deployment of computation across a variety of platforms (CPUs, GPUs, TPUs), and from desktops to clusters of servers to mobile and edge devices.

TensorFlow computations are expressed as stateful dataflow graphs. The name TensorFlow derives from the operations that such neural networks perform on multidimensional data arrays, which are referred to as tensors. During the Google I/O Conference in June 2016, Jeff Dean stated that 1,500 repositories on GitHub mentioned TensorFlow, of which only 5 were from Google.

In December 2017, developers from Google, Cisco, RedHat, CoreOS, and CaiCloud introduced Kubeflow at a conference. Kubeflow allows operation and deployment of TensorFlow on Kubernetes. In March 2018, Google announced TensorFlow.js version 1.0 for machine learning in JavaScript. In January 2019, Google announced TensorFlow 2.0, which became officially available in September 2019. In May 2019, Google announced TensorFlow Graphics for deep learning in computer graphics.

Ref: TensorFlow

11. Theano (software)

Theano is a Python library and optimizing compiler for manipulating and evaluating mathematical expressions, especially matrix-valued ones. In Theano, computations are expressed using a NumPy-esque syntax and compiled to run efficiently on either CPU or GPU architectures.
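Both the dataflow graphs described for TensorFlow and the symbolic expressions described for Theano follow a "build the expression first, evaluate it later" pattern. The sketch below illustrates that pattern in plain Python. It is only an interpreter for a tiny expression graph: the names `Node`, `placeholder` and `evaluate` are hypothetical, and nothing here optimizes or compiles the graph for a CPU or GPU as the real libraries do.

```python
import operator


class Node:
    """One node of a symbolic expression graph.

    Building a Node performs no arithmetic; it only records
    which operation should later be applied to which inputs.
    """

    def __init__(self, op=None, inputs=(), name=None):
        self.op = op          # callable, or None for a placeholder
        self.inputs = inputs  # upstream Nodes
        self.name = name      # set only for placeholders

    def __add__(self, other):
        return Node(operator.add, (self, other))

    def __mul__(self, other):
        return Node(operator.mul, (self, other))


def placeholder(name):
    """A named input whose value is supplied at evaluation time."""
    return Node(name=name)


def evaluate(node, feed):
    """Recursively evaluate the graph rooted at `node` using `feed` values."""
    if node.op is None:
        return feed[node.name]
    args = [evaluate(inp, feed) for inp in node.inputs]
    return node.op(*args)


a = placeholder("a")
b = placeholder("b")
expr = a * b + a                           # graph construction only
result = evaluate(expr, {"a": 2, "b": 5})  # arithmetic happens here
print(result)
```

Because the graph is separate from the data, the same `expr` can be evaluated again with different inputs, which is the property that lets a real library analyze and compile the expression once before running it many times.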
Theano is an open source project primarily developed by the Montreal Institute for Learning Algorithms (MILA) at the Université de Montréal. The name of the software references the ancient philosopher Theano, long associated with the development of the golden mean.

On 28 September 2017, Pascal Lamblin posted a message from Yoshua Bengio, head of MILA: major development would cease after the 1.0 release due to competing offerings by strong industrial players. Theano 1.0.0 was then released on 15 November 2017. On 17 May 2018, Chris Fonnesbeck wrote on behalf of the PyMC development team that the PyMC developers would officially assume control of Theano maintenance once the MILA developers stepped down.

Ref: Theano

Pages

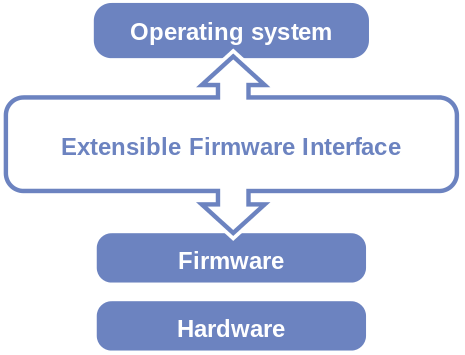

- Index of Lessons in Technology

- Index of Book Summaries

- Index of Book Lists And Downloads

- Index For Job Interviews Preparation

- Index of "Algorithms: Design and Analysis"

- Python Course (Index)

- Data Analytics Course (Index)

- Index of Machine Learning

- Postings Index

- Index of BITS WILP Exam Papers and Content

- Lessons in Investing

- Index of Math Lessons

- Index of Management Lessons

- Book Requests

- Index of English Lessons

- Index of Medicines

- Index of Quizzes (Educational)

Wednesday, June 17, 2020

Technology Listing related to Deep Learning (Jun 2020)

Labels:

Technology
