
Tuesday, July 12, 2022

Student Update (2022-Jul-12)

Index of Journals

Tags: Student Update, Journal

Counting

- Komal Kumari, Class: 4, Till: 20
- Kusum Kumari, Class: 5, Till: 89
- Md Nihal, Class: 6, Till: 29

Tables

- Yash Kashyap, Class: 5, Tables Till: 7

BODMAS

- Shiva Patel, Class: 6, Level: 2
- Roshan Null, Class: 7B, Level: 2 (could not solve subtraction of a bigger number from a smaller one)

Monday, July 11, 2022

Natural Language Processing Questions and Answers (Set 1 of 11 Ques)

1. Which of the following are areas where NLP can be used?
- Automatic Text Summarization
- Automatic question answering systems
- Information retrieval from documents
- News Categorization
Ans: All four: Automatic Text Summarization, Automatic question answering systems, Information retrieval from documents, News Categorization

2. Which of the following is/are among the many challenges of NLP?
- Ambiguity in the data
- High variation in data
- Insufficient tagged data
- Lack of processing power
Ans: Ambiguity in the data; High variation in data; Insufficient tagged data

3. (Single choice) Tokenization is:
- removal of punctuation in the data
- extraction of unique words in the data
- removal of stop words
- splitting the text into tokens (Correct)

4. (Single choice) The output of the nltk.word_tokenize() method is a:
- Tuple
- String
- List (Correct)
- Dictionary

5. (Single choice) Stemming is the process of:
- Reducing a sentence to its summary
- Reducing a word to its base form / dictionary form
- Reducing a word to its root word (Correct)
- Removing unwanted tokens from text

6. (Single choice) The output of lemmatize("went"), with "went" treated as a verb, is:
- went
- go (Correct)

7. (Single choice) What would be the output of the code below?
import re
flight_details = "Flight Indigo Airlines a2138"
if re.search(r"Airlines", flight_details) is not None:
    print("Match found: Airlines")
else:
    print("Match not found")
- Match found: Airlines (Correct)
- Match not found

8. (Single choice) Lemmatization is the process of:
- Reducing a sentence to its summary
- Reducing a word to its base form / dictionary form (Correct)
- Reducing a word to its root word
- Removing unwanted tokens from text
Ref: https://nlp.stanford.edu/IR-book/html/htmledition/stemming-and-lemmatization-1.html

9. (Multiple choice) Which of these are benefits of annotation (POS tagging)?
a. Provides context to text in NLP
b. Adds metadata to the text
c. Helps in entity recognition
d. Reduces size of data
Correct answers: a, b, c

10. (Single choice) Which one of the following from the NLTK package assigns the same tag to all the words?
a. Default Tagger (Correct)
b. Unigram Tagger
c. N-gram Tagger
d. Regular Expression Tagger

11. Which of these from the NLTK package is considered a context-dependent tagger?
a. N-gram Tagger (Correct)
b. Regular Expression Tagger
c. Unigram Tagger

Tags: Natural Language Processing,
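Questions 10 and 11 contrast taggers that ignore context with taggers that use it. The sketch below is a minimal pure-Python illustration, not NLTK's API: the function names, the tag set, and the suffix patterns are assumptions chosen for the example. Neither tagger looks at neighbouring words, which is why the N-gram tagger, which conditions on the preceding tags, is the context-dependent one.

```python
import re

def default_tag(tokens, tag="NN"):
    """Hypothetical default tagger: every token gets the same tag."""
    return [(token, tag) for token in tokens]

# Hypothetical (pattern, tag) rules, tried in order; the first match wins.
SUFFIX_RULES = [
    (r".*ing$", "VBG"),          # gerunds / present participles
    (r".*ed$", "VBD"),           # simple past
    (r"^-?\d+(\.\d+)?$", "CD"),  # cardinal numbers
    (r".*", "NN"),               # fallback: noun
]

def regex_tag(tokens, rules=SUFFIX_RULES):
    """Hypothetical regex tagger: tags each token by its spelling alone."""
    tagged = []
    for token in tokens:
        for pattern, tag in rules:
            if re.match(pattern, token):
                tagged.append((token, tag))
                break
    return tagged

if __name__ == "__main__":
    tokens = ["the", "dog", "jumped", "42", "running"]
    print(default_tag(tokens))
    print(regex_tag(tokens))
```

Note that the regex tagger still labels "the" as a noun: spelling-only rules cannot use the surrounding words to decide.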

Sunday, July 10, 2022

Tuesday, July 5, 2022

Practice identifying number in a series

Notes

Arithmetic Progression

An arithmetic progression or arithmetic sequence is a sequence of numbers such that the difference between the consecutive terms is constant. For instance, the sequence 5, 7, 9, 11, 13, 15... is an arithmetic progression with a common difference of 2.
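A minimal Python sketch of this definition: the n-th term of an arithmetic progression with first term a1 and common difference d is a1 + (n - 1) * d, and a finite sequence is an AP exactly when all its consecutive differences are equal. The function names are illustrative.

```python
def ap_terms(a1, d, n):
    """First n terms of an arithmetic progression: a1, a1 + d, a1 + 2d, ..."""
    return [a1 + (k - 1) * d for k in range(1, n + 1)]

def common_difference(seq):
    """Return the common difference if seq is an AP, otherwise None."""
    diffs = {b - a for a, b in zip(seq, seq[1:])}
    return diffs.pop() if len(diffs) == 1 else None

print(ap_terms(5, 2, 6))                          # [5, 7, 9, 11, 13, 15]
print(common_difference([5, 7, 9, 11, 13, 15]))  # 2
print(common_difference([2, 6, 18, 54]))          # None
```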

Geometric Progression

In mathematics, a geometric progression, also known as a geometric sequence, is a sequence of non-zero numbers where each term after the first is found by multiplying the previous one by a fixed, non-zero number called the common ratio. For example, the sequence 2, 6, 18, 54, ... is a geometric progression with common ratio 3. Similarly 10, 5, 2.5, 1.25, ... is a geometric sequence with common ratio 1/2.

Examples of a geometric sequence are the powers $r^k$ of a fixed non-zero number $r$, such as $2^k$ and $3^k$. The general form of a geometric sequence is:

$$a,\ ar,\ ar^2,\ ar^3,\ ar^4,\ \ldots$$

where r ≠ 0 is the common ratio and a ≠ 0 is a scale factor, equal to the sequence's start value.
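The general form a, ar, ar^2, ... translates directly into code. In this sketch (function names are illustrative) the ratios are computed with Python's fractions module so that a ratio such as 1/2 stays exact; terms must be non-zero, as the definition requires.

```python
from fractions import Fraction

def gp_terms(a, r, n):
    """First n terms of a geometric progression: a, ar, ar^2, ..."""
    return [a * r**k for k in range(n)]

def common_ratio(seq):
    """Return the common ratio if seq is a GP, otherwise None.

    Terms must be non-zero (a zero term raises ZeroDivisionError).
    """
    ratios = {Fraction(b) / Fraction(a) for a, b in zip(seq, seq[1:])}
    return ratios.pop() if len(ratios) == 1 else None

print(gp_terms(2, 3, 4))                 # [2, 6, 18, 54]
print(common_ratio([2, 6, 18, 54]))      # 3
print(common_ratio([10, 5, 2.5, 1.25]))  # 1/2
```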

The distinction between a progression and a series is that a progression is a sequence, whereas a series is a sum.


Learn Subtraction by Counting Sticks

Note: We will subtract the smaller number from the bigger number.
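The counting-sticks method can be sketched in Python: lay out one stick for each unit of the bigger number, then take away one stick for each unit of the smaller number; the sticks that remain are the answer. This is an illustration of the method for non-negative whole numbers, not the code behind the practice widget.

```python
def subtract_with_sticks(a, b):
    """Subtract the smaller of a and b from the bigger one by removing sticks."""
    bigger, smaller = max(a, b), min(a, b)
    sticks = ["|"] * bigger      # one stick per unit of the bigger number
    for _ in range(smaller):     # take away one stick per unit of the smaller number
        sticks.pop()
    return len(sticks), "".join(sticks)

count, picture = subtract_with_sticks(3, 7)
print(picture, "=", count)       # |||| = 4
```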


Solved problems on identifying number in a series

Questions

Ques 1: 6, 36, 216, [_]
Ques 2: 8, 19, 41, 85, [_]
Ques 3: 17, 17, 34, 102, [_]
Ques 4: 568, 579, 601, 634, [_]
Ques 5: 858, 848, 828, 788, [_]
Ques 6: 1123, 1128, 1138, 1158, [_]

Ques 1: 6, 36, 216, [_]
Ans: 6^1 = 6, 6^2 = 36, 6^3 = 216, 6^4 = 1296
Answer is: 1296

Ques 2: 8, 19, 41, 85, [_]
Ans: 8 * 2 + 3 = 19, 19 * 2 + 3 = 41 (i.e., 38 + 3), 41 * 2 + 3 = 85, 85 * 2 + 3 = 173
Answer is: 173

Ques 3: 17, 17, 34, 102, [_]
Ans: 17 * 1 = 17, 17 * 2 = 34, 34 * 3 = 102, 102 * 4 = 408
Answer is: 408

Ques 4: 568, 579, 601, 634, [_]
Ans: 568 + 11 = 579, 579 + 22 = 601, 601 + 33 = 634, 634 + 44 = 678
Answer is: 678

Ques 5: 858, 848, 828, 788, [_]
Ans: 858 - 10 = 848, 848 - 20 = 828, 828 - 40 = 788, 788 - 80 = 708
Answer is: 708

Ques 6: 1123, 1128, 1138, 1158, [_]
Ans: 1123 + 5 = 1128, 1128 + 10 = 1138, 1138 + 20 = 1158, 1158 + 40 = 1198
Answer is: 1198
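The rules worked out above can be checked mechanically. In this sketch each rule is written as a function of the step number k (1 for the first step) and the previous term; next_term first confirms that the rule reproduces every given term and only then applies it once more. The encoding of each rule is an assumption of the sketch, read off the worked answers.

```python
def next_term(seq, rule):
    """Check that rule(k, previous) reproduces seq, then return the next term."""
    for k in range(1, len(seq)):
        if rule(k, seq[k - 1]) != seq[k]:
            raise ValueError(f"rule does not produce term {k + 1} of {seq}")
    return rule(len(seq), seq[-1])

puzzles = {
    "Ques 1": ([6, 36, 216], lambda k, t: t * 6),                             # powers of 6
    "Ques 2": ([8, 19, 41, 85], lambda k, t: t * 2 + 3),                      # double, then add 3
    "Ques 3": ([17, 17, 34, 102], lambda k, t: t * k),                        # multiply by 1, 2, 3, ...
    "Ques 4": ([568, 579, 601, 634], lambda k, t: t + 11 * k),                # add 11, 22, 33, ...
    "Ques 5": ([858, 848, 828, 788], lambda k, t: t - 10 * 2 ** (k - 1)),     # subtract 10, 20, 40, ...
    "Ques 6": ([1123, 1128, 1138, 1158], lambda k, t: t + 5 * 2 ** (k - 1)),  # add 5, 10, 20, ...
}

for name, (seq, rule) in puzzles.items():
    print(name, next_term(seq, rule))
```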

Digging deeper into your toolbox (Viewing LDiA code of sklearn)

Tags: Machine Learning, Natural Language Processing, FOSS

Digging deeper into your toolbox

You can find the source code path in the __file__ attribute of any Python module, such as sklearn.__file__. And in IPython (jupyter console), you can view the source code for any function, class, or object with ??, like LDA??:

>>> import sklearn
>>> sklearn.__file__
'/Users/hobs/anaconda3/envs/conda_env_nlpia/lib/python3.6/site-packages/sklearn/__init__.py'
>>> from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA
>>> from sklearn.decomposition import LatentDirichletAllocation as LDiA
>>> LDA??
Init signature: LDA(solver='svd', shrinkage=None, priors=None, n_components=None, store_covariance=False, tol=0.0001)
Source:
class LinearDiscriminantAnalysis(BaseEstimator, LinearClassifierMixin, TransformerMixin):
    """Linear Discriminant Analysis
    A classifier with a linear decision boundary, generated by fitting class conditional densities to the data and using Bayes' rule.
    The model fits a Gaussian density to each class, assuming that all classes share the same covariance matrix.
...

This won’t work on functions and classes that are extensions, whose source code is hidden within a compiled C++ module.
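Outside IPython, the same two lookups are available from the standard library: a module's __file__ attribute and inspect.getsource(). This sketch uses the standard-library json module as a stand-in for sklearn so that it runs without extra installs; it also shows the limitation mentioned above, since functions implemented in C, such as the built-in len, have no Python source to show.

```python
import inspect
import json

# Path of the file the module was loaded from
print(json.__file__)

# Source code of a pure-Python function (first line only, to keep output short)
print(inspect.getsource(json.dumps).splitlines()[0])

# Built-ins implemented in C have no Python source
try:
    inspect.getsource(len)
except TypeError as err:
    print("No source available:", err)
```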

Semantic Analysis of Words and Sentences in Natural Language Processing

Coming up with a numerical representation of the semantics (meaning) of words and sentences can be tricky. This is especially true for “fuzzy” languages like English, which has multiple dialects and many different interpretations of the same words. Even formal English text written by an English professor can’t avoid the fact that most English words have multiple meanings, a challenge for any new learner, including machine learners.

Tags: Natural Language Processing, Polysemy

This concept of words with multiple meanings is called polysemy: the existence of words and phrases with more than one meaning. Here are some ways in which polysemy can affect the semantics of a word or statement. We list them here for you to appreciate the power of LSA. You don’t have to worry about these challenges; LSA takes care of all this for us:

- Homonyms: Words with the same spelling and pronunciation, but different meanings
- Zeugma: Use of two meanings of a word simultaneously in the same sentence

And LSA also deals with some of the challenges of polysemy in a voice interface, a chatbot that you can talk to, like Alexa or Siri:

- Homographs: Words spelled the same, but with different pronunciations and meanings
- Homophones: Words with the same pronunciation, but different spellings and meanings (an NLP challenge with voice interfaces)

Imagine if you had to deal with a statement like the following, if you didn’t have tools like LSA to deal with it:

She felt ... less. She felt tamped down. Dim. More faint. Feint. Feigned. Fain.
--Patrick Rothfuss

Monday, July 4, 2022

Stemming (A Natural Language Processing Challenge)

Tags: Natural Language Processing

Challenges (a preview of stemming)

As an example of why feature extraction from text is hard, consider stemming—grouping the various inflections of a word into the same “bucket” or cluster. Very smart people spent their careers developing algorithms for grouping inflected forms of words together based only on their spelling. Imagine how difficult that is. Imagine trying to remove verb endings like “ing” from “ending” so you’d have a stem called “end” to represent both words. And you’d like to stem the word “running” to “run,” so those two words are treated the same. And that’s tricky, because you have to remove not only the “ing” but also the extra “n.” But you want the word “sing” to stay whole. You wouldn’t want to remove the “ing” ending from “sing” or you’d end up with a single letter “s.” Or imagine trying to discriminate between a pluralizing “s” at the end of a word like “words” and a normal “s” at the end of words like “bus” and “lens.” Do isolated individual letters in a word or parts of a word provide any information at all about that word’s meaning? Can the letters be misleading? Yes and yes. In this chapter we show you how to make your NLP pipeline a bit smarter by dealing with these word spelling challenges using conventional stemming approaches. You can try for yourself statistical clustering approaches that only require you to amass a collection of natural language text containing the words you’re interested in. From that collection of text, the statistics of word usage will reveal “semantic stems” (actually, more useful clusters of words like lemmas or synonyms), without any handcrafted regular expressions or stemming rules.
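To make the difficulty concrete, here is a deliberately naive, hand-written suffix stripper. It is a hypothetical illustration, not the Porter stemmer or any library's algorithm, and it encodes only the cases in the paragraph above: strip "ing" unless the remaining stem has no vowel, undo a doubled final consonant, and strip a plural "s" except after "ss", "us", or "ns".

```python
VOWELS = set("aeiou")

def naive_stem(word):
    """A hand-written suffix stripper covering only the examples in the text."""
    w = word.lower()
    if w.endswith("ing"):
        stem = w[:-3]
        # "sing" -> "s" would leave no vowel, so keep the word whole
        if not any(c in VOWELS for c in stem):
            return w
        # "running" -> "runn" -> "run": undo a doubled final consonant
        if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in VOWELS:
            stem = stem[:-1]
        return stem
    # plural "s" ("words"), but not the "s" that ends "bus" or "lens"
    if w.endswith("s") and not w.endswith(("ss", "us", "ns")):
        return w[:-1]
    return w

for w in ["ending", "running", "sing", "words", "bus", "lens", "falling", "pens"]:
    print(w, "->", naive_stem(w))
```

Every rule that fixes one word breaks another: the doubled-consonant rule turns "falling" into "fal", and the "ns" exception that protects "lens" also leaves "pens" unstemmed.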

Learn Addition by Counting Sticks


Sunday, July 3, 2022

Expansion of numbers into place values


Ques: Write the expanded form of the following number using place values:


Tags: Mathematical Foundations for Data Science,
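The expanded form writes a number as the sum of the values of its digits in their places. A short Python sketch for non-negative integers (the function name is illustrative); zero digits are left out, as is usual when writing the expanded form.

```python
def expanded_form(n):
    """Split a non-negative integer into its non-zero place values."""
    digits = str(n)
    parts = []
    for i, ch in enumerate(digits):
        place = 10 ** (len(digits) - 1 - i)  # 1, 10, 100, ... counted from the right
        value = int(ch) * place
        if value:                             # zero digits add nothing to the sum
            parts.append(value)
    return parts

parts = expanded_form(40372)
print(" + ".join(str(p) for p in parts))      # 40000 + 300 + 70 + 2
```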


Saturday, July 2, 2022

Practical applications of Natural Language Processing

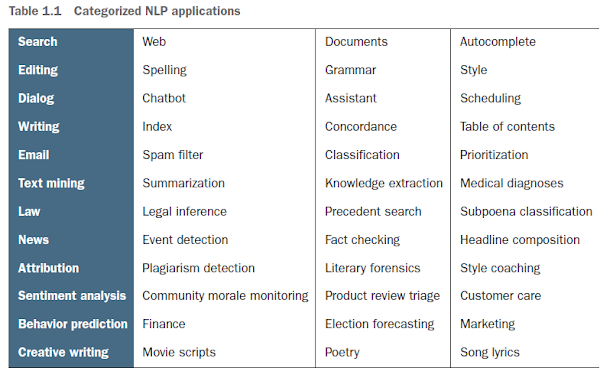

Natural language processing is everywhere. It’s so ubiquitous that some of the examples in the table below may surprise you.

Tags: Natural Language Processing

Categorized NLP applications

A search engine can provide more meaningful results if it indexes web pages or document archives in a way that takes into account the meaning of natural language text. Autocomplete uses NLP to complete your thought and is common among search engines and mobile phone keyboards. Many word processors, browser plugins, and text editors have spelling correctors, grammar checkers, concordance composers, and most recently, style coaches. Some dialog engines (chatbots) use natural language search to find a response to their conversation partner’s message.

NLP pipelines that generate (compose) text can be used not only to compose short replies in chatbots and virtual assistants, but also to assemble much longer passages of text. The Associated Press uses NLP “robot journalists” to write entire financial news articles and sporting event reports.[7] Bots can compose weather forecasts that sound a lot like what your hometown weather person might say, perhaps because human meteorologists use word processors with NLP features to draft scripts.

NLP spam filters in early email programs helped email overtake telephone and fax communication channels in the '90s. And the spam filters have retained their edge in the cat-and-mouse game between spam filters and spam generators for email, but may be losing in other environments like social networks. An estimated 20% of the tweets about the 2016 US presidential election were composed by chatbots.[8] These bots amplify their owners’ and developers’ viewpoints. And these “puppet masters” tend to be foreign governments or large corporations with the resources and motivation to influence popular opinion.

NLP systems can generate more than just short social network posts. NLP can be used to compose lengthy movie and product reviews on Amazon and elsewhere. Many reviews are the creation of autonomous NLP pipelines that have never set foot in a movie theater or purchased the product they’re reviewing.

There are chatbots on Slack, IRC, and even customer service websites, places where chatbots have to deal with ambiguous commands or questions. And chatbots paired with voice recognition and generation systems can even handle lengthy conversations with an indefinite goal or “objective function” such as making a reservation at a local restaurant.[9] NLP systems can answer phones for companies that want something better than a phone tree but don’t want to pay humans to help their customers.

NOTE

In the Duplex demonstration at Google I/O, Google’s engineers and managers overlooked concerns about the ethics of teaching chatbots to deceive humans. We all ignore this dilemma when we happily interact with chatbots on Twitter and other anonymous social networks, where bots don’t share their pedigree. With bots that can so convincingly deceive us, the AI control problem looms, and Yuval Harari’s cautionary forecast of “Homo Deus” may come sooner than we think.

NLP systems exist that can act as email “receptionists” for businesses or executive assistants for managers. These assistants schedule meetings and record summary details in an electronic Rolodex, or CRM (customer relationship management system), interacting with others by email on their boss’s behalf. Companies are putting their brand and face in the hands of NLP systems, allowing bots to execute marketing and messaging campaigns. And some inexperienced daredevil NLP textbook authors are letting bots author several sentences in their book.

Thursday, June 30, 2022

Practice Division


Enter only the integer part of the solution.
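The integer part of a division is the quotient from long division, with a remainder left over. A sketch of the schoolbook procedure in Python for non-negative whole numbers (the function name is illustrative); its result agrees with Python's built-in divmod.

```python
def long_division(dividend, divisor):
    """Schoolbook long division of non-negative integers; returns (quotient, remainder)."""
    if divisor <= 0 or dividend < 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")
    quotient_digits = []
    remainder = 0
    for ch in str(dividend):
        remainder = remainder * 10 + int(ch)  # bring down the next digit
        q = remainder // divisor              # how many times the divisor fits (0 to 9)
        quotient_digits.append(str(q))
        remainder -= q * divisor
    return int("".join(quotient_digits)), remainder

print(long_division(7453, 6))   # (1242, 1): the integer part is 1242
```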

Tags: Mathematical Foundations for Data Science,

Wednesday, June 29, 2022

Practice for Place Value and Face Value


Ques: Write the Place Values and Face Values for the following number:

| Number | Place Value | Face Value |
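The face value of a digit is the digit itself, while its place value depends on its position. This sketch assumes the usual school convention that the place value is the digit multiplied by the power of 10 for its position (so the 8 in 5804 has place value 800); some textbooks name the position (hundreds) instead. The function name is illustrative.

```python
def place_and_face_values(n):
    """Rows of (digit, place value, face value) for a non-negative integer."""
    digits = str(n)
    rows = []
    for i, ch in enumerate(digits):
        face = int(ch)                              # face value: the digit itself
        place = face * 10 ** (len(digits) - 1 - i)  # place value: digit times its position's power of 10
        rows.append((ch, place, face))
    return rows

for digit, place, face in place_and_face_values(5804):
    print(digit, place, face)
```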

Creating a Taxonomy for BBC News Articles (Part 10 - Topic modeling using Latent Dirichlet Allocation from sklearn and visualization using pyLDAvis)

from __future__ import print_function  # no-op on Python 3; kept from the original notebook

import pyLDAvis
import pyLDAvis.sklearn  # in pyLDAvis 3.4+ this module is named pyLDAvis.lda_model
pyLDAvis.enable_notebook()

from sklearn.datasets import fetch_20newsgroups
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
from sklearn.decomposition import LatentDirichletAllocation

import spacy
import pandas as pd
import warnings
warnings.filterwarnings("ignore")

from preprocess import preprocess_text  # local helper module, not shown in this post
import matplotlib.pyplot as plt

nlp = spacy.load("en_core_web_sm")

def remove_verbs_and_adjectives(text):
    # Despite the name, this drops every token whose spaCy part-of-speech tag is
    # in the list below, so essentially only nouns and proper nouns survive.
    doc = nlp(text)
    additional_stopwords = ["new", "like", "many", "also", "even", "get", "say", "according", "would", "could",
                            "know", "made", "make", "come", "didnt", "dont", "doesnt", "go", "may", "back",
                            "going", "including", "added", "set", "take", "want", "use",
                            "000", "1", "2", "3", "4", "5", "6", "7", "8", "9", "10", "11", "20", "u",
                            "one", "two", "three", "year", "first", "last", "good", "best", "well", "told", "said"]
    days_of_week = ["monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday"]
    additional_stopwords += days_of_week
    words = [token.text for token in doc if (token.pos_ not in ["VERB", "NUM", "ADJ", "ADV", "ADP", "SCONJ", "DET",
                                                                 "X", "INTJ", "CCONJ", "AUX", 'PART', 'PRON', 'PUNCT', 'SYM'])]
    words = [x for x in words if len(x) > 2]                    # drop very short tokens
    words = [x for x in words if x not in additional_stopwords]  # drop domain-specific stop words
    doc = " ".join(words)
    return doc

df1 = pd.read_csv('bbc_news_train.csv')

%%time

df1['Preprocess_text'] = df1['Text'].apply(preprocess_text)

df1['Preprocess_text'] = df1['Preprocess_text'].apply(remove_verbs_and_adjectives)

tf_vectorizer = CountVectorizer(strip_accents = 'unicode',

stop_words = 'english',

lowercase = True,

token_pattern = r'\b[a-zA-Z]{3,}\b',

max_df = 0.5,

min_df = 10)

dtm_tf = tf_vectorizer.fit_transform(df1['Preprocess_text'])

%%time

lda_tf = LatentDirichletAllocation(n_components=5, random_state=0)

lda_tf.fit(dtm_tf) # LatentDirichletAllocation(n_components=5, random_state=0)

pyLDAvis.sklearn.prepare(lda_tf, dtm_tf, tf_vectorizer)

1. High Level View

2. Exploring Sports Cluster

3. Exploring the term "Team"

def plot_top_words(model, feature_names, n_top_words, title):
    # One horizontal bar chart per topic (5 topics -> a 1 x 5 grid)
    #fig, axes = plt.subplots(2, 5, figsize=(30, 15), sharex=True)
    fig, axes = plt.subplots(1, 5, figsize=(30, 15), sharex=True)
    axes = axes.flatten()
    for topic_idx, topic in enumerate(model.components_):
        # indices of the n_top_words largest weights, largest first
        top_features_ind = topic.argsort()[: -n_top_words - 1 : -1]
        top_features = [feature_names[i] for i in top_features_ind]
        weights = topic[top_features_ind]
        ax = axes[topic_idx]
        ax.barh(top_features, weights, height=0.7)
        ax.set_title(f"Topic {topic_idx + 1}", fontdict={"fontsize": 30})
        ax.invert_yaxis()
        ax.tick_params(axis="both", which="major", labelsize=20)
        for i in "top right left".split():
            ax.spines[i].set_visible(False)
    fig.suptitle(title, fontsize=40)
    plt.subplots_adjust(top=0.90, bottom=0.05, wspace=0.90, hspace=0.3)
    plt.show()

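n_top_words = 20
# get_feature_names() was removed in scikit-learn 1.2; get_feature_names_out() (1.0+) replaces it.
# .tolist() turns the returned NumPy array into plain Python strings.
feature_names = tf_vectorizer.get_feature_names_out().tolist()
plot_top_words(lda_tf, feature_names, n_top_words, "Topics in LDA model")

# Print the top words and their weights for each topic
for topic_idx, topic in enumerate(lda_tf.components_):
    top_features_ind = topic.argsort()[: -n_top_words - 1 : -1]
    top_features = [feature_names[i] for i in top_features_ind]
    weights = topic[top_features_ind]
    print()
    print(top_features)
    print(weights)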

['film', 'market', 'award', 'sale', 'growth', 'company', 'price', 'bank', 'rate', 'economy', 'share', 'director', 'month', 'actor', 'dollar', 'firm', 'china', 'star', 'profit', 'analyst']

[782.39034709 366.28139534 322.86071389 284.59708969 273.47636047

270.04200023 246.46217158 222.28393084 221.57275007 220.44223774

217.44204193 217.16230918 210.70770464 208.58603001 205.89147443

200.87216491 200.44549958 191.36606456 181.4342261 173.2076483 ]

['music', 'band', 'company', 'court', 'club', 'album', 'number', 'group', 'chart', 'song', 'record', 'sale', 'london', 'case', 'singer', 'charge', 'drug', 'day', 'deal', 'bid']

[252.53858311 188.25792769 185.23003226 148.14318776 145.85605702

144.19663752 140.08497961 135.82856133 129.27287007 128.42244733

125.84229174 116.22180106 116.07193359 109.62077913 109.5121517

108.17668145 105.2106134 104.29483314 102.85209462 101.69194202]

['game', 'time', 'england', 'player', 'world', 'team', 'match', 'win', 'cup', 'minute', 'season', 'champion', 'ireland', 'injury', 'wale', 'france', 'goal', 'chelsea', 'week', 'coach']

[602.21909322 378.09944132 341.67737838 336.05299822 279.3877861

255.26640777 243.15720494 242.95257795 214.19830526 191.78217721

188.27405349 185.72607709 181.66983554 178.32170657 174.85059546

166.49655125 159.49832106 159.12597256 156.66993433 155.74636717]

['government', 'election', 'people', 'party', 'minister', 'blair', 'labour', 'country', 'tax', 'plan', 'law', 'lord', 'leader', 'issue', 'time', 'secretary', 'home', 'britain', 'campaign', 'service']

[737.11131292 535.16527677 503.88220861 492.81224241 472.1748853

405.93862213 392.08668008 377.9692403 374.10296897 321.81905251

251.941626 228.98752682 224.14880061 208.55888715 194.76072149

194.1927585 192.50127191 186.69009254 181.17916067 180.81032583]

['people', 'phone', 'technology', 'service', 'game', 'user', 'computer', 'software', 'music', 'firm', 'site', 'time', 'network', 'video', 'mail', 'internet', 'way', 'consumer', 'number', 'virus']

[680.17474713 452.3961128 422.18599053 392.05349486 315.97985885

310.19888871 287.0726163 272.19648631 260.04783894 233.83933227

229.49176761 224.82279539 219.68765596 219.425011 215.04665086

214.76647326 209.25888074 202.79667119 198.7542187 197.672328 ]
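The expression topic.argsort()[: -n_top_words - 1 : -1] used in both loops above returns the indices of the n_top_words largest weights, largest first: argsort sorts ascending, and the negative-step slice walks that order backwards from the end, stopping after n_top_words items. A pure-Python equivalent with toy weights (illustrative only, not taken from the model) shows the same selection without NumPy.

```python
def top_n_indices(weights, n):
    """Indices of the n largest weights, largest first.

    Pure-Python equivalent of NumPy's weights.argsort()[: -n - 1 : -1]
    for weights without ties.
    """
    ascending = sorted(range(len(weights)), key=lambda i: weights[i])
    return ascending[: -n - 1 : -1]

# Toy topic-word weights and vocabulary (illustrative only)
weights = [0.5, 7.2, 3.1, 9.8, 1.0]
vocab = ["bank", "film", "music", "game", "tax"]

idx = top_n_indices(weights, 3)
print(idx)                      # [3, 1, 2]
print([vocab[i] for i in idx])  # ['game', 'film', 'music']
```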

df_test = pd.read_csv('bbc_news_test.csv')

%%time

df_test['Preprocess_text'] = df_test['Text'].apply(preprocess_text)

df_test['Preprocess_text'] = df_test['Preprocess_text'].apply(remove_verbs_and_adjectives)

df_test_tf = tf_vectorizer.transform(df_test['Preprocess_text'])

lda_tf.transform(df_test_tf)

array([[0.128288 , 0.00543088, 0.85561882, 0.00534719, 0.00531511],

[0.00191148, 0.00193182, 0.00193953, 0.28883497, 0.70538221],

[0.00360513, 0.00360238, 0.98555182, 0.00363063, 0.00361004],

...,

[0.11366724, 0.32884101, 0.00273285, 0.32595816, 0.22880073],

[0.52009706, 0.00362464, 0.03958206, 0.24591173, 0.1907845 ],

[0.0339508 , 0.00166348, 0.0016659 , 0.96107025, 0.00164957]])

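What you are seeing above: each row is one test document and each column one of the five topics; the values are the model's estimated proportion of each topic in that document, so every row sums to 1.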
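To turn these rows into a single label per document, take the column with the largest proportion. This sketch uses three of the rows printed above. The topic names are a hypothetical reading of the five top-word lists printed earlier; the model itself only numbers the topics.

```python
# Three rows copied from the lda_tf.transform(df_test_tf) output above
doc_topic = [
    [0.128288, 0.00543088, 0.85561882, 0.00534719, 0.00531511],
    [0.00191148, 0.00193182, 0.00193953, 0.28883497, 0.70538221],
    [0.0339508, 0.00166348, 0.0016659, 0.96107025, 0.00164957],
]

# Hypothetical labels, read off the top-word lists (Topic 1..5)
topic_labels = ["business/film", "music/misc", "sport", "politics", "technology"]

def dominant_topic(row):
    """Index of the topic with the largest proportion in a document."""
    return max(range(len(row)), key=row.__getitem__)

for row in doc_topic:
    best = dominant_topic(row)
    print(f"Topic {best + 1} ({topic_labels[best]}): {row[best]:.2f}")
```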

Tags: Machine Learning, Natural Language Processing, Technology, Data Visualization,

Tuesday, June 28, 2022
