Index For Job Interviews Preparation

Please share 10 MCQs for kNN algorithm.

- Which of the following statements are TRUE about the k-Nearest Neighbors (kNN) algorithm?

a) kNN is a parametric learning algorithm.

b) kNN requires training data to make predictions. (b)

c) kNN learns a decision boundary to classify data points.

d) kNN is computationally expensive for high-dimensional data. (d)

- In kNN, the value of k represents:

a) The number of features used in the model.

b) The number of nearest neighbors considered for prediction. (b)

c) The maximum distance allowed between neighbors.

d) The number of clusters to be formed in the data.

- Which of the following distance metrics is commonly used in kNN for numerical data?

a) Manhattan distance

b) Euclidean distance (b)

c) Cosine similarity

d) All of the above

- kNN is more effective for:

a) Linearly separable data

b) Non-linearly separable data (b)

c) Data with a very high number of dimensions

d) Data with a very small number of samples

- What is a major disadvantage of kNN?

a) It can be sensitive to the choice of the distance metric.

b) It can suffer from the curse of dimensionality.

c) It requires a significant amount of training data for good performance.

d) All of the above (d)

- Which technique can be used to improve the performance of kNN on high-dimensional data?

a) Feature scaling

b) Feature selection (b)

c) Increasing the value of k

d) All of the above

- kNN can be used for:

a) Classification tasks only

b) Regression tasks only

c) Both classification and regression tasks (c)

d) Neither classification nor regression tasks

- kNN is a:

a) Lazy learning algorithm (a)

b) Eager learning algorithm

c) Unsupervised learning algorithm

d) Semi-supervised learning algorithm

- K-Means clustering and kNN share some similarities. How are they different in their approach?

a) K-Means groups unlabeled data points into clusters, while kNN predicts labels from labeled neighbors. (a)

b) K-Means uses labeled data, while kNN does not require labeled data for prediction.

c) K-Means is a parametric algorithm, while kNN is non-parametric.

d) All of the above

- When evaluating the performance of a kNN model, which metric is most appropriate?

a) Accuracy (for classification)

b) Precision and Recall (for classification)

c) F1-score (for classification)

d) All of the above (depending on the specific problem) (d)

Please share 10 scenario based complex questions for kNN

10 Scenario-Based Complex Questions for kNN Algorithm:

- Image Classification with Imbalanced Classes: You're building a kNN model to classify images into different types of animals (cats, dogs, birds). However, the dataset is imbalanced, with significantly more cat images than bird images. How can you adjust the kNN algorithm or data pre-processing to handle this imbalance and ensure fair classification performance across all classes?

- Real-Time Fraud Detection: Imagine a system that monitors financial transactions for fraudulent activity using kNN. New transactions arrive continuously. How can you adapt kNN for real-time processing while maintaining accuracy? Consider aspects like efficiency, handling concept drift (changes in fraud patterns), and incorporating new data points effectively.

- Missing Value Imputation with kNN: You're using kNN for customer segmentation, but some customer data points have missing values for income and age. How can you modify kNN to handle missing values and still achieve effective customer group classification?

- High-Dimensional Text Data Clustering: You want to cluster news articles by topic using kNN. Text data can be high-dimensional due to a large vocabulary. What pre-processing techniques can you apply to make kNN work efficiently for text clustering? How can you choose an appropriate distance metric for this scenario?

- Combining kNN with Feature Engineering: You're building a kNN model to predict stock prices. While raw historical prices can be used as features, could feature engineering techniques like calculating moving averages or volatility indicators improve the performance of kNN for stock price prediction? Why or why not?

- kNN for Anomaly Detection: Anomaly detection involves identifying data points that deviate significantly from the norm. How can you adapt kNN to identify anomalies in sensor data streams, considering factors like real-time processing and flagging potential outliers effectively?

- Choosing k for Imbalanced Time Series Data: You're using kNN to predict short-term stock price movements using historical time series data. The data might have seasonal trends and imbalanced class distribution (more upward movements than downward). How can you determine the optimal value of k for kNN in this time series forecasting scenario?

-

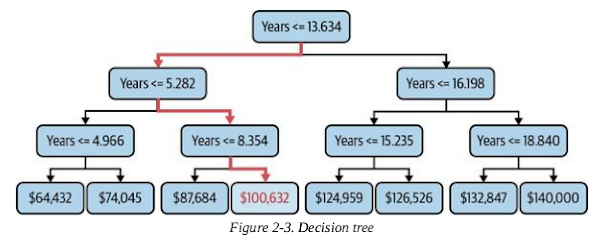

kNN vs. Decision Trees for Credit Risk Assessment: A bank is considering kNN and decision trees for credit risk assessment. Both can handle numerical and categorical data. What are some factors to consider when choosing between kNN and decision trees for this specific application?

- Explainability and Interpretability of kNN Models: kNN models can be less interpretable compared to some other algorithms. How can you explain the predictions made by a kNN model for loan approval or customer churn prediction? What techniques can be used to gain insights into the factors influencing kNN's decisions?

- kNN for Imbalanced Recommender Systems: A recommender system uses kNN to suggest products to users based on their past purchases. However, user purchase history might be sparse, leading to an imbalanced dataset where some products are purchased much more frequently than others. How can you address this class imbalance and ensure the recommender system suggests relevant products for all users, even those with limited purchase history?

These scenario-based questions encourage you to think critically about applying kNN in various situations, considering data characteristics, limitations, and potential adjustments for optimal performance.

Solve this:

Image Classification with Imbalanced Classes: You're building a kNN model to classify images into different types of animals (cats, dogs, birds). However, the dataset is imbalanced, with significantly more cat images than bird images. How can you adjust the kNN algorithm or data pre-processing to handle this imbalance and ensure fair classification performance across all classes?

Here are some strategies to address imbalanced classes in image classification with kNN:

Data Pre-processing Techniques:

- Oversampling: Replicate images from the minority class (birds) to create a more balanced dataset. Techniques like SMOTE (Synthetic Minority Over-sampling Technique) generate synthetic minority samples by interpolating between existing minority-class feature vectors. For raw images, augmentation (flips, crops, small rotations) is the more usual way to create realistic variations.

- Undersampling: Randomly remove images from the majority class (cats) to match the size of the minority class. This reduces training data but might lead to information loss.

- Cost-Sensitive Learning: Assign a higher misclassification cost to the minority class. kNN has no training loss to reweight, so the cost is applied at prediction time, for example by weighting neighbor votes or by choosing the label with the lowest expected cost. This penalizes mistakes on birds more heavily than mistakes on cats.
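The resampling options above can be sketched with scikit-learn's resample helper. This is a minimal illustration that assumes feature vectors have already been extracted from the images; the arrays, class sizes, and labels are synthetic stand-ins. SMOTE itself lives in the separate imbalanced-learn package and is not shown.

```python
import numpy as np
from sklearn.utils import resample

rng = np.random.default_rng(0)
# Hypothetical feature vectors: 90 "cat" rows (label 0) and 10 "bird" rows (label 1).
X = rng.normal(size=(100, 8))
y = np.array([0] * 90 + [1] * 10)

X_major, X_minor = X[y == 0], X[y == 1]

# Oversampling: draw minority rows with replacement until both classes match.
X_minor_up = resample(X_minor, replace=True, n_samples=len(X_major), random_state=0)
X_over = np.vstack([X_major, X_minor_up])
y_over = np.array([0] * len(X_major) + [1] * len(X_minor_up))

# Undersampling: keep a random subset of the majority class instead.
X_major_down = resample(X_major, replace=False, n_samples=len(X_minor), random_state=0)
X_under = np.vstack([X_major_down, X_minor])
y_under = np.array([0] * len(X_major_down) + [1] * len(X_minor))

print(np.bincount(y_over), np.bincount(y_under))
```

Resample only the training split. If duplicated minority rows leak into the test set, the evaluation will look better than it really is.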

kNN Algorithm Adjustments:

- k-Nearest Neighbors Weighted by Class: Assign weights to neighboring data points based on their class labels. Neighbors from the minority class (birds) can be given higher weights during voting, increasing their influence on the final classification.

- Distance Metrics for Imbalanced Data: Consider a distance metric that accounts for feature scale and correlation, such as the Mahalanobis distance. It uses the covariance of the features, so a single high-variance feature does not dominate the neighbor search. It does not rebalance the classes by itself, so it works best combined with resampling or weighted voting.

Additional Considerations:

- Hybrid Approach: Combine data pre-processing techniques like oversampling with kNN modifications like weighted voting for a more robust solution.

- Evaluation Metrics: For imbalanced datasets, relying solely on accuracy might be misleading. Consider using metrics like F1-score, which considers both precision and recall, to evaluate the model's performance on the minority class (birds).
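A small sketch of why accuracy alone misleads on imbalanced data. The labels are hypothetical, and the "model" simply predicts the majority class every time.

```python
from sklearn.metrics import accuracy_score, f1_score, recall_score

# Hypothetical labels: 0 = cat (majority), 1 = bird (minority).
y_true = [0] * 18 + [1] * 2
y_pred = [0] * 20  # a model that always predicts "cat"

acc = accuracy_score(y_true, y_pred)
# Recall on the minority class: how many birds were actually found.
bird_recall = recall_score(y_true, y_pred, pos_label=1, zero_division=0)
# Macro F1 averages the per-class F1 scores, so each class counts equally.
macro_f1 = f1_score(y_true, y_pred, average="macro", zero_division=0)

print(f"accuracy={acc:.2f} bird_recall={bird_recall:.2f} macro_f1={macro_f1:.2f}")
```

Accuracy is 0.90 here even though not a single bird is recognized; the minority-class recall and the macro-averaged F1 expose the problem.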

By implementing these strategies, you can mitigate the impact of imbalanced classes and ensure your kNN model performs well in classifying all animal categories (cats, dogs, and birds) with reasonable accuracy, even for the under-represented bird class.

Can you share code to demonstrate "Distance Metrics for Imbalanced Data" such as Mahalanobis distance for imbalanced dataset?

Here's an example in Python showing how to use the Mahalanobis distance as the kNN metric on an imbalanced dataset (assuming the scikit-learn library). The data is a synthetic 90/10 split generated for illustration:

    import numpy as np
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier


    class MahalanobisKNN(KNeighborsClassifier):
        """kNN classifier that measures neighbor distance with the Mahalanobis metric."""

        def __init__(self, n_neighbors=5):
            # The Mahalanobis metric needs brute-force search plus an inverse covariance (VI).
            super().__init__(n_neighbors=n_neighbors, algorithm="brute", metric="mahalanobis")

        def fit(self, X, y):
            X = np.asarray(X, dtype=float)
            # Covariance of all training features; pinv tolerates a singular matrix.
            cov = np.atleast_2d(np.cov(X, rowvar=False))
            self.metric_params = {"VI": np.linalg.pinv(cov)}
            return super().fit(X, y)


    # Synthetic imbalanced data (about 90% class 0, 10% class 1), for illustration only.
    X, y = make_classification(n_samples=500, n_features=6, n_informative=4,
                               n_redundant=0, weights=[0.9, 0.1], random_state=0)
    X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

    knn_mahalanobis = MahalanobisKNN(n_neighbors=5)
    knn_mahalanobis.fit(X_train, y_train)
    y_pred = knn_mahalanobis.predict(X_test)

Explanation:

- This code defines a custom MahalanobisKNN class that inherits from the KNeighborsClassifier class in scikit-learn.

- It overrides fit to estimate the covariance matrix of the training features with np.cov and to pass its inverse to scikit-learn's built-in 'mahalanobis' metric through metric_params (the VI entry).

- predict is inherited unchanged: for each test point it finds the k training points with the smallest Mahalanobis distance and takes a majority vote among them, as in standard kNN.

- The Mahalanobis distance rescales and decorrelates the features, so a feature with a large range, or one that is strongly correlated with another, does not dominate the neighbor search.

Note:

- A covariance matrix estimated from few samples or from redundant features can be singular. The code uses the pseudo-inverse (np.linalg.pinv) for that reason; adding a small value to the diagonal before inverting is a common alternative.

- The metric alone does not rebalance the classes, so it is usually combined with resampling or weighted voting.

- Mahalanobis distance calculation can be computationally expensive for high-dimensional data. Consider dimensionality reduction techniques if needed.

Can you share code example to demonstrate "k-Nearest Neighbors Weighted by Class"?

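Here's an example in Python demonstrating k-Nearest Neighbors (kNN) with class-weighted voting for imbalanced data classification. The data is synthetic and only for illustration:

    import numpy as np
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.utils.class_weight import compute_class_weight


    class WeightedKNN(KNeighborsClassifier):
        def __init__(self, n_neighbors=5, metric="euclidean"):
            super().__init__(n_neighbors=n_neighbors, metric=metric)

        def fit(self, X, y):
            y = np.asarray(y)
            super().fit(X, y)
            # One weight per class, ordered like self.classes_; rarer classes get larger weights.
            self.class_weights_ = compute_class_weight(
                class_weight="balanced", classes=self.classes_, y=y)
            self.y_idx_ = np.searchsorted(self.classes_, y)  # label -> position in classes_
            return self

        def predict(self, X):
            neighbor_idx = self.kneighbors(X, return_distance=False)  # shape (n_samples, k)
            neighbor_cls = self.y_idx_[neighbor_idx]
            # Each neighbor votes for its class with that class's weight.
            votes = np.stack([w * (neighbor_cls == c).sum(axis=1)
                              for c, w in enumerate(self.class_weights_)], axis=1)
            return self.classes_[votes.argmax(axis=1)]


    # Synthetic imbalanced data (about 90% class 0, 10% class 1), for illustration only.
    X, y = make_classification(n_samples=500, weights=[0.9, 0.1], random_state=0)
    X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

    knn_weighted = WeightedKNN(n_neighbors=5)
    knn_weighted.fit(X_train, y_train)
    y_pred = knn_weighted.predict(X_test)

Explanation:

- This code defines a custom WeightedKNN class that inherits from the KNeighborsClassifier class in scikit-learn.

- It overrides fit to compute class weights and predict to apply them during voting. KNeighborsClassifier.fit does not accept a sample_weight argument, so the weights cannot be passed at fit time.

- The code uses the compute_class_weight function from sklearn.utils.class_weight to calculate balanced class weights from the class distribution in the training labels (y). This assigns higher weights to under-represented classes.

- During predict, each of the k nearest neighbors votes for its own class, and the vote is multiplied by that class's weight. The class with the largest weighted total wins.

Benefits:

- A few minority-class neighbors can outvote a larger number of majority-class neighbors, which can improve recall on the minority class in imbalanced datasets, usually at the cost of more false positives.

Note:

- Choosing the appropriate weighting scheme can impact the model's behavior. compute_class_weight accepts 'balanced', a dictionary of explicit per-class weights, or None; experiment with explicit weights if 'balanced' over- or under-corrects on your dataset.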

Can you share code for "Cost-Sensitive Learning" for kNN?

Here's an example in Python demonstrating cost-sensitive prediction with kNN. The data is synthetic and the cost values are illustrative:

    import numpy as np
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier


    class CostSensitiveKNN(KNeighborsClassifier):
        def __init__(self, n_neighbors=5, metric="euclidean", cost_matrix=None):
            super().__init__(n_neighbors=n_neighbors, metric=metric)
            self.cost_matrix = cost_matrix

        def set_cost_matrix(self, cost_matrix):
            self.cost_matrix = cost_matrix

        def fit(self, X, y):
            y = np.asarray(y)
            super().fit(X, y)
            self.y_idx_ = np.searchsorted(self.classes_, y)  # label -> position in classes_
            return self

        def predict(self, X):
            neighbor_idx = self.kneighbors(X, return_distance=False)  # shape (n_samples, k)
            neighbor_cls = self.y_idx_[neighbor_idx]
            # counts[i, c] = number of neighbors of sample i that belong to class c
            counts = np.stack([(neighbor_cls == c).sum(axis=1)
                               for c in range(len(self.classes_))], axis=1)
            # expected_cost[i, j] = sum over c of counts[i, c] * cost_matrix[c, j]
            expected_cost = counts @ np.asarray(self.cost_matrix)
            return self.classes_[expected_cost.argmin(axis=1)]


    # Synthetic imbalanced data (about 90% class 0, 10% class 1), for illustration only.
    X, y = make_classification(n_samples=500, weights=[0.9, 0.1], random_state=0)
    X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

    # cost_matrix[true, predicted]: missing a class-1 sample costs 10, a false alarm costs 5.
    cost_matrix = np.array([[0, 5],
                            [10, 0]])

    knn_cost_sensitive = CostSensitiveKNN(n_neighbors=5)
    knn_cost_sensitive.set_cost_matrix(cost_matrix)
    knn_cost_sensitive.fit(X_train, y_train)
    y_pred = knn_cost_sensitive.predict(X_test)

Explanation:

- This code defines a custom CostSensitiveKNN class that inherits from the KNeighborsClassifier class in scikit-learn.

- It overrides the predict method to incorporate cost-sensitive prediction.

- The class takes a cost_matrix at initialization or through set_cost_matrix. Entry [i, j] is the cost of predicting class j when the true class is i, with rows and columns ordered like classes_.

- During predict, the code retrieves the k-nearest neighbors for each data point in the test data using the base KNN functionality.

- It counts how many of those neighbors belong to each class.

- It treats the neighbor counts as an estimate of the true class and computes the total cost of predicting each possible label by multiplying the counts with the cost matrix.

- Finally, it predicts the class label with the minimum total cost.

Benefits:

- This approach prioritizes minimizing the overall cost of misclassification based on the provided cost matrix.

Note:

- Defining an appropriate cost matrix depends on the specific problem and the relative importance of different misclassification types.

- The cost matrix must be square (one row and one column per class) but does not need to be symmetric. In the example, missing a class-1 sample costs twice as much as a false alarm, so two class-1 neighbors out of five are enough to predict class 1.

Solve this:

Real-Time Fraud Detection: Imagine a system that monitors financial transactions for fraudulent activity using kNN. New transactions arrive continuously. How can you adapt kNN for real-time processing while maintaining accuracy? Consider aspects like efficiency, handling concept drift (changes in fraud patterns), and incorporating new data points effectively.

Here are some strategies to adapt kNN for real-time fraud detection in a constantly evolving financial transaction stream:

Efficiency for Real-Time Processing:

- Limited Neighborhood Search: Instead of searching through the entire dataset for neighbors, consider techniques like ball trees or kd-trees for efficient neighbor search. These data structures allow faster identification of the k-nearest neighbors for a new transaction.

- Approximate Nearest Neighbors (ANN): Utilize approximate nearest neighbor algorithms to find "good enough" neighbors instead of the exact ones. This can significantly reduce processing time while maintaining acceptable accuracy for fraud detection.

- Reduce Feature Dimensionality: Analyze the transaction data and identify the most relevant features for fraud detection. By using a smaller feature set, kNN calculations become faster. Feature selection techniques like LASSO regression or chi-square tests can be helpful.
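A minimal sketch of tree-based neighbor search in scikit-learn, compared against brute-force search. The transaction features and the "fraud" labeling rule are synthetic assumptions made up for the example.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(0)
X = rng.normal(size=(5000, 6))               # hypothetical transaction features
y = (X[:, 0] + X[:, 1] > 2.0).astype(int)    # hypothetical "fraud" label

# Brute force compares every query against every stored transaction.
brute = KNeighborsClassifier(n_neighbors=5, algorithm="brute").fit(X, y)
# A ball tree prunes most of the stored points for each query.
tree = KNeighborsClassifier(n_neighbors=5, algorithm="ball_tree", leaf_size=40).fit(X, y)

X_new = rng.normal(size=(10, 6))
brute_pred = brute.predict(X_new)
tree_pred = tree.predict(X_new)
print((brute_pred == tree_pred).mean())
```

Both searches are exact, so the predictions should agree; the tree only changes how fast the neighbors are found. Tree indexes tend to lose their advantage as the number of features grows, which is one more reason to keep the feature set small.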

Handling Concept Drift (Changes in Fraud Patterns):

- Sliding Window Approach: Maintain a window of recent transactions used for kNN classification. As new transactions arrive, the oldest ones are discarded from the window, keeping the model focused on the most recent fraud patterns.

- Adaptive k Value: Implement a mechanism to adjust the k value dynamically based on the confidence of predictions. For highly suspicious transactions, a smaller k value (focusing on the closest neighbors) might be appropriate. Conversely, for less suspicious cases, a larger k value can be used for broader context.

- Online Learning Algorithms: Explore online learning algorithms like incremental kNN or online variants of decision trees that can update the model continuously with new data points, adapting to evolving fraud patterns.
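The sliding-window idea can be sketched as follows. SlidingWindowKNN is a hypothetical helper written for this example, not a scikit-learn class, and the labeling rule is synthetic.

```python
from collections import deque

import numpy as np
from sklearn.neighbors import KNeighborsClassifier


class SlidingWindowKNN:
    """kNN over the most recent `window_size` labeled samples (hypothetical helper)."""

    def __init__(self, n_neighbors=5, window_size=1000):
        self.n_neighbors = n_neighbors
        self.window = deque(maxlen=window_size)  # oldest samples fall off automatically

    def add(self, x, label):
        self.window.append((np.asarray(x, dtype=float), label))

    def predict(self, x):
        X = np.array([features for features, _ in self.window])
        y = np.array([label for _, label in self.window])
        k = min(self.n_neighbors, len(self.window))
        # Refit on the current window; for kNN this only rebuilds the search index.
        model = KNeighborsClassifier(n_neighbors=k).fit(X, y)
        return model.predict(np.asarray(x, dtype=float).reshape(1, -1))[0]


rng = np.random.default_rng(0)
detector = SlidingWindowKNN(n_neighbors=3, window_size=50)
for _ in range(200):
    x = rng.normal(size=4)
    detector.add(x, int(x[0] > 1.0))  # hypothetical labeling rule

pred = detector.predict([3.0, 0.0, 0.0, 0.0])
print(len(detector.window), pred)
```

Refitting on every prediction keeps the sketch short but is wasteful at high throughput. A production system would more likely rebuild the index every N transactions or on a timer.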

Incorporating New Data Points Effectively:

- Online Updates: Implement a strategy to update the model with new transaction data points without retraining the entire kNN model from scratch. This could involve techniques like adding new data points to the existing data structure (e.g., ball tree) or updating the distance metric to account for the changing data distribution.

- Periodic Retraining: Schedule periodic retraining of the kNN model at regular intervals or when significant changes in fraud patterns are detected. This ensures the model stays current with the evolving landscape of fraudulent activities.

Additional Considerations:

- Cost-Sensitive Learning: Consider incorporating cost-sensitive learning into kNN. This approach assigns a higher cost to missing a fraudulent transaction than to raising a false alarm, since an undetected fraud is usually more expensive than an unnecessary review.

- Alerting System: Design an alerting system based on the kNN prediction confidence score. Transactions exceeding a certain confidence threshold for fraud can be flagged for further investigation.
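A sketch of threshold-based alerting on top of kNN class probabilities. The data, the labeling rule, and the ALERT_THRESHOLD value are all assumptions chosen for illustration.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 4))        # hypothetical transaction features
y = (X[:, 0] > 1.2).astype(int)      # hypothetical label: 1 = fraud

# Distance weighting lets closer neighbors count more in the probability estimate.
model = KNeighborsClassifier(n_neighbors=10, weights="distance").fit(X, y)

ALERT_THRESHOLD = 0.3  # hypothetical; below 0.5 because a missed fraud costs more
X_new = rng.normal(size=(20, 4))
fraud_col = list(model.classes_).index(1)
fraud_score = model.predict_proba(X_new)[:, fraud_col]

# Indices of incoming transactions that should be sent for review.
alerts = np.flatnonzero(fraud_score >= ALERT_THRESHOLD)
print(alerts)
```

Lowering the threshold trades more false alarms for fewer missed frauds, which is a simple way to apply the cost-sensitive idea without changing the classifier.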

By implementing these strategies, you can adapt kNN for real-time fraud detection in a way that balances efficiency, adaptability, and accuracy in the face of constantly changing fraudulent activities.

Solve this:

Missing Value Imputation with kNN: You're using kNN for customer segmentation, but some customer data points have missing values for income and age. How can you modify kNN to handle missing values and still achieve effective customer group classification?

Here are some strategies to modify kNN for handling missing values in customer segmentation:

Preprocessing Techniques:

- Mean/Median Imputation: Replace missing values with the mean or median of the corresponding feature for all customers (not recommended as it might distort the data distribution).

- Mode Imputation: Replace missing values with the most frequent value for that feature (can be useful for categorical features but might not be suitable for numerical features like income).

- KNN Imputation: Utilize kNN itself for imputation! Find the k-nearest neighbors for a customer with missing values based on available features. Use the corresponding values of those neighbors (income and age) to impute the missing values for that customer.
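kNN imputation is available in scikit-learn as KNNImputer. The sketch below uses a tiny made-up customer table; the column meanings are assumptions for the example.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Hypothetical customers: columns are [income (k$), age, yearly purchases].
X = np.array([
    [50.0, 30.0, 12.0],
    [52.0, np.nan, 11.0],
    [np.nan, 45.0, 3.0],
    [90.0, 47.0, 4.0],
    [48.0, 29.0, 13.0],
])

# Each missing entry becomes the mean of that feature over the 2 nearest
# customers, with nearness measured on the features both customers have.
imputer = KNNImputer(n_neighbors=2, weights="uniform")
X_filled = imputer.fit_transform(X)
print(X_filled)
```

Because the neighbor search is distance-based, features on very different scales (income versus age) should normally be standardized before imputation; that step is left out here to keep the example short.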

kNN Algorithm Modifications:

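- Distance Metrics for Missing Values: Standard metrics such as Euclidean, Manhattan, or Minkowski distance are undefined when a coordinate is missing, so use a variant that skips missing coordinates:

- NaN-aware Euclidean Distance: Compute the distance over the features observed in both points and scale the result up by the fraction of features that were usable. scikit-learn provides this as the nan_euclidean metric.

- Partial-Distance Manhattan or Minkowski: Apply the same idea with a different p. Manhattan distance (p = 1) is less sensitive to outliers than Euclidean distance (p = 2).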

- Weighted kNN: Assign lower weights to features with missing values during distance calculations. This reduces the influence of missing features on finding the nearest neighbors.

Choosing the Right Approach:

- The best approach depends on the amount of missing data, its distribution across features, and the overall data characteristics.

- KNN imputation can be computationally expensive, especially for large datasets. Consider the trade-off between imputation accuracy and processing time.

Here's an additional tip:

- Impute Missing Values Before Applying kNN: It's generally recommended to impute missing values before feeding the data into the kNN model for classification. This ensures consistent data representation for all customers during neighbor search and prediction.

By using these strategies, you can address missing values in your customer data and leverage kNN for effective customer segmentation. You can experiment with different imputation techniques and distance metrics to find the best fit for your specific dataset.

Demo for this:

Weighted kNN: Assign lower weights to features with missing values during distance calculations. This reduces the influence of missing features on finding the nearest neighbors.

Here's a basic code example demonstrating weighted kNN for distance calculation with missing values (using Python). scikit-learn's KNeighborsClassifier rejects NaN inputs for custom metrics, so the example uses a small standalone classifier:

    from collections import Counter

    import numpy as np


    def weighted_knn_distance(x1, x2, weights):
        """
        Weighted Euclidean distance that ignores features missing in either point.

        Args:
            x1: First data point (1-D array).
            x2: Second data point (1-D array).
            weights: Per-feature weights (1-D array).

        Returns:
            Weighted distance between x1 and x2 (inf if they share no observed feature).
        """
        observed = ~(np.isnan(x1) | np.isnan(x2))
        if not observed.any():
            return np.inf
        w = weights[observed]
        diff = x1[observed] - x2[observed]
        # Rescale by the share of weight that was usable, so points with many
        # missing features do not look artificially close.
        return np.sqrt(weights.sum() / w.sum() * np.sum(w * diff ** 2))


    class WeightedKNN:
        """Minimal kNN classifier built on weighted_knn_distance."""

        def __init__(self, n_neighbors, feature_weights=None):
            self.n_neighbors = n_neighbors
            self.feature_weights = feature_weights

        def fit(self, X, y):
            self.X_ = np.asarray(X, dtype=float)
            self.y_ = np.asarray(y)
            return self

        def predict(self, X):
            X = np.asarray(X, dtype=float)
            weights = (np.ones(X.shape[1]) if self.feature_weights is None
                       else np.asarray(self.feature_weights, dtype=float))
            predictions = []
            for x in X:
                dists = np.array([weighted_knn_distance(x, t, weights) for t in self.X_])
                nearest = np.argsort(dists)[:self.n_neighbors]
                # Majority vote among the nearest training labels.
                predictions.append(Counter(self.y_[nearest].tolist()).most_common(1)[0][0])
            return np.array(predictions)


    X_train = np.array([[1, 2, np.nan], [3, 4, 5], [np.nan, 6, 7]])
    X_test = np.array([[2, 3, np.nan]])
    y_train = np.array([0, 1, 2])

    knn_weighted = WeightedKNN(n_neighbors=2)
    knn_weighted.fit(X_train, y_train)
    y_pred = knn_weighted.predict(X_test)
    print(y_pred)

Explanation:

- This code defines a custom weighted_knn_distance function that calculates the distance between two data points considering missing values.

- The function gives an effective weight of 0 to any feature that is missing (np.nan) in either data point by excluding it from the sum. Multiplying a NaN difference by a zero weight would still produce NaN, which is why the features are masked out instead.

- The distance is rescaled by the share of weight that was actually used, so a pair of points that shares only one observed feature is not treated as closer than a pair that shares all of them.

- A small WeightedKNN class stores the training data in fit and, in predict, computes the distance from each test point to every training point, picks the k nearest, and takes a majority vote.

- The example usage demonstrates fitting the model and predicting a class label for a data point with a missing value.

Note:

- This example uses a simple approach for handling missing values. Real-world applications might involve more sophisticated imputation techniques before applying weighted kNN.

- With uniform feature weights this is the same idea as scikit-learn's built-in nan_euclidean metric, which KNeighborsClassifier accepts through its metric parameter.

- The brute-force loop compares every test point with every training point, so it is only suitable for small datasets.

Solve this:

High-Dimensional Text Data Clustering: You want to cluster news articles by topic using kNN. Text data can be high-dimensional due to a large vocabulary. What pre-processing techniques can you apply to make kNN work efficiently for text clustering? How can you choose an appropriate distance metric for this scenario?

High-Dimensional Text Data Clustering with kNN

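kNN itself is a supervised method, so grouping articles with it means either classifying them against a labeled set of topics or using nearest-neighbor search to build a similarity graph that a clustering algorithm then partitions. Either way, here's how you can make the neighbor search work efficiently on high-dimensional text data like news articles: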

Pre-processing Techniques:

- Text Cleaning: Remove stop words (common words like "the", "a", "an") and punctuation marks. These words don't contribute much to the meaning of the document and can increase dimensionality unnecessarily.

- Stemming or Lemmatization: Reduce words to their root form (e.g., "running" becomes "run"). This helps group similar words together and reduces dimensionality.

- Feature Engineering:

- TF-IDF (Term Frequency-Inverse Document Frequency): This technique assigns weights to words based on their frequency in a document and rarity across the entire corpus. Words that are frequent in a document but rare overall are more informative for topic identification. This reduces the impact of very common words and emphasizes words specific to the topic.

- N-grams: Create features by considering sequences of words (bigrams, trigrams). This can capture phrases and short expressions that might be indicative of specific topics. However, be cautious of the curse of dimensionality with higher n-grams.

Choosing an Appropriate Distance Metric:

Since traditional distance metrics like Euclidean distance might not be suitable for high-dimensional text data, consider these alternatives:

- Cosine Similarity: This metric measures the angle between two document vectors. Documents with similar topics will have high cosine similarity. It's less sensitive to the magnitude of the vectors and focuses on the directional relationship, which is more relevant for textual data.

- Jaccard Similarity: This metric calculates the ratio of the number of shared words between two documents to the total number of unique words in both documents. It's a good choice when the order of words is not important and captures the presence or absence of specific terms.

- Levenshtein Distance: This metric counts the minimum number of character edits (insertions, deletions, substitutions) required to transform one string into another. It helps with typos in short strings such as headlines or entity names, but it is expensive on full articles and does not measure topical similarity, so it is rarely the primary metric for topic grouping.
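A short sketch of nearest-neighbor search with cosine distance on TF-IDF vectors. The four headlines are invented; two are about monetary policy and two about sport.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.neighbors import NearestNeighbors

docs = [
    "Central bank raises interest rates to curb inflation",
    "Inflation slows as interest rates stay high",
    "Local team wins the championship final",
    "Star striker scores twice in championship match",
]
X = TfidfVectorizer(stop_words="english").fit_transform(docs)

# Cosine distance = 1 - cosine similarity; brute force works directly on sparse input.
nn = NearestNeighbors(n_neighbors=2, metric="cosine", algorithm="brute").fit(X)
distances, indices = nn.kneighbors(X)

# Column 0 is each document itself; column 1 is its closest other document.
for i, j in enumerate(indices[:, 1]):
    print(f"{docs[i]!r} -> {docs[j]!r}")
```

Each headline's nearest neighbor is the other headline on the same topic, because only those pairs share any non-stop-word terms.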

Additional Considerations:

- Dimensionality Reduction Techniques: Techniques like Principal Component Analysis (PCA) or, for sparse TF-IDF matrices, truncated SVD (latent semantic analysis) can further reduce the dimensionality of the data while preserving most of the relevant information. This can significantly improve the efficiency of kNN for high-dimensional text data.

- Choice of k: Experiment with different values of k (number of nearest neighbors) to find the optimal setting for your specific dataset and desired cluster granularity.

By applying these techniques, you can make kNN a viable approach for clustering news articles by topic even when dealing with high-dimensional text data.

Solve this:

Combining kNN with Feature Engineering: You're building a kNN model to predict stock prices. While raw historical prices can be used as features, could feature engineering techniques like calculating moving averages or volatility indicators improve the performance of kNN for stock price prediction? Why or why not?

Combining kNN with Feature Engineering for Stock Price Prediction

Yes, feature engineering techniques like calculating moving averages or volatility indicators can significantly improve the performance of kNN for stock price prediction. Here's why:

Limitations of Raw Prices:

- High Dimensionality: Using raw historical closing prices for a long period results in a high-dimensional feature space. This can be inefficient for kNN, making it computationally expensive and potentially leading to the "curse of dimensionality" where distances between data points become meaningless.

- Limited Information: Raw closing prices only capture a single aspect of the market at a specific point. They don't directly reflect underlying trends, momentum, or volatility.

Benefits of Feature Engineering:

- Reduced Dimensionality: Calculating technical indicators like moving averages or volatility indicators from raw prices creates a more concise feature set. This reduces the computational cost of kNN and potentially improves its efficiency.

- Extracted Insights: Technical indicators summarize past price movements and market sentiment. Moving averages capture trends, volatility indicators like Bollinger Bands measure how widely prices swing, and momentum indicators like the Relative Strength Index (RSI) hint at overbought or oversold conditions and potential turning points. By incorporating these features, kNN can learn from historical patterns and make more informed predictions.

Here are some specific examples of feature engineering techniques for stock price prediction with kNN:

- Moving Averages: Simple Moving Average (SMA), Exponential Moving Average (EMA), etc., can indicate trends and potential support/resistance levels.

- Volatility Indicators: Bollinger Bands and Average True Range (ATR) provide insights into market volatility and potential price swings.

- Momentum Indicators: RSI, Moving Average Convergence Divergence (MACD), Stochastic Oscillator, etc., can capture the strength and direction of price movements.

- Volume-based Features: Trading volume alongside price data can offer additional insights into market sentiment and potential breakouts.
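A few of these features can be computed with pandas as sketched below. The price series is a synthetic random walk, not real market data, and the window lengths are arbitrary choices for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
# Hypothetical daily closing prices (random walk around 100).
close = pd.Series(100 + rng.normal(0, 1, size=120).cumsum(), name="close")

features = pd.DataFrame({
    "sma_10": close.rolling(10).mean(),                     # simple moving average
    "ema_10": close.ewm(span=10, adjust=False).mean(),      # exponential moving average
    "volatility_10": close.pct_change().rolling(10).std(),  # rolling std of daily returns
    "momentum_5": close.diff(5),                            # 5-day price change
}).dropna()  # the first rows lack enough history for the rolling windows

print(features.shape)
print(features.tail(3))
```

Since kNN is distance-based, these columns should be standardized (for example with StandardScaler) before the neighbor search, otherwise the price-level features dominate the much smaller volatility values.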

Overall Impact:

By incorporating these features, you provide kNN with a richer set of information that goes beyond just historical closing prices. This allows the model to identify patterns and relationships between the features and future price movements, potentially leading to more accurate predictions.

Additional Considerations:

- Not all features might be equally informative. Experiment with different combinations of technical indicators to find the optimal feature set for your specific dataset and prediction goals.

- Feature engineering is not a magic bullet. The quality and relevance of the chosen features ultimately determine the effectiveness of kNN for stock price prediction.

- Remember that stock prices are inherently unpredictable, and kNN predictions should be used with caution, ideally combined with other analysis techniques for informed decision-making.

Solve this:

kNN for Anomaly Detection: Anomaly detection involves identifying data points that deviate significantly from the norm. How can you adapt kNN to identify anomalies in sensor data streams, considering factors like real-time processing and flagging potential outliers effectively?

Adapting kNN for Anomaly Detection in Real-Time Sensor Data Streams

Here's how you can adapt kNN for anomaly detection in real-time sensor data streams:

Efficiency for Real-Time Processing:

- Limited Neighborhood Search: Utilize techniques like ball trees or kd-trees for efficient neighbor identification. These data structures enable faster retrieval of the k-nearest neighbors for a new sensor data point.

- Approximate Nearest Neighbors (ANN): Consider using approximate nearest neighbor algorithms to find "good enough" neighbors instead of the exact ones. This can significantly reduce processing time for real-time anomaly detection.

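- Reduce Feature Dimensionality: Analyze the sensor data and keep only the features most relevant to anomaly detection. A smaller feature set speeds up every distance calculation. If labeled anomalies are available, supervised selection methods like LASSO regression or chi-square tests can be helpful; for unlabeled streams, unsupervised options such as variance thresholds or PCA are a better fit.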
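To make the tree-based neighbor search concrete, here is a small sketch using scikit-learn's `BallTree`. The synthetic `normal_readings` array and the helper name `knn_distances` are assumptions for illustration; real sensor features would replace the random data.

```python
import numpy as np
from sklearn.neighbors import BallTree

rng = np.random.default_rng(0)
# Hypothetical history of "normal" sensor readings with 3 features each.
normal_readings = rng.normal(size=(500, 3))

# Build the tree once; queries afterwards avoid scanning every stored point.
tree = BallTree(normal_readings, leaf_size=40)

def knn_distances(tree, points, k=5):
    """Return (distances, indices) of the k nearest stored readings per point."""
    points = np.atleast_2d(np.asarray(points, dtype=float))
    dist, idx = tree.query(points, k=k)
    return dist, idx

# One reading near the bulk of the data, one far away from it.
dist, idx = knn_distances(tree, [[0.0, 0.0, 0.0], [8.0, 8.0, 8.0]])
```

The far-away reading ends up with much larger neighbor distances, which is the signal the thresholding step relies on.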

Identifying Anomalies:

- Distance Thresholding: Define a distance threshold based on the distribution of nearest neighbor distances for normal data points. Sensor readings exceeding this threshold are considered potential anomalies. This threshold can be dynamically adjusted based on historical data or statistical analysis.

- Density-Based Clustering (DBSCAN): Run DBSCAN (Density-Based Spatial Clustering of Applications with Noise) alongside kNN. DBSCAN groups dense regions of similar sensor readings into clusters and labels points that belong to no dense region as noise, which makes them candidate anomalies. This approach complements kNN distance thresholding by considering the overall data density.
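The distance-thresholding idea can be sketched with plain NumPy. This is a minimal version under stated assumptions: the anomaly score is the mean distance to the k nearest normal points, the threshold is a percentile of those scores on normal data, and brute-force search is used instead of a tree for brevity. All function names are hypothetical.

```python
import numpy as np

def knn_scores(reference, queries, k=5, exclude_self=False):
    """Mean Euclidean distance from each query to its k nearest reference points."""
    reference = np.asarray(reference, dtype=float)
    queries = np.asarray(queries, dtype=float)
    # Pairwise distances, shape (n_queries, n_reference).
    d = np.linalg.norm(queries[:, None, :] - reference[None, :, :], axis=2)
    d.sort(axis=1)
    # When scoring the reference set against itself, skip the zero self-distance.
    start = 1 if exclude_self else 0
    return d[:, start:start + k].mean(axis=1)

def fit_threshold(normal_data, k=5, percentile=99.0):
    """Threshold = chosen percentile of kNN scores among the normal points."""
    scores = knn_scores(normal_data, normal_data, k=k, exclude_self=True)
    return float(np.percentile(scores, percentile))

def is_anomaly(normal_data, new_points, threshold, k=5):
    """Flag points whose kNN score exceeds the fitted threshold."""
    return knn_scores(normal_data, new_points, k=k) > threshold
```

The percentile controls the trade-off: a lower value flags more points (more false alarms), a higher value flags fewer (more missed anomalies).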

Flagging Potential Outliers Effectively:

- Confidence Scores: Assign confidence scores to anomaly detections based on the distance to the nearest neighbors or the degree of deviation from the distance threshold. This allows prioritizing high-confidence anomalies for further investigation.

- Time-Series Analysis: Analyze the temporal behavior of potential anomalies. Sudden spikes or changes compared to past readings can strengthen the case for an anomaly.
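One possible way to turn a distance score into a confidence value is shown below. The exponential mapping and the `scale` parameter are assumptions picked for illustration; any monotonic mapping of the excess over the threshold would serve the same purpose.

```python
import numpy as np

def anomaly_confidence(score, threshold, scale=1.0):
    """Map a kNN distance score to a confidence in [0, 1).

    Scores at or below the threshold get 0. Above it, confidence grows with
    the relative excess over the threshold and saturates toward 1.
    """
    excess = np.maximum(np.asarray(score, dtype=float) - threshold, 0.0) / threshold
    return 1.0 - np.exp(-excess / scale)
```

A score of twice the threshold maps to roughly 0.63 with the default `scale`, so alerts could be prioritized by sorting on this value.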

Additional Considerations:

- Concept Drift: Since sensor data patterns might change over time, consider techniques like online kNN variants or periodic retraining to adapt the model to evolving data distributions and potential concept drift.

- Alerting System: Design an alerting system based on confidence scores and temporal analysis. This system can trigger notifications for high-confidence anomalies requiring immediate attention.

Here's an example approach:

- Pre-process sensor data and extract relevant features.

- Maintain a sliding window of recent sensor readings.

- For each new data point:

  - Use a ball tree to find the k-nearest neighbors within the window.

  - Calculate the distance to the neighbors.

  - Compare the distance to a dynamically adjusted threshold or analyze local density using DBSCAN.

  - Assign a confidence score based on the deviation or density analysis.

- Trigger alerts for high-confidence anomalies with additional analysis of their temporal behavior.
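The steps above can be sketched as a small streaming detector. This is a simplified sketch, not a production design: it uses brute-force search inside the window instead of a ball tree, and the dynamic threshold is assumed to be a multiple (`factor`) of the median score of recently accepted readings. The class and parameter names are hypothetical.

```python
from collections import deque
import numpy as np

class SlidingWindowKNNDetector:
    def __init__(self, window_size=200, k=5, factor=5.0, min_points=20):
        self.window = deque(maxlen=window_size)   # recent accepted readings
        self.scores = deque(maxlen=window_size)   # their kNN scores
        self.k = k
        self.factor = factor
        self.min_points = max(min_points, k)

    def update(self, reading):
        """Score one reading against the window; return (score, flagged)."""
        x = np.asarray(reading, dtype=float).ravel()
        if len(self.window) < self.min_points:
            self.window.append(x)                 # warm-up: only collect data
            return 0.0, False
        ref = np.vstack(list(self.window))
        dists = np.sort(np.linalg.norm(ref - x, axis=1))
        score = float(dists[:self.k].mean())
        # Dynamic threshold: multiple of the median score of recent readings.
        flagged = bool(self.scores) and bool(
            score > self.factor * np.median(list(self.scores))
        )
        if not flagged:
            # Only accepted readings update the window, so anomalies
            # do not contaminate the reference data.
            self.window.append(x)
            self.scores.append(score)
        return score, flagged
```

Keeping flagged readings out of the window protects the baseline, but it also slows adaptation to genuine drift, so a real system might admit them after a confirmation period.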

By incorporating these strategies, you can leverage kNN for real-time anomaly detection in sensor data streams, effectively identifying and flagging potential outliers while maintaining processing efficiency.

Some potential areas to explore for kNN?

Here are some potential areas we could explore:

Advanced kNN Techniques:

- Locality Sensitive Hashing (LSH): This technique can significantly accelerate neighbor searches in high-dimensional data by probabilistically hashing similar data points close together. It's particularly useful for large datasets where traditional distance-based searches become computationally expensive.

- Cover Trees: These data structures offer provable bounds on nearest neighbor search time, particularly when the data has low intrinsic dimensionality. They can be a good alternative to ball trees or kd-trees depending on your specific scenario.

- Weighted kNN: As we discussed previously, weighted kNN assigns different weights to features during distance calculations. A related and more common variant weights the neighbors instead, so that closer neighbors have more influence on the prediction. You could explore more sophisticated weighting schemes based on feature importance or domain knowledge to further improve kNN performance.
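To give a feel for how LSH narrows the search, here is a toy sketch of random-hyperplane hashing, one common LSH family that targets angular (cosine) similarity. The class name, bit count, and single-table design are assumptions for illustration; practical systems use several hash tables to reduce missed neighbors.

```python
from collections import defaultdict
import numpy as np

class RandomHyperplaneLSH:
    def __init__(self, dim, n_bits=8, seed=0):
        rng = np.random.default_rng(seed)
        # Each row is the normal vector of one random hyperplane.
        self.planes = rng.normal(size=(n_bits, dim))
        self.buckets = defaultdict(list)
        self.data = []

    def _hash(self, x):
        # One bit per hyperplane: which side of the plane the point lies on.
        bits = (self.planes @ np.asarray(x, dtype=float)) > 0
        return bits.tobytes()

    def add(self, x):
        self.data.append(np.asarray(x, dtype=float))
        self.buckets[self._hash(x)].append(len(self.data) - 1)

    def query(self, x, k=3):
        """Return indices of up to k nearest candidates from x's own bucket."""
        x = np.asarray(x, dtype=float)
        candidates = self.buckets.get(self._hash(x), [])
        ranked = sorted(candidates, key=lambda i: np.linalg.norm(self.data[i] - x))
        return ranked[:k]
```

Only points sharing the query's bucket are compared exactly, which is where the speedup comes from, at the cost of occasionally missing a true neighbor that hashed elsewhere.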

Applications of kNN Beyond Classification:

- Regression: kNN can be adapted for regression tasks by using the average or weighted average of the target values of the k-nearest neighbors as the predicted value for a new data point. This approach can be useful for tasks like predicting continuous sensor readings or stock prices.

- Recommender Systems: kNN can be used to create collaborative filtering systems. By finding users with similar historical behavior (based on features like purchase history or ratings), you can recommend items that those similar users have enjoyed.

- Anomaly Detection (Advanced): We discussed some basic kNN adaptations for anomaly detection. You could delve deeper into Local Outlier Factor (LOF), which compares each point's local density with that of its k nearest neighbors, or Isolation Forests, a tree-based alternative that does not rely on neighbor distances.
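As a quick illustration of the regression use case, here is a minimal NumPy sketch that predicts a value as the plain or inverse-distance-weighted average of the k nearest targets. The function name and the small `eps` term (which avoids division by zero on exact matches) are assumptions for illustration.

```python
import numpy as np

def knn_regress(X_train, y_train, x_query, k=3, weighted=True, eps=1e-12):
    """Predict a target as the (weighted) average of the k nearest targets."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    d = np.linalg.norm(X_train - np.asarray(x_query, dtype=float), axis=1)
    nearest = np.argsort(d)[:k]
    if not weighted:
        return float(y_train[nearest].mean())
    w = 1.0 / (d[nearest] + eps)   # closer neighbors count more
    return float(np.sum(w * y_train[nearest]) / np.sum(w))

X = [[0.0], [1.0], [2.0], [3.0]]
y = [0.0, 10.0, 20.0, 30.0]
midpoint = knn_regress(X, y, [1.5], k=2, weighted=False)
```

With weighting enabled, a query that coincides with a training point returns essentially that point's target, since its weight dominates the average.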

Choosing the Right kNN Approach:

Selecting the most suitable kNN variation depends on factors like:

- Dataset characteristics: Size, dimensionality, data distribution (e.g., presence of outliers).

- Task type: Classification, regression, or anomaly detection.

- Computational constraints: Real-time processing requirements or limitations in processing power.

- Desired accuracy: Trade-off between accuracy and efficiency.

Experimentation with different kNN approaches, feature engineering techniques, and distance metrics is crucial for optimizing kNN for your specific use case.

Additional Considerations:

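- Interpretability: kNN has no learned coefficients or built-in feature importances, unlike some other models (e.g., decision trees). Each prediction can still be explained by inspecting the neighbors that produced it, and model-agnostic techniques like permutation importance can help understand which features kNN relies on for predictions.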

- Scalability: kNN can become computationally expensive for large datasets, because a brute-force query compares the new point against every stored sample. Consider techniques like dimensionality reduction or data sampling to improve scalability if needed.
