Sunday, March 6, 2022

Weka classification experiment on Iris dataset

Tags: Technology,Machine Learning,Classification

1: Weka Explorer: Preprocess Tab: Iris dataset

2: Weka Experiment Environment: New experiment

3: Weka Experiment Environment: Selecting Naive Bayes' Classifier

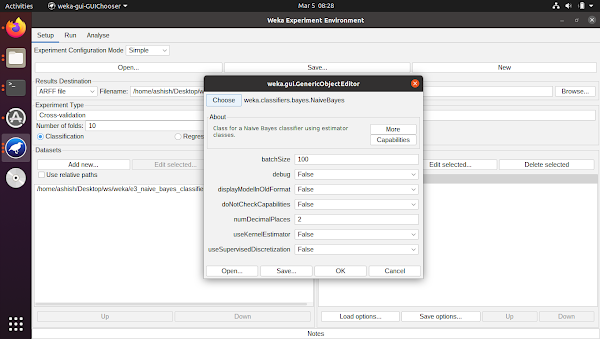

4: Weka Experiment Environment: Selecting kNN for comparison with Naive Bayes' Classifier

5: Weka Experiment Environment: Go to 'Run' tab and click on 'start'

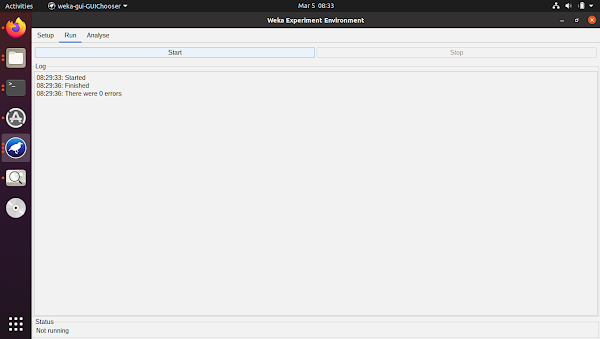

6: Weka Experiment Environment: Go to 'Analyze' tab and compare three algorithms

7: Weka Experiment Environment: Analyze tab: Perform test

Thursday, March 3, 2022

Saving Model, Loading Model and Making Predictions for Linear Regression (in Weka)

1: Weka Explorer. Preprocess Tab.

2: Weka Explorer. Classify Tab.

3: Weka Explorer. Visualize Tab

4: Weka Experiment. Setup Tab. Advanced Configuration.

5: Weka Experiment Environment. Analyze Tab

6: Weka Experiment Environment. Comparing ZeroR with Linear Regression.

7: Saving and Loading models from SimpleCLI (documentation)

8: Use of TAB key in Weka SimpleCLI (Doc)

9: Use of Tab key in Weka SimpleCLI (Demo)

10: Training and Saving Linear Regression model from SimpleCLI.

By default, Weka treats the last column as the target. When reading our file for the 'COALINDIA' ticker, it therefore took 'Day Count' as the dependent variable and 'Close Price' as the independent variable, which is the reverse of what we want.
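One way to avoid this is to reorder the columns before handing the file to Weka, so that the intended target comes last. The snippet below is a minimal sketch, not the exact steps used in this post: the sample values are made up for illustration, and the helper `move_target_last` and the output file name are hypothetical.

```python
import pandas as pd

# Made-up sample rows standing in for the COALINDIA data (illustrative only)
df = pd.DataFrame({
    "Close Price": [150.0, 152.5, 151.0],
    "Day Count": [1, 2, 3],
})

def move_target_last(frame, target):
    """Return a copy of frame with the target column moved to the end."""
    others = [c for c in frame.columns if c != target]
    return frame[others + [target]]

# 'Close Price' is what we want to predict, so it should be the last column
fixed = move_target_last(df, "Close Price")
print(list(fixed.columns))

# Hypothetical output file name; uncomment to write the corrected CSV
# fixed.to_csv("coalindia_fixed.csv", index=False)
```

With the target in the last position, Weka's default choice of class attribute matches the intended dependent variable.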

11: Dataset Corrected For Column Ordering

12: Training and Saving Linear Regression model after Correction in Dataset

13: Error during prediction for having only one col instead of two

14: Correction in test.csv

15: Error during prediction (string is not numeric)

16: Load Previously Saved Model in The Weka Explorer: Classify Tab

17: Select test data and select output predictions format

18: Select our previously saved model

19: View our saved model (Linear Regression) configuration

20: Re-evaluate model on current test set

21: View Classifier Output with saved model and extrapolated test data

Tags: Technology,Machine Learning,FOSS

Pandas DataFrame Filtering Using eval()

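    import pandas as pd

    df = pd.DataFrame({
        "col1": [1, 2, 3, 1, 2, 3],
        "col2": ["A", "B", "A", "B", "A", "B"]
    })

    # Filter condition stored as a string and evaluated with Python's built-in eval()
    c = r"(df['col1'] == 1) | (df['col2'] == 'A')"
    df[eval(c)]

    # Direct boolean-mask filter, for comparison
    df[df['col1'] == 1]

    # The same single-column filter, built from a string
    d = "df['col1'] == 1"
    df[eval(d)]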
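Because the built-in eval() will run any Python expression it is given, it may be worth comparing it with pandas' own `DataFrame.query`, which also accepts a filter as a string. The following is a small sketch of that alternative, using the same data as above; it is offered as one option, not as the approach this post relies on.

```python
import pandas as pd

df = pd.DataFrame({
    "col1": [1, 2, 3, 1, 2, 3],
    "col2": ["A", "B", "A", "B", "A", "B"],
})

# Same condition as the eval() string, written as a query expression
by_query = df.query("col1 == 1 or col2 == 'A'")

# Reference result built with an ordinary boolean mask
by_mask = df[(df["col1"] == 1) | (df["col2"] == "A")]

# Check that both approaches select the same rows
print(by_query.equals(by_mask))
```

In a query string, column names are referenced directly, so there is no need to repeat `df[...]` inside the expression.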

Tags: Technology,Machine Learning,Data Visualization

Wednesday, March 2, 2022

Demo of Linear Regression on Boston Housing Data Using Weka

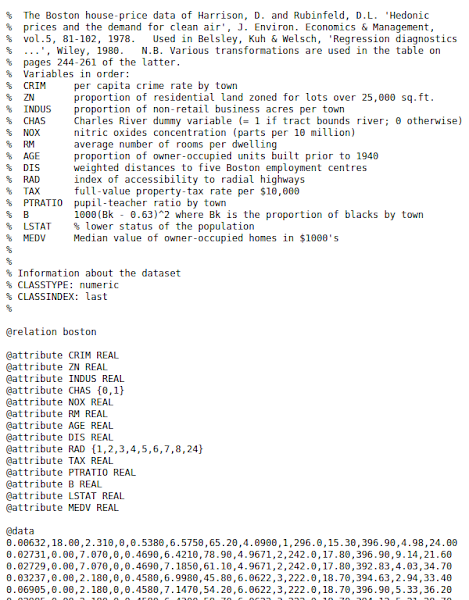

Tags: Technology,FOSS,Machine Learning

1 - Boston Housing ARFF file

2 - Weka Home Screen

3 - Weka Explorer

4 - Weka Explorer - Preprocess Tab - Boston Relation - MEDV Attribute

5 - Weka Explorer - Classify Tab

6 - Weka Explorer - Classify Tab - Linear Reg Equation

7 - Weka Explorer - Classify Tab - Select Linear Regression

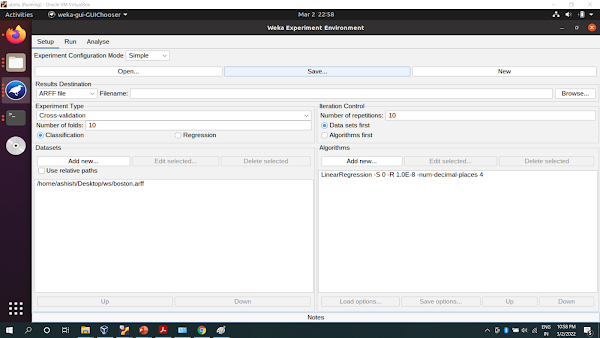

8 - Weka Experiment Environment

9 - Weka Experiment Environment - Add New Algorithm

10 - Weka Experiment Environment - Add New Algo - Linear Regression

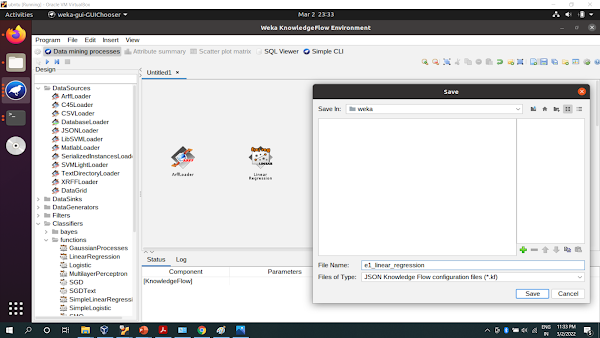

11 - Save Weka Experiment

12 - Weka SimpleCLI

13 - Weka KnowledgeFlow Environment

14 - Weka JSON KnowledgeFlow Configuration

15 - Weka Workbench - Classify Tab - Linear Regression
