Let us try to understand why NLP (Natural Language Processing) is considered hard, using a few examples:

1. "There was not a single man at the party."
   - Does it mean that there were no men at the party? Or
   - Does it mean that there was no one at the party? Or
   - Does "man" here refer to the gender "man" or to "mankind"?

2. "The chicken is ready to eat."
   - Does this mean that the bird (chicken) is ready to feed on some grains? Or
   - Does it mean that the meat is cooked well and is ready to be eaten by a human?

3. "Google is a great company" and "Google this word and find its meaning."
   - Google is used as a noun in the first statement and as a verb in the second. Google (noun) and Google (verb) are homonyms.

4. "The man saw a girl with a telescope."
   - Did the man use a telescope to see the girl? Or
   - Did the man see a girl who was holding a telescope?

5. Consider saying this to a voice interface like Siri or Alexa:
   She felt... less. She felt tamped down. Dim. More faint. Feint. Feigned. Fain. --Patrick Rothfuss

6. Why do we need a bidirectional parsing model for Natural Language Processing? Because in some sentences, later words tell us about words that came earlier. Consider these two sentences:
   a. She says, "Teddy bears are my favorite toy."
   b. She says, "Teddy Roosevelt was the 26th President of the United States."
   At a high level, a unidirectional LSTM model that has read as far as "Teddy" has seen only: She says, "Teddy
   A bidirectional LSTM, on the other hand, can also use the information further down the road. For sentence (b):
   - Forward LSTM will see: "She says, 'Teddy'."
   - Backward LSTM will see: "was the 26th President of the United States."

7. Word Sense Disambiguation
   As an example of the contextual effect between words, consider the word "by", which has several meanings, for example:
   # the book by Chesterton (agentive - Chesterton was the author of the book);
   # the cup by the stove (locative - the stove is where the cup is); and
   # submit by Friday (temporal - Friday is the time of the submitting).
   Observe below that the meaning of the last noun in each sentence helps us interpret the meaning of "by".
   a. The lost children were found by the searchers (agentive)
   b. The lost children were found by the mountain (locative)
   c. The lost children were found by the afternoon (temporal)

8. Pronoun Resolution
   Consider three possible following sentences, and try to determine what was sold, caught, and found (one case is ambiguous).
   a. The thieves stole the paintings. They were subsequently sold.
   b. The thieves stole the paintings. They were subsequently caught.
   c. The thieves stole the paintings. They were subsequently found.
   Answering this question involves finding the antecedent of the pronoun "they", either thieves or paintings. Computational techniques for tackling this problem include:
   - Anaphora resolution: identifying what a pronoun or noun phrase refers to.
   - Semantic role labeling: identifying how a noun phrase relates to the verb (as agent, patient, instrument, and so on).

Tags: Natural Language Processing
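The "Teddy" example in item 6 can be made concrete with a small sketch. It is not an LSTM. It only shows which tokens a left-to-right pass and a right-to-left pass have read by the time they reach a given word. The function name `directional_views` and the whitespace tokenization are assumptions made for illustration.

```python
def directional_views(tokens, index):
    """Return what a forward and a backward pass have read on reaching tokens[index]."""
    forward_view = tokens[: index + 1]  # left-to-right: everything up to and including the token
    backward_view = tokens[index:]      # right-to-left: the token and everything after it
    return forward_view, backward_view


# Pre-tokenized by hand so that a plain split() is enough for this sketch.
toy = 'She says , " Teddy bears are my favorite toy . "'.split()
president = 'She says , " Teddy Roosevelt was the 26th President of the United States . "'.split()

fwd_toy, bwd_toy = directional_views(toy, toy.index("Teddy"))
fwd_pres, bwd_pres = directional_views(president, president.index("Teddy"))

# The forward views are identical, so a forward-only model cannot tell the two "Teddy"s apart.
print(fwd_toy == fwd_pres)
# The backward views differ immediately after "Teddy".
print(bwd_toy[:2], bwd_pres[:2])
```

Only the backward view separates the toy from the president, which is the intuition behind reading the sentence in both directions.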


Monday, July 18, 2022

Sentences that show Natural Language Processing of English is hard

Friday, July 15, 2022

Pitara 2 (Story 2 - Rani Learns Fast)

Rani Learns Fast

Rani said: My homework is done. Papa, now I want to water the plants.
Papa: First, switch off the fan, Rani.
Rani turns off the fan.
Papa said: Now go water the plants.
Rani: Ok papa, Thank you.
Rani said: All the plants look happy! May I please go out to play, Maa?
Maa: Turn the tap off before you go, Rani.
Rani turns the tap off.
Maa said: Now, you can go to the park.
Rani said: Ok, maa. Thank you.

Later...

Rani: Maa! Papa! I am back! I want to watch TV with you.
Maa and Papa said: Come Rani, let us watch TV together.

After some time...

Rani said: I want to draw now.
Papa said: Let us cook dinner.
Maa: Let us go to the kitchen.
Rani said: Switch off the TV, Maa, Papa.
Maa, papa said: Oh! Sorry!

Pitara 2 (Story 1 - Tillu Cares for the Books)

Tillu Cares for the Books

Teacher: Hello kids! Let us take a book and read.
Billu said: I like this drawing in the book. I will take out this page.
Tillu: Stop! Stop! Don’t tear the page.
Billu: I always keep drawings with me.
Tillu said: Please don’t tear the page.
Billu: I don’t care. I want the drawing.
Tillu: Oh no! Give it here, Billu please.
Teacher: Well done, Tillu. I am proud of you.
Teacher said: Everyone clap for Tillu. He takes care of books.
Billu said: Thank you! I’ll not tear any pages again. I will care for books.

Note: “I’ll” is a contraction of “I will”.

Pitara 1 (Story 3 - Rani and Soni meet Sheru)

Rani and Soni meet Sheru

Rani: Soni, look there.
Soni: Oh! There is a little puppy under the tree.
Rani: He is so cute!
Soni: But he looks so weak.
Rani: He wants food.
Rani and Soni said to their mother: Mummy, please give us some roti for the little puppy.
Rani said to the puppy: Please come. Eat this. This is for you.
Soni: Shall we call him Sheru?
Rani: Oh, Sheru! Wow! This is a good name.

Pitara 1 (Story 2 - Shiny, Tillu and Billu)

SHINY TILLU

Shiny: It is a good day.
Tillu: I want to sing.
Shiny: La, La, La, La...
Tillu: You smell bad.
Shiny: Hmmm! I have to brush my teeth.
Tillu runs home.
# “Runs” is a verb.
Tillu brushes his teeth.
# Brush (verb) : Brush (noun). Brush is a homonym.
# Homonyms: Words with the same spelling and pronunciation, but different meanings.
# What is the verb in the above sentence?
# How many nouns are there in this sentence?
Tillu: Now I feel good.
Billu: Hello! Tillu.
Billu: Wow! You smell so good.

Pitara 1 (Story 1 - Kaku, Rani and Friends)

Kaku: I am Kaku.
Rani: I am Rani.
Kaku: I am in class 1A.
Rani: I am in class 1C.
Kaku: I like dancing.
Rani: I like singing.
Kaku: I like to eat orange.
Rani: I like bananas more.
Kaku: I do not like to eat tori.
Rani: I do not like to eat egg.
Kaku: I am happy to meet you.
Rani: Same here.

Thursday, July 14, 2022

Learn Multi-Digit Addition by Counting Sticks


Tuesday, July 12, 2022

Student Update (2022-Jul-12)

Index of Journals

Tags: Student Update, Journal

Counting

- Komal Kumari Class: 4 Till: 20
- Kusum Kumari Class: 5 Till: 89
- Md Nihal Class: 6 Till: 29

Tables

- Yash Kashyap Class: 5 Tables Till: 7

BODMAS

- Shiva Patel Class: 6 Level: 2
- Roshan Null Class: 7B Level: 2 (Could not solve subtraction of a bigger number from a smaller one)

Monday, July 11, 2022

Natural Language Processing Questions and Answers (Set 1 of 11 Ques)

1. Which of the following areas are ones where NLP can be used?
   - Automatic Text Summarization
   - Automatic question answering systems
   - Information retrieval from documents
   - News Categorization
   Ans: All four (Automatic Text Summarization, Automatic question answering systems, Information retrieval from documents, News Categorization)

2. Which of the following is/are among the many challenges of NLP?
   - Ambiguity in the data
   - High variation in data
   - Insufficient tagged data
   - Lack of processing power
   Ans: Ambiguity in the data, High variation in data, Insufficient tagged data

3. Single Choice Correct. Tokenization is:
   - removal of punctuation in the data
   - extraction of unique words in the data
   - removal of stop words
   - splitting the text into tokens (Correct)

4. Single Choice Correct. The output of the nltk.word_tokenize() method is a:
   - Tuple
   - String
   - List (Correct)
   - Dictionary

5. Single Choice Correct. Stemming is the process of:
   - Reducing a sentence to its summary
   - Reducing a word to its base form / dictionary form
   - Reducing a word to its root word (Correct)
   - Removing unwanted tokens from text

6. Single Choice Correct. The output of lemmatize("went") is:
   - went
   - go (Correct)
   Note: with NLTK's WordNetLemmatizer this answer assumes the word is treated as a verb, i.e. lemmatize("went", pos="v"). With the default part of speech (noun), the word is returned unchanged.

7. Single Choice Correct. What would be the output of the code below?

       import re

       flight_details = "Flight Indigo Airlines a2138"
       if re.search(r"Airlines", flight_details) is not None:
           print("Match found: Airlines")
       else:
           print("Match not found")

   - Match found: Airlines (Correct)
   - Match not found

8. Single Choice Correct. Lemmatization is the process of:
   - Reducing a sentence to its summary
   - Reducing a word to its base form / dictionary form (Correct)
   - Reducing a word to its root word
   - Removing unwanted tokens from text
   Ref: https://nlp.stanford.edu/IR-book/html/htmledition/stemming-and-lemmatization-1.html

9. Multiple Choice Correct. Which of these are benefits of Annotation (POS Tagging)?
   a. Provides context to text in NLP
   b. Adds metadata to the text
   c. Helps in entity recognition
   d. Reduces size of data
   Correct answers: a, b, c

10. Single Choice Correct. Which one of the following from the NLTK package assigns the same tag to all the words?
    a. Default Tagger (Correct)
    b. Unigram Tagger
    c. N-gram Tagger
    d. Regular Expression Tagger

11. Which of these from the NLTK package is considered a context-dependent tagger?
    a. N-gram Tagger (Correct)
    b. Regular Expression Tagger
    c. Unigram Tagger

Tags: Natural Language Processing
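Questions 3, 7, and 10 can be tried out with the standard library alone. The sketch below is only an approximation of the ideas. `simple_tokenize` is a hypothetical regex-based stand-in and not the algorithm behind nltk.word_tokenize(), and `default_tag` is a hypothetical helper that mimics what a default tagger does conceptually, not NLTK's class.

```python
import re


def simple_tokenize(text):
    """Question 3: split text into tokens (words and single punctuation marks).

    Assumption: this regex is a rough stand-in, not what nltk.word_tokenize() does.
    """
    return re.findall(r"\w+|[^\w\s]", text)


def default_tag(tokens, tag="NN"):
    """Question 10: a default tagger gives every token the same tag (hypothetical helper)."""
    return [(token, tag) for token in tokens]


tokens = simple_tokenize("Flight Indigo Airlines a2138.")
print(tokens)  # a list, like the return value asked about in question 4

# Question 7: re.search returns a match object if the pattern occurs anywhere in the string.
flight_details = "Flight Indigo Airlines a2138"
if re.search(r"Airlines", flight_details) is not None:
    result = "Match found: Airlines"
else:
    result = "Match not found"
print(result)

tagged = default_tag(tokens)
print(tagged)
```

A context-dependent tagger (question 11) would differ from `default_tag` by looking at neighbouring tokens or tags before choosing a tag.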

Sunday, July 10, 2022

Tuesday, July 5, 2022

Practice identifying number in a series

Notes

Arithmetic Progression

An arithmetic progression or arithmetic sequence is a sequence of numbers such that the difference between the consecutive terms is constant. For instance, the sequence 5, 7, 9, 11, 13, 15... is an arithmetic progression with a common difference of 2.

Geometric Progression

In mathematics, a geometric progression, also known as a geometric sequence, is a sequence of non-zero numbers where each term after the first is found by multiplying the previous one by a fixed, non-zero number called the common ratio. For example, the sequence 2, 6, 18, 54, ... is a geometric progression with common ratio 3. Similarly 10, 5, 2.5, 1.25, ... is a geometric sequence with common ratio 1/2.

Examples of a geometric sequence are powers r^k of a fixed non-zero number r, such as 2^k and 3^k. The general form of a geometric sequence is:

a, ar, ar^2, ar^3, ar^4, ...

where r ≠ 0 is the common ratio and a ≠ 0 is a scale factor, equal to the sequence's start value.

The distinction between a progression and a series is that a progression is a sequence, whereas a series is a sum.
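The definitions above can be checked with a short sketch. The generator names are chosen here for illustration, and the sample values are the ones used in the notes (5, 7, 9, ... with difference 2, and 2, 6, 18, ... with ratio 3).

```python
from itertools import islice


def arithmetic_progression(first, difference):
    """Yield first, first + d, first + 2d, ... (constant difference between terms)."""
    term = first
    while True:
        yield term
        term += difference


def geometric_progression(first, ratio):
    """Yield a, ar, ar^2, ... (each term is the previous one times the common ratio)."""
    term = first
    while True:
        yield term
        term *= ratio


ap = list(islice(arithmetic_progression(5, 2), 6))
gp = list(islice(geometric_progression(2, 3), 4))
halving = list(islice(geometric_progression(10, 0.5), 4))

print(ap)       # the progression (a sequence)
print(sum(ap))  # the corresponding series (a sum)
print(gp)
print(halving)
```

The last two print calls on `ap` show the distinction drawn above: the list is the progression, and its sum is the series.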


Learn Subtraction by Counting Sticks

Note: We will subtract the smaller number from the bigger number.


Solved problems on identifying number in a series

Questions

- Ques 1: 6, 36, 216, [_]
- Ques 2: 8, 19, 41, 85, [_]
- Ques 3: 17, 17, 34, 102, [_]
- Ques 4: 568, 579, 601, 634, [_]
- Ques 5: 858, 848, 828, 788, [_]
- Ques 6: 1123, 1128, 1138, 1158, [_]

Solutions

Ques 1: 6, 36, 216, [_]
Ans:
6^1 = 6
6^2 = 36
6^3 = 216
6^4 = 1296
Answer is: 1296

Ques 2: 8, 19, 41, 85, [_]
Ans:
8 * 2 + 3 = 19
19 * 2 + 3 = 41 (i.e., 38 + 3)
41 * 2 + 3 = 85
85 * 2 + 3 = 173
Answer is: 173

Ques 3: 17, 17, 34, 102, [_]
Ans:
17 * 1 = 17
17 * 2 = 34
34 * 3 = 102
102 * 4 = 408
Answer is: 408

Ques 4: 568, 579, 601, 634, [_]
Ans:
568 + 11 = 579
579 + 22 = 601
601 + 33 = 634
634 + 44 = 678
Answer is: 678

Ques 5: 858, 848, 828, 788, [_]
Ans:
858 - 10 = 848
848 - 20 = 828
828 - 40 = 788
788 - 80 = 708
Answer is: 708

Ques 6: 1123, 1128, 1138, 1158, [_]
Ans:
1123 + 5 = 1128
1128 + 10 = 1138
1138 + 20 = 1158
1158 + 40 = 1198
Answer is: 1198
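The six solutions can be double-checked in code. In this sketch each rule is written as a function of the last term and its 1-based position n. The helper `check_and_extend` and the way the rules are phrased are assumptions made for illustration. The helper first confirms that a rule reproduces every given term and only then computes the missing one.

```python
def check_and_extend(series, step):
    """Verify that `step` explains the given terms, then return the next term."""
    for n in range(1, len(series)):
        assert step(series[n - 1], n) == series[n], "rule does not fit the given terms"
    return step(series[-1], len(series))


# Each entry: (given terms, rule taking the last term and its 1-based position n).
rules = {
    "Ques 1": ([6, 36, 216], lambda last, n: last * 6),
    "Ques 2": ([8, 19, 41, 85], lambda last, n: last * 2 + 3),
    "Ques 3": ([17, 17, 34, 102], lambda last, n: last * n),
    "Ques 4": ([568, 579, 601, 634], lambda last, n: last + 11 * n),
    "Ques 5": ([858, 848, 828, 788], lambda last, n: last - 10 * 2 ** (n - 1)),
    "Ques 6": ([1123, 1128, 1138, 1158], lambda last, n: last + 5 * 2 ** (n - 1)),
}

answers = {name: check_and_extend(series, step) for name, (series, step) in rules.items()}
for name, answer in answers.items():
    print(name, "->", answer)
```

Questions 5 and 6 differ from question 4 in that the amount subtracted or added doubles each time (a geometric progression) instead of growing by a fixed step (an arithmetic progression).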

Digging deeper into your toolbox (Viewing LDiA code of sklearn)

Tags: Machine Learning, Natural Language Processing, FOSS

Digging deeper into your toolbox

You can find the source code path in the __file__ attribute on any Python module, such as sklearn.__file__. And in ipython (jupyter console), you can view the source code for any function, class, or object with ??, like LDA??:

    >>> import sklearn
    >>> sklearn.__file__
    '/Users/hobs/anaconda3/envs/conda_env_nlpia/lib/python3.6/site-packages/sklearn/__init__.py'
    >>> from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA
    >>> from sklearn.decomposition import LatentDirichletAllocation as LDiA
    >>> LDA??
    Init signature: LDA(solver='svd', shrinkage=None, priors=None, n_components=None, store_covariance=False, tol=0.0001)
    Source:
    class LinearDiscriminantAnalysis(BaseEstimator, LinearClassifierMixin, TransformerMixin):
        """Linear Discriminant Analysis

        A classifier with a linear decision boundary, generated by fitting class
        conditional densities to the data and using Bayes' rule.

        The model fits a Gaussian density to each class, assuming that all classes
        share the same covariance matrix.
        ...

This won’t work on functions and classes that are extensions, whose source code is hidden within a compiled C++ module.
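Outside of ipython, the standard library's inspect module gives a similar view. The sketch below uses the json module as a stand-in for sklearn (an assumption, so that it runs without extra packages) and shows that the lookup fails for a function implemented in compiled code, such as the built-in len.

```python
import inspect
import json

# Every pure-Python module records where it was loaded from.
module_path = json.__file__
print(module_path)

# inspect.getsource returns the source text of a Python-level function or class.
source = inspect.getsource(json.dumps)
first_line = source.splitlines()[0]
print(first_line)

# For objects implemented in compiled code there is no Python source to show.
try:
    inspect.getsource(len)
    builtin_source_available = True
except (TypeError, OSError):
    builtin_source_available = False
print(builtin_source_available)
```

The same two calls should work on sklearn objects that are written in Python, for example inspect.getsource(LDA) after the import shown above.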

Semantic Analysis of Words and Sentences in Natural Language Processing

Coming up with a numerical representation of the semantics (meaning) of words and sentences can be tricky. This is especially true for “fuzzy” languages like English, which has multiple dialects and many different interpretations of the same words. Even formal English text written by an English professor can’t avoid the fact that most English words have multiple meanings, a challenge for any new learner, including machine learners.

Tags: Natural Language Processing

Polysemy

This concept of words with multiple meanings is called polysemy: the existence of words and phrases with more than one meaning.

Here are some ways in which polysemy can affect the semantics of a word or statement. We list them here for you to appreciate the power of LSA. You don’t have to worry about these challenges. LSA takes care of all this for us:

- Homonyms: Words with the same spelling and pronunciation, but different meanings
- Zeugma: Use of two meanings of a word simultaneously in the same sentence

And LSA also deals with some of the challenges of polysemy in a voice interface, a chatbot that you can talk to, like Alexa or Siri:

- Homographs: Words spelled the same, but with different pronunciations and meanings
- Homophones: Words with the same pronunciation, but different spellings and meanings (an NLP challenge with voice interfaces)

Imagine if you had to deal with a statement like the following, if you didn’t have tools like LSA to deal with it:

She felt ... less. She felt tamped down. Dim. More faint. Feint. Feigned. Fain. --Patrick Rothfuss

Monday, July 4, 2022

Stemming (A Natural Language Processing Challenge)

Tags: Natural Language Processing

Challenges (a preview of stemming)

As an example of why feature extraction from text is hard, consider stemming: grouping the various inflections of a word into the same “bucket” or cluster. Very smart people spent their careers developing algorithms for grouping inflected forms of words together based only on their spelling. Imagine how difficult that is.

Imagine trying to remove verb endings like “ing” from “ending” so you’d have a stem called “end” to represent both words. And you’d like to stem the word “running” to “run,” so those two words are treated the same. And that’s tricky, because you have to remove not only the “ing” but also the extra “n.” But you want the word “sing” to stay whole. You wouldn’t want to remove the “ing” ending from “sing” or you’d end up with a single letter “s.”

Or imagine trying to discriminate between a pluralizing “s” at the end of a word like “words” and a normal “s” at the end of words like “bus” and “lens.” Do isolated individual letters in a word or parts of a word provide any information at all about that word’s meaning? Can the letters be misleading? Yes and yes.

In this chapter we show you how to make your NLP pipeline a bit smarter by dealing with these word spelling challenges using conventional stemming approaches. You can try for yourself statistical clustering approaches that only require you to amass a collection of natural language text containing the words you’re interested in. From that collection of text, the statistics of word usage will reveal “semantic stems” (actually, more useful clusters of words like lemmas or synonyms), without any handcrafted regular expressions or stemming rules.
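The pitfalls described above are easy to reproduce. The two functions below are deliberately simplistic sketches written for this post. They are not a real stemming algorithm such as the ones shipped with NLP libraries, and the names and the length threshold are assumptions.

```python
def naive_stem(word):
    """Strip a trailing 'ing' or 's' with no further checks (deliberately too simple)."""
    if word.endswith("ing"):
        return word[:-3]
    if word.endswith("s"):
        return word[:-1]
    return word


def guarded_stem(word):
    """Add two hand-written guards: keep short words whole, undouble a final consonant."""
    if word.endswith("ing") and len(word) > 5:
        stem = word[:-3]
        if len(stem) > 2 and stem[-1] == stem[-2]:
            stem = stem[:-1]  # "runn" -> "run"
        return stem
    return word


for word in ["ending", "running", "sing", "words", "bus", "lens"]:
    print(word, "->", naive_stem(word))

for word in ["ending", "running", "sing", "falling"]:
    print(word, "->", guarded_stem(word))
```

The naive version turns "sing" into "s" and "bus" into "bu". The guarded version fixes "running" and "sing" but now breaks "falling", which illustrates why handcrafted rules keep needing more exceptions.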

Learn Addition by Counting Sticks


Sunday, July 3, 2022

Expansion of numbers into place values

Select Level:

Ques: Write the expanded form of following number using the Place Values:

Enter Place Values Here. Add More Input Boxes if Needed.

Tags: Mathematical Foundations for Data Science,
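As a worked illustration of the exercise, the sketch below expands a non-negative whole number into its place values. The function name `expand_place_values` and the choice to skip zero digits are assumptions made for this example.

```python
def expand_place_values(number):
    """Return the place-value parts of a non-negative integer, largest first."""
    digits = str(number)
    parts = []
    for position, digit in enumerate(digits):
        power = len(digits) - position - 1  # 0 for ones, 1 for tens, 2 for hundreds, ...
        if digit != "0":                    # a zero digit contributes nothing
            parts.append(int(digit) * 10 ** power)
    return parts or [0]


parts = expand_place_values(4072)
print(" + ".join(str(part) for part in parts))
```

Adding the parts back together gives the original number, which is a quick way to check an answer.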


Saturday, July 2, 2022

Practical applications of Natural Language Processing

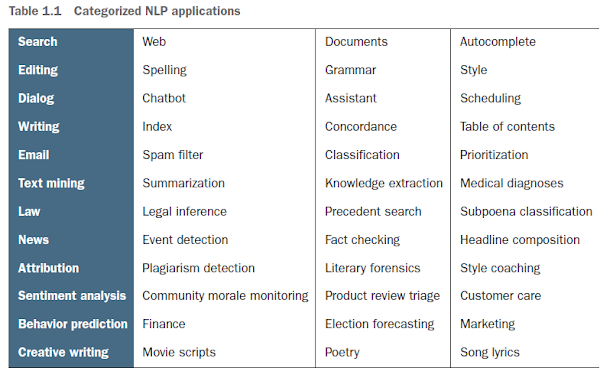

Natural language processing is everywhere. It’s so ubiquitous that some of the examples in the table below may surprise you.

Tags: Natural Language Processing

Categorized NLP applications

A search engine can provide more meaningful results if it indexes web pages or document archives in a way that takes into account the meaning of natural language text. Autocomplete uses NLP to complete your thought and is common among search engines and mobile phone keyboards. Many word processors, browser plugins, and text editors have spelling correctors, grammar checkers, concordance composers, and most recently, style coaches. Some dialog engines (chatbots) use natural language search to find a response to their conversation partner’s message.

NLP pipelines that generate (compose) text can be used not only to compose short replies in chatbots and virtual assistants, but also to assemble much longer passages of text. The Associated Press uses NLP “robot journalists” to write entire financial news articles and sporting event reports. Bots can compose weather forecasts that sound a lot like what your hometown weather person might say, perhaps because human meteorologists use word processors with NLP features to draft scripts.

NLP spam filters in early email programs helped email overtake telephone and fax communication channels in the '90s. And the spam filters have retained their edge in the cat and mouse game between spam filters and spam generators for email, but may be losing in other environments like social networks. An estimated 20% of the tweets about the 2016 US presidential election were composed by chatbots. These bots amplify their owners’ and developers’ viewpoints. And these “puppet masters” tend to be foreign governments or large corporations with the resources and motivation to influence popular opinion.

NLP systems can generate more than just short social network posts. NLP can be used to compose lengthy movie and product reviews on Amazon and elsewhere. Many reviews are the creation of autonomous NLP pipelines that have never set foot in a movie theater or purchased the product they’re reviewing.

There are chatbots on Slack, IRC, and even customer service websites, places where chatbots have to deal with ambiguous commands or questions. And chatbots paired with voice recognition and generation systems can even handle lengthy conversations with an indefinite goal or “objective function” such as making a reservation at a local restaurant. NLP systems can answer phones for companies that want something better than a phone tree but don’t want to pay humans to help their customers.

NOTE: With its Duplex demonstration at Google IO, engineers and managers overlooked concerns about the ethics of teaching chatbots to deceive humans. We all ignore this dilemma when we happily interact with chatbots on Twitter and other anonymous social networks, where bots don’t share their pedigree. With bots that can so convincingly deceive us, the AI control problem looms, and Yuval Harari’s cautionary forecast of “Homo Deus” may come sooner than we think.

NLP systems exist that can act as email “receptionists” for businesses or executive assistants for managers. These assistants schedule meetings and record summary details in an electronic Rolodex, or CRM (customer relationship management system), interacting with others by email on their boss’s behalf. Companies are putting their brand and face in the hands of NLP systems, allowing bots to execute marketing and messaging campaigns. And some inexperienced daredevil NLP textbook authors are letting bots author several sentences in their book.
