Chatbot Recirculating (Recurrent) Pipeline

Bag of Words

Abv.: BOW

from nltk.tokenize import TreebankWordTokenizer sentence = """The faster Harry got to the store, the faster Harry, the faster, would get home.""" tokenizer = TreebankWordTokenizer() tokens = tokenizer.tokenize(sentence.lower()) print(tokens) ['the', 'faster', 'harry', 'got', 'to', 'the', 'store', ',', 'the', 'faster', 'harry', ',', 'the', 'faster', ',', 'would', 'get', 'home', '.'] from collections import Counter bag_of_words = Counter(tokens) bag_of_words Counter({'the': 4, 'faster': 3, 'harry': 2, 'got': 1, 'to': 1, 'store': 1, ',': 3, 'would': 1, 'get': 1, 'home': 1, '.': 1})Term Frequency

= # times the word appears in the text / # words in the text

v = list(bag_of_words.values()) k = list(bag_of_words.keys()) l = len(k) tf_dict = {} for i, elem in enumerate(bag_of_words.keys()): tf_dict[k[i]] = round(v[i]/l, 3) tf_dict OUT: { 'the': 0.364, 'faster': 0.273, 'harry': 0.182, 'got': 0.091, 'to': 0.091, 'store': 0.091, ',': 0.273, 'would': 0.091, 'get': 0.091, 'home': 0.091, '.': 0.091 }Cosine Similarity

In Python this would be a.dot(b) == np.linalg.norm(a) * np.linalg.norm(b) * np.cos(theta) Solving this relationship for cos(theta), you can derive the cosine similarity using Or you can do it in pure Python without numpy, as in the following listing. import math def cosine_sim(vec1, vec2): """ Let's convert our dictionaries to lists for easier matching.""" vec1 = [val for val in vec1.values()] vec2 = [val for val in vec2.values()] dot_prod = 0 for i, v in enumerate(vec1): dot_prod += v * vec2[i] mag_1 = math.sqrt(sum([x**2 for x in vec1])) mag_2 = math.sqrt(sum([x**2 for x in vec2])) return dot_prod / (mag_1 * mag_2)Zipf's Law

Zipf's law states that given some corpus of natural language utterances, the frequency of any word is inversely proportional to its rank in the frequency table. freq(word) = k / r Where k is a constant and r is the rank of the word.IDF (Inverse Document Frequency) and TF-IDF

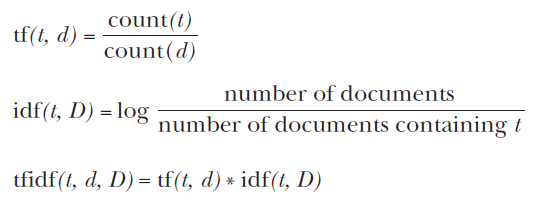

For a given term, t, in a given document, d, in a corpus, D, you get:Summary

# Any web-scale search engine with millisecond response times has the power of a TF-IDF term document matrix hidden under the hood. # Term frequencies must be weighted by their inverse document frequency to ensure the most important, most meaningful words are given the heft they deserve. # Zipf's law can help you predict the frequencies of all sorts of things, including words, characters, and people. # The rows of a TF-IDF term document matrix can be used as a vector representation of the meanings of those individual words to create a vector space model of word semantics. # Euclidean distance and similarity between pairs of high dimensional vectors doesn't adequately represent their similarity for most NLP applications. # Cosine distance, the amount of "overlap" between vectors, can be calculated efficiently by just multiplying the elements of normalized vectors together and summing up those products. # Cosine distance is the go-to similarity score for most natural language vector representations.Practical

txt1 = """ A kite is traditionally a tethered heavier-than-air craft with wing surfaces that react against the air to create lift and drag. A kite consists of wings, tethers, and anchors. Kites often have a bridle to guide the face of the kite at the correct angle so the wind can lift it. A kite's wing also may be so designed so a bridle is not needed; when kiting a sailplane for launch, the tether meets the wing at a single point. A kite may have fixed or moving anchors. Untraditionally in technical kiting, a kite consists of tether-set-coupled wing sets; even in technical kiting, though, a wing in the system is still often called the kite. The lift that sustains the kite in flight is generated when air flows around the kite's surface, producing low pressure above and high pressure below the wings. The interaction with the wind also generates horizontal drag along the direction of the wind. The resultant force vector from the lift and drag force components is opposed by the tension of one or more of the lines or tethers to which the kite is attached. The anchor point of the kite line may be static or moving (such as the towing of a kite by a running person, boat, free-falling anchors as in paragliders and fugitive parakites or vehicle). The same principles of fluid flow apply in liquids and kites are also used under water. A hybrid tethered craft comprising both a lighter-than-air balloon as well as a kite lifting surface is called a kytoon. Kites have a long and varied history, and many different types are flown individually and at festivals worldwide. Kites may be flown for recreation, art or other practical uses. Sport kites can be flown in aerial ballet, sometimes as part of a competition. Power kites are multi-line steerable kites designed to generate large forces which can be used to power activities such as kite surfing, kite landboarding, kite fishing, kite buggying and a new trend snow kiting. Even Man-lifting kites have been made. """ txt2 = """ Kites were invented in China, where materials ideal for kite building were readily available: silk fabric for sail material; fine, high-tensile-strength silk for flying line; and resilient bamboo for a strong, lightweight framework. The kite has been claimed as the invention of the 5th-century BC Chinese philosophers Mozi (also Mo Di) and Lu Ban (also Gongshu Ban). By 549 AD paper kites were certainly being flown, as it was recorded that in that year a paper kite was used as a message for a rescue mission. Ancient and medieval Chinese sources describe kites being used for measuring distances, testing the wind, lifting men, signaling, and communication for military operations. The earliest known Chinese kites were flat (not bowed) and often rectangular. Later, tailless kites incorporated a stabilizing bowline. Kites were decorated with mythological motifs and legendary figures; some were fitted with strings and whistles to make musical sounds while flying. From China, kites were introduced to Cambodia, Thailand, India, Japan, Korea and the western world. After its introduction into India, the kite further evolved into the fighter kite, known as the patang in India, where thousands are flown every year on festivals such as Makar Sankranti. Kites were known throughout Polynesia, as far as New Zealand, with the assumption being that the knowledge diffused from China along with the people. Anthropomorphic kites made from cloth and wood were used in religious ceremonies to send prayers to the gods. Polynesian kite traditions are used by anthropologists get an idea of early "primitive" Asian traditions that are believed to have at one time existed in Asia. """ from sklearn.feature_extraction.text import TfidfVectorizer corpus = [txt1, txt2] vectorizer = TfidfVectorizer() X = vectorizer.fit_transform(corpus) print(X) OUTPUT: <2x300 sparse matrix of type '<class 'numpy.float64'>' with 333 stored elements in Compressed Sparse Row format> print(len(vectorizer.get_feature_names())) print(vectorizer.get_feature_names()[0:10]) OUTPUT: 300 ['549', '5th', 'above', 'activities', 'ad', 'aerial', 'after', 'against', 'air', 'along'] from sklearn.metrics.pairwise import cosine_similarity t1 = vectorizer.transform([txt1]) t2 = vectorizer.transform([txt2]) cosine_similarity(t1, t2) # array([[0.50239949]])Finding meaning in word counts: Semantic analysis

The problem with TF-IDF and "Words that mean the same": TF-IDF vectors and lemmatization

TF-IDF vectors count the exact spellings of terms in a document. So, texts that restate the same meaning will have completely different TF-IDF vector representations if they spell things differently or use different words. This messes up search engines and document similarity comparisons that rely on counts of tokens.POLYSEMY

This is the opposite of problem (many words and one meaning) discussed in previous slide, here we have "one word and many meanings". Coming up with a numerical representation of the semantics (meaning) of words and sentences can be tricky. This is especially true for "fuzzy" languages like English, which has multiple dialects and many different interpretations of the same words. Even formal English text written by an English professor can't avoid the fact that most English words have multiple meanings, a challenge for any new learner, including machine learners. This concept of words with multiple meanings is called polysemy: # Polysemy - The existence of words and phrases with more than one meaningMore like Polysemy

# Homonyms Words with the same spelling and pronunciation, but different meanings # Zeugma Use of two meanings of a word simultaneously in the same sentence # Homographs Words spelled the same, but with different pronunciations and meanings # Homophones Words with the same pronunciation, but different spellings and meanings (an NLP challenge with voice interfaces) Imagine if you had to deal with a statement like the following: She felt... less. She felt tamped down. Dim. More faint. Feint. Feigned. Fain. (Patrick Rothfuss)Beginning to solve the problem: Linear discriminant analysis (LDA)

LDA breaks down a document into only one topic. To get more topics, use LDiA instead. LDiA (Latent Dirichlet allocation) can break down documents into many topics as you'd like. 1.1) It's one dimensional. You can just compute the centroid (average or mean) of all your TF-IDF vectors for each side of a binary class, like spam and non-spam. 1.2) Your dimension then becomes the line between those two centroids. 1.3) The further a TF-IDF vector is along that line (the dot product of the TF-IDF vector with that line) tells you how close you are to one class or another. LDA classifier is a supervised algorithm, so you do need labels for your document classes. But LDA requires far fewer samples than fancier algorithms. For this example, we show you a simplified implementation of LDA that you can't find in scikit-learn. The model "training" has only three steps: 2.1) Compute the average position (centroid) of all the TF-IDF vectors within the class (such as spam SMS messages). 2.2) Compute the average position (centroid) of all the TF-IDF vectors not in the class (such as non-spam SMS messages). 2.3) Compute the vector difference between the centroids (the line that connects them). All you need to "train" an LDA model is to find the vector (line) between the two centroids for your binary class. LDA is a supervised algorithm, so you need labels for your messages. To do inference or prediction with that model, you just need to find out if a new TF-IDF vector is closer to the in-class (spam) centroid than it is to the out-of-class (non-spam) centroid.

Pages

- Index of Lessons in Technology

- Index of Book Summaries

- Index of Book Lists And Downloads

- Index For Job Interviews Preparation

- Index of "Algorithms: Design and Analysis"

- Python Course (Index)

- Data Analytics Course (Index)

- Index of Machine Learning

- Postings Index

- Index of BITS WILP Exam Papers and Content

- Lessons in Investing

- Index of Math Lessons

- Index of Management Lessons

- Book Requests

- Index of English Lessons

- Index of Medicines

- Index of Quizzes (Educational)

Saturday, January 8, 2022

Math With Words - A lesson in Natural Language Processing

Subscribe to:

Post Comments (Atom)

No comments:

Post a Comment