For web security, you can prevent a website from being opened inside an IFrame, as 'WhatsApp Web' does.

<div>

<p>WhatsApp Error: Prevention from opening WhatsApp Web in an IFrame.</p>

</div>

<iframe src="https://web.whatsapp.com/" title="My WhatsApp" width=900 height=400></iframe>

View in Mozilla Firefox:
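A site usually refuses to be framed by sending the `X-Frame-Options` response header and/or a `Content-Security-Policy` header with a `frame-ancestors` directive. The post does not say which of these WhatsApp Web relies on, so the following is only a minimal sketch, using Python's standard-library WSGI tools, of how a site of your own might add both. The names `deny_framing`, `hello_app` and `response_headers` are hypothetical helpers made up for this illustration.

```python
from wsgiref.util import setup_testing_defaults


def deny_framing(app):
    """Wrap a WSGI app so that every response asks browsers not to frame it."""
    def wrapped(environ, start_response):
        def patched_start(status, headers, exc_info=None):
            # Append both headers; older browsers read X-Frame-Options,
            # newer ones prefer the CSP frame-ancestors directive.
            extra = [
                ("X-Frame-Options", "DENY"),
                ("Content-Security-Policy", "frame-ancestors 'none'"),
            ]
            return start_response(status, list(headers) + extra, exc_info)
        return app(environ, patched_start)
    return wrapped


def hello_app(environ, start_response):
    """A tiny stand-in application (hypothetical, for the demo only)."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


def response_headers(app):
    """Call a WSGI app once in-process and return its headers as a dict."""
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured.update(headers)

    environ = {}
    setup_testing_defaults(environ)
    b"".join(app(environ, start_response))
    return captured


headers = response_headers(deny_framing(hello_app))
print(headers)
```

With headers like these in place, a page that embeds the site in an `<iframe>` should see the browser block the frame, much like the WhatsApp Web example above.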

Sunday, September 20, 2020

Web Security - Prevent Website from opening in an IFrame

Setting up Ubuntu 20.04 for Flutter based Android app development

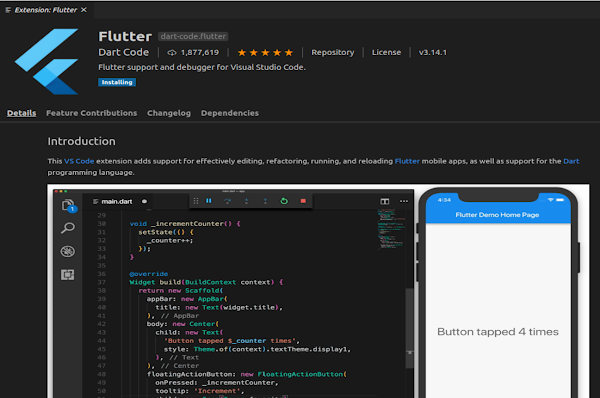

1. Install Git.

    $ sudo apt install git

2. Create a directory where we download 'flutter':

    (base) ashish@ashish-VirtualBox:~/Desktop/ws/programfiles/flutter$ pwd
    /home/ashish/Desktop/ws/programfiles/flutter_box

3. Download 'flutter':

    $ pwd
    /home/ashish/Desktop/ws/programfiles/flutter_box
    $ git clone https://github.com/flutter/flutter.git

4. Add the flutter tool to your path:

    $ export PATH="$PATH:`pwd`/flutter/bin"

   OR update this in the "~/.bashrc" file:

    $ nano ~/.bashrc
    $ source ~/.bashrc

5. Optionally, pre-download development binaries. The flutter tool downloads platform-specific development binaries as needed. For scenarios where pre-downloading these artifacts is preferable (for example, in hermetic build environments, or with intermittent network availability), iOS and Android binaries can be downloaded ahead of time by running:

    $ flutter precache

6. Install "Android SDK" from 'Terminal'.

    $ sudo apt update && sudo apt install android-sdk

7. Install "Android Studio" from "Ubuntu Software".

8. When you launch 'Android Studio' for the first time, it gives the prompt for 'Import Android Studio Settings'. Set it to "Do not import 'Settings'."

9. It will next launch the 'Android Studio Setup Wizard'.

10. Default JDK location:

11. Next, it downloads SDK components:

12. Prompt for 'Emulator Settings for Hardware Acceleration'

13. Update Android license status. Run `flutter doctor --android-licenses` to accept the SDK licenses. See https://flutter.dev/docs/get-started/install/linux#android-setup for more details.

    $ flutter doctor --android-licenses

14. Launch "Settings" as shown below. Then go to "Plugins". If we launch the installation of the 'Flutter' plugin, it automatically prompts for the installation of 'Dart'. Then, give 'Android Studio' a restart.

15. Installing 'Flutter Extension' in Visual Studio Code. Go to 'Extensions' as shown below and search for 'flutter'. ...

16. Test installation:

    (base) ashish@ashish-VirtualBox:~/.../flutter_box$ flutter doctor
    Doctor summary (to see all details, run flutter doctor -v):
    [✓] Flutter (Channel master, 1.22.0-10.0.pre.264, on Linux, locale en_IN)
    [✓] Android toolchain - develop for Android devices (Android SDK version 30.0.2)
    [✓] Android Studio (version 4.0)
    [✓] VS Code (version 1.49.1)
    [!] Connected device
        ! No devices available

    ! Doctor found issues in 1 category.

17. Common issues that we notice from 'flutter doctor':

    As of Flutter’s 1.19.0 dev release, the Flutter SDK contains the dart command alongside the flutter command so that you can more easily run Dart command-line programs. Downloading the Flutter SDK also downloads the compatible version of Dart, but if you’ve downloaded the Dart SDK separately, make sure that the Flutter version of dart is first in your path, as the two versions might not be compatible.

    $ flutter doctor
    Doctor summary (to see all details, run flutter doctor -v):

    17.1. [!] Android toolchain - develop for Android devices (Android SDK version 27.0.1)
          ✗ Flutter requires Android SDK 29 and the Android BuildTools 28.0.3
            To update the Android SDK visit Flutter.dev: Android Setup on Linux for detailed instructions.

    17.2. ✗ Android license status unknown.
            Run `flutter doctor --android-licenses` to accept the SDK licenses.
            See Flutter.dev: Android Setup on Linux for more details.

    17.3. ✗ Android licenses not accepted. To resolve this, run: flutter doctor --android-licenses

    17.4. [!] Android Studio (not installed)

    17.5. [!] Android Studio (version 4.0)
          ✗ Flutter plugin not installed; this adds Flutter specific functionality.

    17.6. [!] Android Studio (version 4.0)
          ✗ Dart plugin not installed; this adds Dart specific functionality.

    17.7. [!] VS Code (version 1.49.1)
          ✗ Flutter extension not installed; install from https://marketplace.visualstudio.com/items?itemName=Dart-Code.flutter

    17.8. [!] Connected device
          ! No devices available

    ! Doctor found issues in 4 categories.

Dated: Sep 2020
Ref: https://flutter.dev/docs/get-started/install/linux

Thursday, September 17, 2020

Binomial Probability Distribution (visualization using Seaborn)

Binomial Probability Distribution

"pmf" is "Probability Mass Function" or "Probability Distribution". "rv" is "Random Variable". Note: Binomial Distribution is a Discrete Distribution.

Visualization of Binomial Distribution

Difference Between Normal and Binomial Distribution

The main difference is that the normal distribution is continuous whereas the binomial is discrete, but if there are enough data points it will be quite similar to a normal distribution with a certain loc and scale.

We have code that produces overlapped "Normal" and "Binomial" distributions. We will show some of the best and some of the worst overlaps.

    # Imports needed by the snippet below.
    from numpy import random
    import matplotlib.pyplot as plt
    import seaborn as sns

    number_of_trials = 150

    # Draw both distributions for increasing sample sizes and overlay them.
    for s in range(1000, 100000000, 1000000):
        print("size:", s)
        sns.distplot(random.binomial(n = number_of_trials, p=0.5, size=s), hist=False, label='binomial')
        sns.distplot(random.normal(loc = number_of_trials / 2, scale=5, size=s), hist=False, label='normal')
        plt.show()

Best Overlaps

Worst Overlaps

References
% numpy.org
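To see why "a certain loc and scale" matters, the overlap can also be checked without sampling. The sketch below uses only the standard library and compares the exact binomial pmf for n = 150, p = 0.5 against two normal curves: one with the matching standard deviation sqrt(n·p·(1−p)) ≈ 6.12, and one with scale = 5 as in the listing above. The helper names (`binomial_pmf`, `normal_pdf`, `max_gap`) are made up for this illustration.

```python
import math


def binomial_pmf(k, n, p):
    """Exact probability of k successes in n Bernoulli(p) trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def normal_pdf(x, mu, sigma):
    """Density of a normal distribution with mean mu and std sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


n, p = 150, 0.5
mu = n * p                           # mean of the binomial
sigma = math.sqrt(n * p * (1 - p))   # std of the binomial


def max_gap(scale):
    """Largest pointwise gap between the pmf and a normal pdf with this scale."""
    return max(
        abs(binomial_pmf(k, n, p) - normal_pdf(k, mu, scale))
        for k in range(n + 1)
    )


total_probability = sum(binomial_pmf(k, n, p) for k in range(n + 1))
gap_matched = max_gap(sigma)
gap_scale5 = max_gap(5)

print("sigma:", round(sigma, 4))
print("gap with matched scale:", gap_matched)
print("gap with scale=5:", gap_scale5)
```

The matched scale should give the noticeably smaller gap, which suggests that part of the mismatch in the plots comes from the fixed `scale=5` rather than from the sample size alone.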

Improving a Classifier (ML) Using Snorkel's Slicing Technique

The dataset we are using is the '150 datapoints strong' Iris flower species dataset (Download from here).

We have a dependency here to draw the confusion matrix. The code file name is: DrawConfusionMatrix.py

Content:

    # Ref: Scikit-Learn
    import itertools
    import numpy as np
    import matplotlib.pyplot as plt
    import seaborn as sns
    from sklearn import svm, datasets
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import confusion_matrix

    def plot_confusion_matrix(cm, classes, normalize = False, title = 'Confusion matrix',
                              cmap = plt.cm.Blues, use_seaborn = False):
        """
        This function prints and plots the confusion matrix.
        Normalization can be applied by setting `normalize=True`.
        """
        if normalize:
            cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
            print("Normalized confusion matrix")
        else:
            print('Confusion matrix, without normalization')
        print(cm)

        if use_seaborn == False:
            plt.imshow(cm, interpolation='nearest', cmap=cmap)
            plt.colorbar()
            fmt = '.2f' if normalize else 'd'
            thresh = cm.max() / 2.
            for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
                plt.text(j, i, format(cm[i, j], fmt), horizontalalignment="center",
                         color="white" if cm[i, j] > thresh else "black")
            tick_marks = np.arange(len(classes) + 0)
        else:
            ax = sns.heatmap(cm, annot=True, fmt='d') # notation: "annot" not "annote"
            # fmt='d': print values as decimals
            bottom, top = ax.get_ylim()
            ax.set_ylim(bottom + 0.5, top - 0.5)
            tick_marks = np.arange(len(classes) + 1)

        plt.title(title)
        plt.xticks(tick_marks, classes, rotation=45)
        plt.yticks(tick_marks, classes)
        plt.ylabel('True label')
        plt.xlabel('Predicted label')

Now, the main problem:

    # Import libraries.
    import DrawConfusionMatrix as dcm
    import importlib # The imp module was deprecated in Python 3.4 in favor of the importlib module.
    importlib.reload(dcm)

    import pandas as pd
    import numpy as np
    from collections import Counter
    from snorkel.augmentation import transformation_function
    from snorkel.augmentation import RandomPolicy
    from snorkel.augmentation import PandasTFApplier
    from sklearn import svm
    from sklearn.metrics import accuracy_score
    from sklearn.metrics import confusion_matrix

    df = pd.read_csv('datasets_19_420_Iris.csv')

    for i in set(df.Species):
        # ['count', 'mean', 'std', 'min', '25%', '50%', '75%', 'max']
        print(i)
        print(df[df.Species == i].describe().loc[['mean', 'std'], :], '\n')

Output:

    Iris-versicolor
                Id  SepalLengthCm  SepalWidthCm  PetalLengthCm  PetalWidthCm
    mean  75.50000       5.936000      2.770000       4.260000      1.326000
    std   14.57738       0.516171      0.313798       0.469911      0.197753

    Iris-virginica
                 Id  SepalLengthCm  SepalWidthCm  PetalLengthCm  PetalWidthCm
    mean  125.50000        6.58800      2.974000       5.552000       2.02600
    std    14.57738        0.63588      0.322497       0.551895       0.27465

    Iris-setosa
                Id  SepalLengthCm  SepalWidthCm  PetalLengthCm  PetalWidthCm
    mean  25.50000        5.00600      3.418000       1.464000       0.24400
    std   14.57738        0.35249      0.381024       0.173511       0.10721

Next, we generate new data points per class by sampling each feature from a normal distribution with that class's mean and standard deviation:

    features = ['SepalLengthCm', 'SepalWidthCm', 'PetalLengthCm', 'PetalWidthCm']
    classes = ['Iris-setosa', 'Iris-virginica', 'Iris-versicolor']

    desc_dict = {}
    for i in classes:
        desc_dict[i] = df[df.Species == i].describe()

    df['Train'] = 'Train'

    # random.randint returns a random integer N such that a <= N <= b
    @transformation_function(pre = [])
    def get_new_instance_for_this_class(x):
        x.SepalLengthCm = np.random.normal(round(desc_dict[x.Species].loc[['mean'], ['SepalLengthCm']].iloc[0,0], 2) * 100,
                                           round(desc_dict[x.Species].loc[['std'], ['SepalLengthCm']].iloc[0,0], 2) * 100) / 100
        x.SepalWidthCm = np.random.normal(round(desc_dict[x.Species].loc[['mean'], ['SepalWidthCm']].iloc[0,0], 2) * 100,
                                          round(desc_dict[x.Species].loc[['std'], ['SepalWidthCm']].iloc[0,0], 2) * 100) / 100
        x.PetalLengthCm = np.random.normal(round(desc_dict[x.Species].loc[['mean'], ['PetalLengthCm']].iloc[0,0], 2) * 100,
                                           round(desc_dict[x.Species].loc[['std'], ['PetalLengthCm']].iloc[0,0], 2) * 100) / 100
        x.PetalWidthCm = np.random.normal(round(desc_dict[x.Species].loc[['mean'], ['PetalWidthCm']].iloc[0,0], 2) * 100,
                                          round(desc_dict[x.Species].loc[['std'], ['PetalWidthCm']].iloc[0,0], 2) * 100) / 100
        x.Train = 'Test'
        return x

    tfs = [ get_new_instance_for_this_class ]

    random_policy = RandomPolicy(
        len(tfs), sequence_length=2, n_per_original=5, keep_original=True
        # n_per_original (int) – Number of transformed data points per original
    )

    tf_applier = PandasTFApplier(tfs, random_policy)
    df_train_augmented = tf_applier.apply(df)

    print(f"Original training set size: {len(df)}")
    print(f"Augmented training set size: {len(df_train_augmented)}")

Output:

    Original training set size: 150
    Augmented training set size: 900

The generated rows (marked 'Test') are used for evaluation:

    # NOTE: `clf` must already be a fitted classifier at this point;
    # the code that creates and fits it is not part of this listing.
    df_test = df_train_augmented[df_train_augmented.Train == 'Test']
    pred = clf.predict(df_test[features])
    pred_probs = clf.predict_proba(df_test[features])
    # Make Note Of >> AttributeError: predict_proba is not available when 'probability=False'

    print(Counter(pred))
    print("Accuracy: {:.3f}".format(accuracy_score(df_test['Species'], pred)))
    cm = confusion_matrix(df_test['Species'], pred)
    print("Confusion matrix:\n{}".format(cm))

Output:

    Counter({'Iris-versicolor': 252, 'Iris-setosa': 250, 'Iris-virginica': 248})
    Accuracy: 0.968
    Confusion matrix:
    [[250   0   0]
     [  0 239  11]
     [  0  13 237]]

    classes = ['setosa', 'versicolor', 'virginica']
    dcm.plot_confusion_matrix(cm, classes = classes, use_seaborn = True)

# This plot is for 'Support Vector Machine' based classifier.

# This plot is for 'Random Forest' based classifier.

Here we see that there are some misclassified data points for the classes 'Versicolor' and 'Virginica'. 'Setosa' has not been misclassified by either SVM or RandomForest.

Next, we slice the dataframe into 'setosa' and 'not setosa' dataframes. Because we are not having issues with 'setosa' data points, we re-train a classifier on the other two classes, viz. 'versicolor' and 'virginica'.

    import re
    from snorkel.slicing import slicing_function

    @slicing_function()
    def not_setosa(x):
        return x.Species != 'Iris-setosa'

    sfs = [not_setosa]

    # ~ ~ ~

    # Store slice metadata in S
    from snorkel.slicing import PandasSFApplier
    applier = PandasSFApplier(sfs)
    S_test = applier.apply(df_test)

    # ~ ~ ~

    from snorkel.analysis import Scorer
    scorer = Scorer(metrics=["f1_micro", "f1_macro"])
    # Make Note Of >> ValueError: f1 not supported for multiclass.
    # Try f1_micro or f1_macro instead.

    # ~ ~ ~

    from sklearn import preprocessing
    le = preprocessing.LabelEncoder()
    le.fit(df_test['Species'])

    scorer.score_slices(
        S=S_test,
        golds=le.transform(df_test['Species']),
        preds=le.transform(pred),
        probs=pred_probs,
        as_dataframe=True
    )

    from snorkel.slicing import slice_dataframe
    df_not_setosa = slice_dataframe(df_train_augmented, not_setosa)

    from sklearn.ensemble import RandomForestClassifier
    rfc = RandomForestClassifier(max_depth=4, random_state=0, n_estimators = 100)
    # The fit call is missing from the original listing; it is restored here
    # by analogy with the SVC code further below (an assumption).
    rfc.fit(df_not_setosa[features], df_not_setosa['Species'])

Output:

    RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini',
        max_depth=4, max_features='auto', max_leaf_nodes=None,
        min_impurity_decrease=0.0, min_impurity_split=None,
        min_samples_leaf=1, min_samples_split=2,
        min_weight_fraction_leaf=0.0, n_estimators=100, n_jobs=None,
        oob_score=False, random_state=0, verbose=0, warm_start=False)

    df_test_rfc = df_not_setosa[df_not_setosa.Train == 'Test']
    pred_rfc = rfc.predict(df_test_rfc[features])

    print(Counter(pred_rfc))
    print("Accuracy: {:.3f}".format(accuracy_score(df_test_rfc['Species'], pred_rfc)))
    cm = confusion_matrix(df_test_rfc['Species'], pred_rfc)
    print("Confusion matrix:\n{}".format(cm))

Output:

    Counter({'Iris-versicolor': 251, 'Iris-virginica': 249})
    Accuracy: 0.990
    Confusion matrix:
    [[248   2]
     [  3 247]]

    dcm.plot_confusion_matrix(cm, classes = ['versicolor', 'virginica'], use_seaborn = True)

Using RandomForestClassifier on sliced dataset:

We also have the score for SVC; it is not as good as RandomForestClassifier:

    svc = svm.SVC(gamma = 'auto', probability=True)
    svc.fit(df_not_setosa[features], df_not_setosa['Species'])

Output:

    SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,
        decision_function_shape='ovr', degree=3, gamma='auto', kernel='rbf',
        max_iter=-1, probability=True, random_state=None, shrinking=True,
        tol=0.001, verbose=False)

    pred_svc = svc.predict(df_test_rfc[features])

    print(Counter(pred_svc))
    print("Accuracy: {:.3f}".format(accuracy_score(df_test_rfc['Species'], pred_svc)))
    cm = confusion_matrix(df_test_rfc['Species'], pred_svc)
    print("Confusion matrix:\n{}".format(cm))

Output:

    Counter({'Iris-versicolor': 251, 'Iris-virginica': 249})
    Accuracy: 0.986
    Confusion matrix:
    [[247   3]
     [  4 246]]

Reference
% Slice-based Learning: a Programming Model for Residual Learning in Critical Data Slices
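The accuracies quoted above can be re-derived directly from the printed confusion matrices, which also gives a like-for-like number for the 'not setosa' slice before re-training. The sketch below uses plain Python lists; the matrices are copied from the outputs above, while the `accuracy` helper and variable names are made up for this illustration. Restricting the 3×3 matrix to the last two rows and columns is valid here only because no setosa point was confused with another class.

```python
def accuracy(cm):
    """Fraction of points on the diagonal of a square confusion matrix."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


# Confusion matrices copied from the outputs above
# (rows = true label, columns = predicted label).
cm_all_classes = [[250, 0, 0], [0, 239, 11], [0, 13, 237]]
cm_rfc_sliced = [[248, 2], [3, 247]]
cm_svc_sliced = [[247, 3], [4, 246]]

# Restrict the first classifier to the versicolor/virginica slice.
cm_slice_before = [row[1:] for row in cm_all_classes[1:]]

acc_all = accuracy(cm_all_classes)
acc_slice_before = accuracy(cm_slice_before)
acc_rfc = accuracy(cm_rfc_sliced)
acc_svc = accuracy(cm_svc_sliced)

print(f"all classes, first classifier : {acc_all:.3f}")
print(f"slice, first classifier       : {acc_slice_before:.3f}")
print(f"slice, re-trained RandomForest: {acc_rfc:.3f}")
print(f"slice, re-trained SVC         : {acc_svc:.3f}")
```

On these numbers the slice accuracy moves from 0.952 (first classifier) to 0.990 with the re-trained RandomForest and 0.986 with the re-trained SVC.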

Wednesday, September 16, 2020

Snorkel's Analysis Package Overview (v0.9.6, Sep 2020)

Current version of Snorkel is v0.9.6 (as on 16-Sep-2020). Link to GitHub

Snorkel has 8 packages. Package Reference:

1. Snorkel Analysis Package
2. Snorkel Augmentation Package
3. Snorkel Classification Package
4. Snorkel Labeling Package
5. Snorkel Map Package
6. Snorkel Preprocess Package
7. Snorkel Slicing Package
8. Snorkel Utils Package

What is Snorkel's Analysis Package for?

This package deals with how to interpret classification results. It contains generic model analysis utilities shared across Snorkel.

1: Scorer

Calculate one or more scores from user-specified and/or user-defined metrics. This defines a class 'Scorer' with two methods: 'score()' and 'score_slices()'. You have to specify input arguments such as metrics (this is related to the 'metric_score()' discussed below), true labels, predicted labels and predicted probabilities. It is through this class that we make use of the code in 'metrics.py'.

Code Snippet:

~~~ ~~~ ~~~

2: get_label_buckets

Return data point indices bucketed by label combinations. This is a function written in the error_analysis.py file.

Code:

    import snorkel
    import numpy as np
    from snorkel.analysis import get_label_buckets

    print("Snorkel version:", snorkel.__version__)

    Snorkel version: 0.9.3

A common use case is calling ``buckets = get_label_buckets(Y_gold, Y_pred)`` where ``Y_gold`` is a set of gold (i.e. ground truth) labels and ``Y_pred`` is a corresponding set of predicted labels.

    Y_gold = np.array([1, 1, 1, 0, 0, 0, 1])
    Y_pred = np.array([1, 1, -1, -1, 1, 0, 1])
    buckets = get_label_buckets(Y_gold, Y_pred)
    # If gold and pred have different number of elements >> ValueError: Arrays must all have the same number of elements

The returned ``buckets[(i, j)]`` is a NumPy array of data point indices with true label i and predicted label j. More generally, the returned indices within each bucket refer to the order of the labels that were passed in as function arguments.

    print(buckets[(1, 1)]) # true positives (gold and pred are both 1)
    Out: array([0, 1, 6])

    buckets[(0, 0)] # true negatives (gold and pred are both 0)
    Out: array([5])

    # false negatives (1, 0), false positives (0, 1) and true negatives (0, 0)
    print((1, 0) in buckets, '/', (0, 1) in buckets, '/', (0, 0) in buckets)
    Out: False / True / True

    buckets[(1, -1)] # abstained positives
    Out: array([2])

    buckets[(0, -1)] # abstained negatives
    Out: array([3])

~~~ ~~~ ~~~

3: metric_score()

Evaluate a standard metric on a set of predictions/probabilities. The code for metric_score() is in metrics.py. Using this you can evaluate a standard metric on a set of predictions (true labels and predicted labels) or probabilities.

Scores available are:

1. _coverage_score
2. _roc_auc_score
3. _f1_score
4. _f1_micro_score
5. _f1_macro_score

It is a wrapper around "sklearn.metrics" and adds to it by giving the above five metrics.

    METRICS = {
        "accuracy": Metric(sklearn.metrics.accuracy_score),
        "coverage": Metric(_coverage_score, ["preds"]),
        "precision": Metric(sklearn.metrics.precision_score),
        "recall": Metric(sklearn.metrics.recall_score),
        "f1": Metric(_f1_score, ["golds", "preds"]),
        "f1_micro": Metric(_f1_micro_score, ["golds", "preds"]),
        "f1_macro": Metric(_f1_macro_score, ["golds", "preds"]),
        "fbeta": Metric(sklearn.metrics.fbeta_score),
        "matthews_corrcoef": Metric(sklearn.metrics.matthews_corrcoef),
        "roc_auc": Metric(_roc_auc_score, ["golds", "probs"]),
    }
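To make the bucketing idea concrete without installing Snorkel, here is a small pure-Python sketch of the behaviour described for `get_label_buckets`. It is not Snorkel's implementation: the function name `label_buckets_sketch` is hypothetical, and it returns plain lists rather than NumPy arrays.

```python
from collections import defaultdict


def label_buckets_sketch(*label_lists):
    """Group data point indices by their combination of labels.

    Each argument is one sequence of labels (e.g. gold, predicted);
    all sequences must have the same length.
    """
    if len({len(labels) for labels in label_lists}) != 1:
        raise ValueError("Arrays must all have the same number of elements")

    buckets = defaultdict(list)
    for index, combination in enumerate(zip(*label_lists)):
        buckets[combination].append(index)
    return dict(buckets)


# Same labels as in the example above; -1 marks an abstain.
y_gold = [1, 1, 1, 0, 0, 0, 1]
y_pred = [1, 1, -1, -1, 1, 0, 1]

buckets = label_buckets_sketch(y_gold, y_pred)
for key in sorted(buckets):
    print(key, buckets[key])
```

Each key is a `(gold, predicted)` pair, so `(0, 1)` holds the false positives and a missing `(1, 0)` key means there were no false negatives.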

Monday, September 14, 2020

Starting With Selenium's Python Package (Installation)

We have a YAML file to set up our conda environment. The file 'selenium.yml' has contents:

    name: selenium
    channels:
      - conda-forge
      - defaults
    dependencies:
      - selenium
      - jupyterlab
      - ipykernel

To set up the environment, we run the commands:

    (base) CMD> conda env create -f selenium.yml
    (base) CMD> conda activate selenium

After that, if we want to see which packages got installed, we run the command:

    (selenium) CMD> conda env export

Next, we set up a kernel from this environment:

    (selenium) CMD> python -m ipykernel install --user --name selenium
    Installed kernelspec selenium in C:\Users\Ashish Jain\AppData\Roaming\jupyter\kernels\selenium

To view the list of kernels:

    (selenium) CMD> jupyter kernelspec list
    Available kernels:
      selenium    C:\Users\Ashish Jain\AppData\Roaming\jupyter\kernels\selenium
      python3     E:\programfiles\Anaconda3\envs\selenium\share\jupyter\kernels\python3

...

A basic piece of code would start the browser. We have tried and tested it for Chrome and Firefox. To do this, we need the web driver file or we get the following exception:

CODE:

    from selenium import webdriver
    import time
    from selenium.webdriver.common.keys import Keys

    driver = webdriver.Chrome()

ERROR:

    ----------------------------------------------------------------------
    FileNotFoundError                         Traceback (most recent call last)
    E:\programfiles\Anaconda3\envs\selenium\lib\site-packages\selenium\webdriver\common\service.py in start(self)
         71             cmd.extend(self.command_line_args())
    ---> 72             self.process = subprocess.Popen(cmd, env=self.env,
         73                                             close_fds=platform.system() != 'Windows',

    E:\programfiles\Anaconda3\envs\selenium\lib\subprocess.py in __init__(self, args, bufsize, executable, stdin, stdout, stderr, preexec_fn, close_fds, shell, cwd, env, universal_newlines, startupinfo, creationflags, restore_signals, start_new_session, pass_fds, encoding, errors, text)
        853
    --> 854             self._execute_child(args, executable, preexec_fn, close_fds,
        855                                 pass_fds, cwd, env,

    E:\programfiles\Anaconda3\envs\selenium\lib\subprocess.py in _execute_child(self, args, executable, preexec_fn, close_fds, pass_fds, cwd, env, startupinfo, creationflags, shell, p2cread, p2cwrite, c2pread, c2pwrite, errread, errwrite, unused_restore_signals, unused_start_new_session)
       1306             try:
    -> 1307                 hp, ht, pid, tid = _winapi.CreateProcess(executable, args,
       1308                                          # no special security

    FileNotFoundError: [WinError 2] The system cannot find the file specified

    During handling of the above exception, another exception occurred:

    WebDriverException                        Traceback (most recent call last)
    ...
    WebDriverException: Message: 'chromedriver' executable needs to be in PATH. Please see https://sites.google.com/a/chromium.org/chromedriver/home

We got the file from here: chromedriver.storage.googleapis.com For v86

    chromedriver_win32.zip ---> chromedriver.exe

Error for WebDriver and browser version mismatch:

    SessionNotCreatedException: Message: session not created: This version of ChromeDriver only supports Chrome version 86
    Current browser version is 85.0.4183.102 with binary path C:\Program Files (x86)\Google\Chrome\Application\chrome.exe

Download from here for Chrome v85: chromedriver.storage.googleapis.com For v85

One point to note about ChromeDriver as of September 2020: ChromeDriver only supports characters in the BMP (Basic Multilingual Plane). This is a known issue with the Chromium team, as ChromeDriver still doesn't support characters with a Unicode code point above FFFF. Hence it is not possible to send any character beyond FFFF via ChromeDriver, and any attempt to send characters from the SMP (Supplementary Multilingual Plane), such as emojis and many symbols, raises an error. Firefox, on the other hand, supports emojis sent via the 'send_keys()' method.

As of Unicode 13.0, the SMP comprises 134 blocks, including:

Archaic Greek and Other Left-to-right scripts:
Linear B Syllabary (10000–1007F)
Linear B Ideograms (10080–100FF).

~ ~ ~ ~ ~

If you are working with the Firefox browser, you need the Gecko WebDriver to be available on the Windows 'PATH' variable. Without the WebDriver file:

    FileNotFoundError: [WinError 2] The system cannot find the file specified
    WebDriverException: Message: 'geckodriver' executable needs to be in PATH.

Download the Gecko driver from here: GitHub Repo of Mozilla

The statement to launch the web browser will be:

    driver = webdriver.Firefox()

By default, browsers open in a partial-size window. To maximize the window:

    driver.maximize_window()

Now, we open a link:

    driver.get("http://survival8.blogspot.com/")
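Given the BMP limitation noted above for ChromeDriver, one possible workaround is to check or clean a string before passing it to 'send_keys()'. The sketch below is plain Python and does not touch Selenium itself; the helper names `is_bmp` and `strip_non_bmp` are made up for this illustration, and whether silently dropping characters is acceptable depends on your use case.

```python
def is_bmp(text):
    """Return True if every character is in the Basic Multilingual Plane."""
    return all(ord(ch) <= 0xFFFF for ch in text)


def strip_non_bmp(text, replacement=""):
    """Replace every character above U+FFFF with `replacement`."""
    return "".join(ch if ord(ch) <= 0xFFFF else replacement for ch in text)


plain = "hello"
with_emoji = "hi \U0001F600"      # U+1F600 is outside the BMP
with_linear_b = "\U00010000"      # first code point of Linear B Syllabary

print(is_bmp(plain), is_bmp(with_emoji), is_bmp(with_linear_b))
print(repr(strip_non_bmp(with_emoji)))
print(repr(strip_non_bmp(with_emoji, replacement="?")))
```

A cleaned string could then be sent with the usual element `send_keys()` call when driving Chrome, while the original string could still be used with Firefox.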

Wednesday, September 9, 2020

Sentiment Analysis using BERT, DistilBERT and ALBERT

We will do Sentiment Analysis using the code from this repo: GitHub

Check out the code from the above repository to get started.

For creating the Conda environment, we have a file "sentiment_analysis.yml" with content:

    name: e20200909
    channels:
      - defaults
      - conda-forge
      - pytorch
    dependencies:
      - pytorch
      - pandas
      - numpy
      - pip:
        - transformers==3.0.1
      - flask
      - flask_cors
      - scikit-learn
      - ipykernel

    (base) C:\>conda env create -f sentiment_analysis.yml

It will install the above mentioned dependencies and the nested dependencies.

    (base) C:\Users\Ashish Jain>conda env list
    # conda environments:
    #
    base          *  E:\programfiles\Anaconda3
    e20200909        E:\programfiles\Anaconda3\envs\e20200909
    env_py_36        E:\programfiles\Anaconda3\envs\env_py_36
    temp             E:\programfiles\Anaconda3\envs\temp
    temp202009       E:\programfiles\Anaconda3\envs\temp202009
    tf               E:\programfiles\Anaconda3\envs\tf

    (base) C:\Users\Ashish Jain>conda activate e20200909

    (e20200909) C:\Users\Ashish Jain>conda env export
    name: e20200909
    channels:
      - conda-forge
      - defaults
    dependencies:
      - _pytorch_select=0.1=cpu_0
      - backcall=0.2.0=py_0
      - blas=1.0=mkl
      - ca-certificates=2020.7.22=0
      - certifi=2020.6.20=py38_0
      - cffi=1.14.2=py38h7a1dbc1_0
      - click=7.1.2=py_0
      - colorama=0.4.3=py_0
      - decorator=4.4.2=py_0
      - flask=1.1.2=py_0
      - flask_cors=3.0.9=pyh9f0ad1d_0
      - icc_rt=2019.0.0=h0cc432a_1
      - intel-openmp=2019.4=245
      - ipykernel=5.3.4=py38h5ca1d4c_0
      - ipython=7.18.1=py38h5ca1d4c_0
      - ipython_genutils=0.2.0=py38_0
      - itsdangerous=1.1.0=py_0
      - jedi=0.17.2=py38_0
      - jinja2=2.11.2=py_0
      - joblib=0.16.0=py_0
      - jupyter_client=6.1.6=py_0
      - jupyter_core=4.6.3=py38_0
      - libmklml=2019.0.5=0
      - libsodium=1.0.18=h62dcd97_0
      - markupsafe=1.1.1=py38he774522_0
      - mkl=2019.4=245
      - mkl-service=2.3.0=py38hb782905_0
      - mkl_fft=1.1.0=py38h45dec08_0
      - mkl_random=1.1.0=py38hf9181ef_0
      - ninja=1.10.1=py38h7ef1ec2_0
      - numpy=1.19.1=py38h5510c5b_0
      - numpy-base=1.19.1=py38ha3acd2a_0
      - openssl=1.1.1g=he774522_1
      - pandas=1.1.1=py38ha925a31_0
      - parso=0.7.0=py_0
      - pickleshare=0.7.5=py38_1000
      - pip=20.2.2=py38_0
      - prompt-toolkit=3.0.7=py_0
      - pycparser=2.20=py_2
      - pygments=2.6.1=py_0
      - python=3.8.5=h5fd99cc_1
      - python-dateutil=2.8.1=py_0
      - pytorch=1.6.0=cpu_py38h538a6d7_0
      - pytz=2020.1=py_0
      - pywin32=227=py38he774522_1
      - pyzmq=19.0.1=py38ha925a31_1
      - scikit-learn=0.23.2=py38h47e9c7a_0
      - scipy=1.5.0=py38h9439919_0
      - setuptools=49.6.0=py38_0
      - six=1.15.0=py_0
      - sqlite=3.33.0=h2a8f88b_0
      - threadpoolctl=2.1.0=pyh5ca1d4c_0
      - tornado=6.0.4=py38he774522_1
      - traitlets=4.3.3=py38_0
      - vc=14.1=h0510ff6_4
      - vs2015_runtime=14.16.27012=hf0eaf9b_3
      - wcwidth=0.2.5=py_0
      - werkzeug=1.0.1=py_0
      - wheel=0.35.1=py_0
      - wincertstore=0.2=py38_0
      - zeromq=4.3.2=ha925a31_2
      - zlib=1.2.11=h62dcd97_4
      - pip:
        - chardet==3.0.4
        - filelock==3.0.12
        - idna==2.10
        - packaging==20.4
        - pyparsing==2.4.7
        - regex==2020.7.14
        - requests==2.24.0
        - sacremoses==0.0.43
        - sentencepiece==0.1.91
        - tokenizers==0.8.0rc4
        - tqdm==4.48.2
        - transformers==3.0.1
        - urllib3==1.25.10
    prefix: E:\programfiles\Anaconda3\envs\e20200909

    (e20200909) C:\Users\Ashish Jain>

Next, we run the 'analyser' code:

    (e20200909) C:\SentimentAnalysis-master>python analyze.py
    Please wait while the analyser is being prepared.
    Input sentiment to analyze: I am feeling good.
    Positive with probability 99%.
    Input sentiment to analyze: I am feeling bad.
    Negative with probability 99%.
    Input sentiment to analyze: I am Ashish.
    Positive with probability 81%.
    Input sentiment to analyze:

Next, we run it in the browser. We pass the same sentences as above. Here are the server logs:

    (e20200909) C:\SentimentAnalysis-master>python server.py
     * Serving Flask app "server" (lazy loading)
     * Environment: production
       WARNING: This is a development server. Do not use it in a production deployment.
       Use a production WSGI server instead.
     * Debug mode: off
     * Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)
    127.0.0.1 - - [09/Sep/2020 21:35:48] "GET / HTTP/1.1" 400 -
    127.0.0.1 - - [09/Sep/2020 21:35:48] "GET /favicon.ico HTTP/1.1" 404 -
    127.0.0.1 - - [09/Sep/2020 21:36:02] "GET /?text=hello HTTP/1.1" 200 -
    127.0.0.1 - - [09/Sep/2020 21:36:38] "GET /?text=shut%20up HTTP/1.1" 200 -
    127.0.0.1 - - [09/Sep/2020 21:36:50] "GET /?text=i%20am%20feeling%20good HTTP/1.1" 200 -
    127.0.0.1 - - [09/Sep/2020 21:36:54] "GET /?text=i%20am%20feeling%20bad HTTP/1.1" 200 -
    127.0.0.1 - - [09/Sep/2020 21:37:00] "GET /?text=i%20am%20ashish HTTP/1.1" 200 -

The browser screens:
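The request lines in the server log show the sentence being passed as a `text` query parameter with spaces encoded as `%20`, and a request without it answered with status 400. As a sketch of how a script (rather than the browser) could build the same URLs, the standard library is enough. The helper name `build_query_url` is hypothetical, and the response format of the server is not shown in the post, so the sketch stops at constructing the URL.

```python
from urllib.parse import quote, urlencode


def build_query_url(text, base="http://127.0.0.1:5000/"):
    """Build the GET URL for one sentence, encoding spaces as %20."""
    # quote_via=quote gives %20 for spaces (the default would give '+').
    return base + "?" + urlencode({"text": text}, quote_via=quote)


for sentence in ["hello", "i am feeling good", "i am ashish"]:
    print(build_query_url(sentence))
```

The resulting URL could then be fetched with `urllib.request.urlopen` or any HTTP client while `server.py` is running.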

Tuesday, September 8, 2020

2 X 2 Idempotent matrix

I had to provide an example of an idempotent matrix. That's the kind of matrix that yields itself when multiplied by itself, much like 0 and 1 in scalar multiplication (0 x 0 = 0 and 1 x 1 = 1).

It is not easy to predict the result of a matrix multiplication, especially for large matrices. So, instead of settling for the naïve method of guessing by trial and error, I explored the properties of a square matrix of order 2.

Since 0 cannot be divided by 0, I could not divide 0 by either term unless it was a non-zero term. Thus, I had two possibilities, which I called cases A and B.

As you can see, I could not use the elimination method in an advantageous manner for this case.

I couldn't get a unique solution in either case. That is because there are many possible square matrices that are idempotent. However, I am not comfortable assuming that every 2 X 2 idempotent matrix has one of only two possible numbers as its first and last elements.
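As a sketch of the algebra behind this (the exact definitions of cases A and B are not recoverable from the text, so the case split below is an assumption and may not match them), write a general 2 X 2 matrix with entries a, b, c, d and require that its square equals itself:

```latex
A = \begin{pmatrix} a & b \\ c & d \end{pmatrix},
\qquad
A^2 = \begin{pmatrix} a^2 + bc & b(a + d) \\ c(a + d) & bc + d^2 \end{pmatrix}

A^2 = A \iff
\begin{cases}
a^2 + bc = a \\
b(a + d) = b \\
c(a + d) = c \\
bc + d^2 = d
\end{cases}

% If b \neq 0 or c \neq 0, the middle equations force a + d = 1, so
d = 1 - a, \qquad bc = a - a^2 = a(1 - a)

% If b = c = 0, the remaining equations reduce to
a^2 = a, \quad d^2 = d \;\Longrightarrow\; a, d \in \{0, 1\}
```

Under this reading, the first and last elements are restricted to 0 and 1 only when bc = 0. For example, a = 2, b = 1, c = -2, d = -1 satisfies d = 1 - a and bc = a - a^2, so it gives an idempotent matrix whose diagonal entries are neither 0 nor 1.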

Others’ take on it

So given any 2 X 2 idempotent matrix and its first three elements, you can find the last element unequivocally with this formula.

Conclusion

I wonder if multiples of matrices that satisfy either case are also idempotent. Perhaps I will see if I can prove that in another post.

In the next lecture, Professor Venkata Ratnam suggested using the sure-shot approach of a zero matrix. And I was like, “Why didn’t I think of that?”
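A quick numerical check can address both the zero-matrix suggestion and the question about multiples. The sketch below uses plain nested lists; the helper names and the example matrix [[2, 1], [-2, -1]] are choices made for this illustration, not taken from the post.

```python
def matmul2(x, y):
    """Multiply two 2 x 2 matrices given as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def is_idempotent(m):
    """True if m multiplied by itself gives m again."""
    return matmul2(m, m) == m


def scale(m, factor):
    """Multiply every entry of m by a scalar factor."""
    return [[factor * entry for entry in row] for row in m]


zero = [[0, 0], [0, 0]]
identity = [[1, 0], [0, 1]]
example = [[2, 1], [-2, -1]]   # trace 1 and determinant 0

print("zero matrix :", is_idempotent(zero))
print("identity    :", is_idempotent(identity))
print("example     :", is_idempotent(example))
print("2 * example :", is_idempotent(scale(example, 2)))
```

The last check fails because squaring a multiple kA gives k²A, which equals kA only when k is 0 or 1 (or A is the zero matrix), so multiples of an idempotent matrix are not idempotent in general.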

Sunday, September 6, 2020

Setting up Conda Environment for Swagger and Scrapy based project

We have a file that reads "my_yml.yml":

    name: swagger2
    channels:
      - conda-forge
      - defaults
    dependencies:
      - beautifulsoup4
      - connexion
      - flask
      - flask_cors
      - scrapy

It will do these three things:

1. It will create an environment "swagger2".
2. For downloading packages, it will use the channels: "conda-forge" and "defaults"
3. The packages it will install are mentioned as "dependencies".

Checking our current environments:

    (base) C:\Users\Ashish Jain>conda env list
    # conda environments:
    base          *  E:\programfiles\Anaconda3
    env_py_36        E:\programfiles\Anaconda3\envs\env_py_36
    tf               E:\programfiles\Anaconda3\envs\tf

    (base) C:\experiment_with_conda>conda env create -f my_yml.yml
    Collecting package metadata (repodata.json): done
    Solving environment: done

    Downloading and Extracting Packages
    pysocks-1.7.1 | 27 KB | ### | 100%
    flask_cors-3.0.9 | 15 KB | ### | 100%
    chardet-3.0.4 | 189 KB | ### | 100%
    clickclick-1.2.2 | 9 KB | ### | 100%
    cssselect-1.1.0 | 18 KB | ### | 100%
    importlib-metadata-1 | 45 KB | ### | 100%
    attrs-20.2.0 | 41 KB | ### | 100%
    protego-0.1.16 | 2.6 MB | ### | 100%
    twisted-20.3.0 | 5.1 MB | ### | 100%
    pywin32-227 | 6.9 MB | ### | 100%
    pyrsistent-0.16.0 | 91 KB | ### | 100%
    beautifulsoup4-4.9.1 | 86 KB | ### | 100%
    connexion-2.7.0 | 51 KB | ### | 100%
    pyhamcrest-2.0.2 | 29 KB | ### | 100%
    libxslt-1.1.33 | 499 KB | ### | 100%
    libxml2-2.9.10 | 3.5 MB | ### | 100%
    incremental-17.5.0 | 14 KB | ### | 100%
    flask-1.1.2 | 70 KB | ### | 100%
    scrapy-2.3.0 | 640 KB | ### | 100%
    automat-20.2.0 | 30 KB | ### | 100%
    python-3.8.5 | 18.9 MB | ### | 100%
    bcrypt-3.2.0 | 41 KB | ### | 100%
    service_identity-18. | 12 KB | ### | 100%
    win_inet_pton-1.1.0 | 7 KB | ### | 100%
    cryptography-3.1 | 587 KB | ### | 100%
    libiconv-1.16 | 680 KB | ### | 100%
    jmespath-0.10.0 | 21 KB | ### | 100%
    markupsafe-1.1.1 | 29 KB | ### | 100%
    parsel-1.6.0 | 15 KB | ### | 100%
    constantly-15.1.0 | 9 KB | ### | 100%
    pydispatcher-2.0.5 | 12 KB | ### | 100%
    zope.interface-5.1.0 | 299 KB | ### | 100%
    pyasn1-modules-0.2.7 | 60 KB | ### | 100%
    hyperlink-20.0.1 | 42 KB | ### | 100%
    inflection-0.5.1 | 9 KB | ### | 100%
    pyasn1-0.4.8 | 53 KB | ### | 100%
    w3lib-1.22.0 | 21 KB | ### | 100%
    pathlib2-2.3.5 | 34 KB | ### | 100%
    jinja2-2.11.2 | 93 KB | ### | 100%
    setuptools-49.6.0 | 968 KB | ### | 100%
    queuelib-1.5.0 | 13 KB | ### | 100%
    itemloaders-1.0.2 | 14 KB | ### | 100%
    pyyaml-5.3.1 | 158 KB | ### | 100%
    soupsieve-2.0.1 | 30 KB | ### | 100%
    brotlipy-0.7.0 | 368 KB | ### | 100%
    wincertstore-0.2 | 13 KB | ### | 100%
    lxml-4.5.2 | 1.1 MB | ### | 100%
    cffi-1.14.1 | 227 KB | ### | 100%
    itsdangerous-1.1.0 | 16 KB | ### | 100%
    click-7.1.2 | 64 KB | ### | 100%
    certifi-2020.6.20 | 151 KB | ### | 100%
    python_abi-3.8 | 4 KB | ### | 100%
    zlib-1.2.11 | 126 KB | ### | 100%
    openapi-spec-validat | 23 KB | ### | 100%
    jsonschema-3.2.0 | 108 KB | ### | 100%
    itemadapter-0.1.0 | 10 KB | ### | 100%
    Preparing transaction: done
    Verifying transaction: done
    Executing transaction: done
    #
    # To activate this environment, use
    #
    #     $ conda activate swagger2
    #
    # To deactivate an active environment, use
    #
    #     $ conda deactivate

    (base) C:\experiment_with_conda>conda activate swagger2

    (swagger2) C:\experiment_with_conda>conda env export
    name: swagger2
    channels:
      - conda-forge
      - defaults
    dependencies:
      - attrs=20.2.0=pyh9f0ad1d_0
      - automat=20.2.0=py_0
      - bcrypt=3.2.0=py38h1e8a9f7_0
      - beautifulsoup4=4.9.1=py_1
      - brotlipy=0.7.0=py38h1e8a9f7_1000
      - ca-certificates=2020.6.20=hecda079_0
      - certifi=2020.6.20=py38h32f6830_0
      - cffi=1.14.1=py38hba49e27_0
      - chardet=3.0.4=py38h32f6830_1006
      - click=7.1.2=pyh9f0ad1d_0
      - clickclick=1.2.2=py_1
      - connexion=2.7.0=py_0
      - constantly=15.1.0=py_0
      - cryptography=3.1=py38hba49e27_0
      - cssselect=1.1.0=py_0
      - flask=1.1.2=pyh9f0ad1d_0
      - flask_cors=3.0.9=pyh9f0ad1d_0
      - hyperlink=20.0.1=pyh9f0ad1d_0
      - idna=2.10=pyh9f0ad1d_0
      - importlib-metadata=1.7.0=py38h32f6830_0
      - importlib_metadata=1.7.0=0
      - incremental=17.5.0=py_0
      - inflection=0.5.1=pyh9f0ad1d_0
      - itemadapter=0.1.0=py_0
      - itemloaders=1.0.2=py_0
      - itsdangerous=1.1.0=py_0
      - jinja2=2.11.2=pyh9f0ad1d_0
      - jmespath=0.10.0=pyh9f0ad1d_0
      - jsonschema=3.2.0=py38h32f6830_1
      - libiconv=1.16=he774522_0
      - libxml2=2.9.10=h1006b36_2
      - libxslt=1.1.33=h579f668_1
      - lxml=4.5.2=py38he3d0fc9_0
      - markupsafe=1.1.1=py38h9de7a3e_1
      - openapi-spec-validator=0.2.9=pyh9f0ad1d_0
      - openssl=1.1.1g=he774522_1
      - parsel=1.6.0=py_0
      - pathlib2=2.3.5=py38h32f6830_1
      - pip=20.2.2=py_0
      - protego=0.1.16=py_0
      - pyasn1=0.4.8=py_0
      - pyasn1-modules=0.2.7=py_0
      - pycparser=2.20=pyh9f0ad1d_2
      - pydispatcher=2.0.5=py_1
      - pyhamcrest=2.0.2=py_0
      - pyopenssl=19.1.0=py_1
      - pyrsistent=0.16.0=py38h9de7a3e_0
      - pysocks=1.7.1=py38h32f6830_1
      - python=3.8.5=h60c2a47_7_cpython
      - python_abi=3.8=1_cp38
      - pywin32=227=py38hfa6e2cd_0
      - pyyaml=5.3.1=py38h9de7a3e_0
      - queuelib=1.5.0=pyh9f0ad1d_0
      - requests=2.24.0=pyh9f0ad1d_0
      - scrapy=2.3.0=py38h32f6830_0
      - service_identity=18.1.0=py_0
      - setuptools=49.6.0=py38h32f6830_0
      - six=1.15.0=pyh9f0ad1d_0
      - soupsieve=2.0.1=py_1
      - sqlite=3.33.0=he774522_0
      - twisted=20.3.0=py38h9de7a3e_0
      - urllib3=1.25.10=py_0
      - vc=14.1=h869be7e_1
      - vs2015_runtime=14.16.27012=h30e32a0_2
      - w3lib=1.22.0=pyh9f0ad1d_0
      - werkzeug=1.0.1=pyh9f0ad1d_0
      - wheel=0.35.1=pyh9f0ad1d_0
      - win_inet_pton=1.1.0=py38_0
      - wincertstore=0.2=py38_1003
      - yaml=0.2.5=he774522_0
      - zipp=3.1.0=py_0
      - zlib=1.2.11=h62dcd97_1009
      - zope.interface=5.1.0=py38h9de7a3e_0
    prefix: E:\programfiles\Anaconda3\envs\swagger2

    (swagger2) C:\experiment_with_conda>conda deactivate

    (base) C:\experiment_with_conda>conda env remove --name swagger2

    Remove all packages in environment E:\programfiles\Anaconda3\envs\swagger2:

Alternatively:
conda remove --name myenv --all

(base) C:\experiment_with_conda>conda info --envs

# conda environments:

#

base * E:\programfiles\Anaconda3

env_py_36 E:\programfiles\Anaconda3\envs\env_py_36

tf E:\programfiles\Anaconda3\envs\tf

Ref: conda.io

Saturday, September 5, 2020

Prediction of Nifty50 index using LSTM based model

Here we will use LSTM layers to develop a time series forecasting model for predicting the closing value of the Nifty50 index.

Our environment:

(py383) ashish@ashish-VirtualBox:~/Desktop$ conda list keras

# packages in environment at /home/ashish/anaconda3/envs/py383:

#

# Name Version Build Channel

keras 2.4.3 pypi_0 pypi

keras-preprocessing 1.1.2 pypi_0 pypi

(py383) ashish@ashish-VirtualBox:~/Desktop$ conda list tensorflow

# packages in environment at /home/ashish/anaconda3/envs/py383:

#

# Name Version Build Channel

tensorflow 2.2.0 pypi_0 pypi

tensorflow-estimator 2.2.0 pypi_0 pypi

(py383) ashish@ashish-VirtualBox:~/Desktop$ conda list matplotlib

# packages in environment at /home/ashish/anaconda3/envs/py383:

#

# Name Version Build Channel

matplotlib 3.2.2 0

matplotlib-base 3.2.2 py38hef1b27d_0

(py383) ashish@ashish-VirtualBox:~/Desktop$ conda list scikit-learn

# packages in environment at /home/ashish/anaconda3/envs/py383:

#

# Name Version Build Channel

scikit-learn 0.23.1 py38h423224d_0

(py383) ashish@ashish-VirtualBox:~/Desktop$ conda list seaborn

# packages in environment at /home/ashish/anaconda3/envs/py383:

#

# Name Version Build Channel

seaborn 0.10.1 py_0

Python Code:

from __future__ import print_function

import os

import sys

import pandas as pd

import numpy as np

%matplotlib inline

from matplotlib import pyplot as plt

import seaborn as sns

import datetime

from dateutil.parser import parse

from sklearn.metrics import mean_absolute_error

# Read the dataset

l = []

for i in os.listdir('files_2'):

    l.append(pd.read_csv(os.path.join('files_2', i)))

df = pd.concat(l, axis = 0)

We have data that looks like:

def convert_str_to_date(in_date):

    return parse(in_date)

df['Date'] = df['Date'].apply(convert_str_to_date)

df.sort_values(by = ['Date'], axis = 0, ascending = True, inplace = True, na_position = 'last')

df.reset_index(drop=True, inplace=True)

Gradient descent algorithms perform better (for example, converge faster) if the variables are within the range [-1, 1]. Many sources relax the boundary to [-3, 3]. The 'Close' variable is min-max scaled to bound the transformed variable within [0, 1].

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler(feature_range=(0, 1))

df['scaled_close'] = scaler.fit_transform(np.array(df['Close']).reshape(-1, 1))
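To make the scaling step concrete, here is a minimal sketch of the arithmetic behind min-max scaling to [0, 1] and its inverse. The helper names `minmax_scale` and `minmax_invert` and the price values are made up for illustration; this is not the scikit-learn implementation, only the same formula written out.

```python
import numpy as np

def minmax_scale(values):
    """Scale a 1-D array to [0, 1]; also return the (min, max) needed to invert."""
    values = np.asarray(values, dtype=float)
    lo, hi = values.min(), values.max()
    return (values - lo) / (hi - lo), (lo, hi)

def minmax_invert(scaled, bounds):
    """Map scaled values back to the original units."""
    lo, hi = bounds
    return np.asarray(scaled, dtype=float) * (hi - lo) + lo

# Hypothetical closing prices, not taken from the dataset
close = np.array([11000.0, 11250.0, 11500.0, 12000.0])
scaled, bounds = minmax_scale(close)
restored = minmax_invert(scaled, bounds)

print(scaled)    # smallest value maps to 0, largest to 1
print(restored)  # back in the original units
```

The inverse step is what later turns the model's scaled predictions back into index points.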

Before training the model, the dataset is split into two parts: a train set and a validation set. The neural network is trained on the train set. This means that computation of the loss function, back propagation and the weight updates made by a gradient descent algorithm are all done on the train set. The validation set is used to evaluate the model and to determine the number of epochs in model training. Increasing the number of epochs will further decrease the loss function on the train set but might not necessarily have the same effect on the validation set, due to overfitting on the train set. Hence, the number of epochs is controlled by keeping tabs on the loss function computed for the validation set. We use Keras with the TensorFlow backend to define and train the model. All the steps involved in model training and validation are done by calling the appropriate functions of the Keras API.

# Let's start by splitting the dataset into train and validation.

split_date = datetime.datetime(year=2020, month=8, day=1, hour=0)

df_train = df.loc[df['Date'] < split_date]

df_val = df.loc[df['Date'] >= split_date]

# Reset the indices of the validation set

df_val.reset_index(drop=True, inplace=True)

Now we need to generate regressors (X) and the target variable (y) for train and validation. A 2-D array of regressors and a 1-D array of targets are created from the original 1-D array of the column 'scaled_close' in the DataFrames. For the time series forecasting model, the past seven days of observations are used to predict the next day. This mirrors an AR(7) model, which also uses seven lags. We define a function which takes the original time series and the number of timesteps in the regressors as input and generates the arrays X and y.

The makeXy function is used to generate the arrays of regressors and targets: X_train, X_val, y_train and y_val. X_train and X_val, as generated by the makeXy function, are 2-D arrays of shape (number of samples, number of timesteps). However, the input to RNN layers must be of shape (number of samples, number of timesteps, number of features per timestep). In this case, we are dealing with only 'Close', hence the number of features per timestep is one. The number of timesteps is seven and the number of samples is the same as the number of samples in X_train and X_val, which are reshaped to 3-D arrays:

def makeXy(ts, nb_timesteps):

    """

    Input:

        ts: original time series

        nb_timesteps: number of time steps in the regressors

    Output:

        X: 2-D array of regressors

        y: 1-D array of target

    """

    X = []

    y = []

    for i in range(nb_timesteps, ts.shape[0]):

        X.append(list(ts.loc[i-nb_timesteps:i-1]))

        y.append(ts.loc[i])

    X, y = np.array(X), np.array(y)

    return X, y

X_train, y_train = makeXy(df_train['scaled_close'], 7)

X_val, y_val = makeXy(df_val['scaled_close'], 7)

#X_train and X_val are reshaped to 3D arrays

X_train, X_val = X_train.reshape((X_train.shape[0], X_train.shape[1], 1)), X_val.reshape((X_val.shape[0], X_val.shape[1], 1))
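The windowing and reshaping are easier to follow on a toy series. The sketch below is a simplified NumPy-only variant of the same idea (the helper name `make_windows` and the ten-point series are assumptions for illustration); it uses positional slicing rather than the label-based `.loc` slicing used in makeXy.

```python
import numpy as np

def make_windows(series, nb_timesteps):
    """Build sliding windows: each row of X holds nb_timesteps past values,
    and the matching entry of y is the value that follows them."""
    series = np.asarray(series, dtype=float)
    X = np.array([series[i - nb_timesteps:i]
                  for i in range(nb_timesteps, len(series))])
    y = series[nb_timesteps:]
    return X, y

# Hypothetical series of ten points: 0.0, 0.1, ..., 0.9
toy = np.arange(10) / 10.0
X, y = make_windows(toy, 7)

# Add the trailing "features per timestep" axis expected by recurrent layers
X3d = X.reshape((X.shape[0], X.shape[1], 1))

print(X.shape, y.shape, X3d.shape)
```

A series of n points yields n - 7 samples, which is why the first seven rows of the validation set have no prediction later on.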

Now we define the LSTM network using the Keras Functional API. In this approach, a layer is passed as the input to the following layer at the time the following layer is defined.

from keras.layers import Dense, Input, Dropout

from keras.layers.recurrent import LSTM

from keras.optimizers import SGD

from keras.models import Model

from keras.models import load_model

from keras.callbacks import ModelCheckpoint

#Define input layer which has shape (None, 7, 1) and of type float32. None indicates the number of instances

input_layer = Input(shape=(7,1), dtype='float32')

The LSTM layers are defined for seven timesteps. In this example, two LSTM layers are stacked. The first LSTM returns the output from each of the seven timesteps. This output is a sequence and is fed to the second LSTM, which returns output only from the last timestep. The first LSTM has sixty-four hidden neurons in each timestep. Hence the sequence returned by the first LSTM has sixty-four features per timestep.

lstm_layer1 = LSTM(64, input_shape=(7,1), return_sequences=True)(input_layer)

lstm_layer2 = LSTM(32, input_shape=(7,64), return_sequences=False)(lstm_layer1)

dropout_layer = Dropout(0.2)(lstm_layer2)

#Finally the output layer gives prediction.

output_layer = Dense(1, activation='linear')(dropout_layer)

The input, LSTM, dropout and output layers will now be packed inside a Model, which is a wrapper class for training and making predictions. Mean absolute error (MAE) is used as the loss function because, in the presence of outliers, absolute deviations fluctuate less than squared deviations.

The network's weights are optimized by the Adam algorithm. Adam stands for adaptive moment estimation and has been a popular choice for training deep neural networks. Unlike plain stochastic gradient descent, Adam uses a different effective learning rate for each weight and adapts it separately as training progresses. The learning rate of a weight is adapted based on exponentially weighted moving averages of the weight's gradients and squared gradients.
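As a rough illustration of the update rule described above, the sketch below applies Adam to a single scalar weight. The function name `adam_step`, the toy objective (w - 3)^2 and the hyperparameter values are assumptions chosen for the example; this is not the Keras implementation.

```python
import math

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update for a single weight.
    m and v are the moving averages of the gradient and the squared gradient;
    t is the 1-based step count used for bias correction."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)   # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)   # bias-corrected second moment
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

# The very first step has size close to lr, whatever the gradient's scale
w1, _, _ = adam_step(0.0, -6.0, 0.0, 0.0, 1, lr=0.01)

# Minimise the toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 3001):
    grad = 2 * (w - 3.0)
    w, m, v = adam_step(w, grad, m, v, t, lr=0.01)

print(w1, w)
```

Because the step is the ratio of the two moving averages, a weight with consistently large gradients does not automatically take larger steps than one with small gradients.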

ts_model = Model(inputs=input_layer, outputs=output_layer)

ts_model.compile(loss='mean_absolute_error', optimizer='adam')  # alternative optimizer: SGD(lr=0.001, decay=1e-5)

ts_model.summary()

Model: "model_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 7, 1)] 0

_________________________________________________________________

lstm (LSTM) (None, 7, 64) 16896

_________________________________________________________________

lstm_1 (LSTM) (None, 32) 12416

_________________________________________________________________

dropout (Dropout) (None, 32) 0

_________________________________________________________________

dense (Dense) (None, 1) 33

=================================================================

Total params: 29,345

Trainable params: 29,345

Non-trainable params: 0

_________________________________________________________________
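The parameter counts in the summary can be checked by hand. An LSTM layer has four gates, and each gate has input weights, recurrent weights and a bias. The sketch below (helper names `lstm_params` and `dense_params` are made up for this check) reproduces the numbers reported above.

```python
def lstm_params(units, input_dim):
    """Trainable parameters of a standard LSTM layer:
    4 gates x (input weights + recurrent weights + bias)."""
    return 4 * (units * (input_dim + units) + units)

def dense_params(units, input_dim):
    """Trainable parameters of a fully connected layer: weights + biases."""
    return units * input_dim + units

lstm1 = lstm_params(64, 1)    # first LSTM sees 1 feature per timestep
lstm2 = lstm_params(32, 64)   # second LSTM sees the 64 features from the first
dense = dense_params(1, 32)   # output layer on the 32-dimensional LSTM output
total = lstm1 + lstm2 + dense

print(lstm1, lstm2, dense, total)  # 16896 12416 33 29345
```

The Dropout layer contributes no parameters, which matches the 0 shown for it in the summary.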

The model is trained by calling the fit function on the model object and passing X_train and y_train. The training is done for a predefined number of epochs. Additionally, batch_size defines the number of samples of the train set to be used for one instance of back propagation. The validation dataset is also passed to evaluate the model after every epoch completes. A ModelCheckpoint object tracks the loss function on the validation set and saves the model at each epoch where the validation loss improves on the best value seen so far, so the last file saved corresponds to the epoch with the minimum validation loss.

save_weights_at = os.path.join('files_1', 'models', 'p5', 'p5_nifty50_LSTM_weights.{epoch:02d}-{val_loss:.4f}.hdf5')

save_best = ModelCheckpoint(save_weights_at, monitor='val_loss', verbose=0, save_best_only=True, save_weights_only=False, mode='min', period=1)

ts_model.fit(x=X_train, y=y_train, batch_size=16, epochs=30, verbose=1, callbacks=[save_best], validation_data=(X_val, y_val), shuffle=True)

WARNING:tensorflow:`period` argument is deprecated. Please use `save_freq` to specify the frequency in number of batches seen.

Epoch 1/30

381/381 [==============================] - 13s 33ms/step - loss: 0.0181 - val_loss: 0.0258

...

381/381 [==============================] - 10s 25ms/step - loss: 0.0175 - val_loss: 0.0384

[tensorflow.python.keras.callbacks.History at 0x7fed1c0a05b0]
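The save-on-improvement rule can be sketched in plain Python. The validation losses below are made-up numbers, not values from the training log above, and the helper names are assumptions for illustration; the sketch only mimics the bookkeeping, not the Keras callback itself.

```python
def epochs_that_save(val_losses):
    """Return the 1-based epochs at which a save-on-improvement rule
    (minimise the monitored loss) would write a new checkpoint."""
    saved, best = [], float('inf')
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            saved.append(epoch)
    return saved

def best_epoch(val_losses):
    """Return (1-based epoch, loss) for the minimum validation loss."""
    idx = min(range(len(val_losses)), key=lambda i: val_losses[i])
    return idx + 1, val_losses[idx]

# Hypothetical per-epoch validation losses
val_losses = [0.030, 0.020, 0.025, 0.010, 0.015, 0.040]

print(epochs_that_save(val_losses))  # [1, 2, 4]
print(best_epoch(val_losses))        # (4, 0.01)
```

In this toy run the train loss could keep falling after epoch 4, yet no further checkpoint would be written, because the validation loss never improves again.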

Predictions are made from the best saved model. The model's predictions, which are on the min-max scaled 'Close', are inverse transformed to get predictions on the original scale of 'Close'.

best_model = load_model(os.path.join('files_1', 'models', 'p5', 'p5_nifty50_LSTM_weights.12-0.0057.hdf5'))

preds = best_model.predict(X_val)

pred = scaler.inverse_transform(preds)

pred = np.squeeze(pred)

mae = mean_absolute_error(df_val['Close'].loc[7:], pred)

print('MAE for the validation set:', round(mae, 4))

MAE for the validation set: 65.7769

#Let's plot the actual and predicted values.

plt.figure(figsize=(5.5, 5.5))

plt.plot(range(len(df_val['Close'].loc[7:])), df_val['Close'].loc[7:], linestyle='-', marker='*', color='r')

plt.plot(range(len(df_val['Close'].loc[7:])), pred[:df_val.shape[0]], linestyle='-', marker='.', color='b')

plt.legend(['Actual','Predicted'], loc=2)

plt.title('Actual vs Predicted')

plt.ylabel('Close')

plt.xlabel('Index')

from sklearn.metrics import r2_score

r2 = r2_score(df_val['Close'].loc[7:], pred)

print('R-squared for the validation set:', round(r2,4))

R-squared for the validation set: 0.3702
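For reference, both metrics reported above are simple to compute by hand. The sketch below uses plain Python and made-up numbers (the function names `mae_score` and `r2` are assumptions for this example, not the scikit-learn functions). Note that the first seven validation rows serve only as inputs and have no prediction, which is why the code above compares against df_val['Close'].loc[7:].

```python
def mae_score(y_true, y_pred):
    """Mean absolute error: average of |actual - predicted|."""
    return sum(abs(a - p) for a, p in zip(y_true, y_pred)) / len(y_true)

def r2(y_true, y_pred):
    """R-squared: 1 - (residual sum of squares / total sum of squares)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((a - p) ** 2 for a, p in zip(y_true, y_pred))
    ss_tot = sum((a - mean) ** 2 for a in y_true)
    return 1 - ss_res / ss_tot

# Hypothetical actual and predicted values
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 12.0, 13.0, 17.0]

print(mae_score(actual, predicted))  # 0.75
print(round(r2(actual, predicted), 4))  # 0.85

# Always predicting the mean of the actual values gives an R-squared of 0
print(r2(actual, [13.0] * 4))  # 0.0
```

An R-squared of 0.3702 therefore means the model explains roughly 37% of the variance of the validation closes around their mean.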
