Installing a couple of packages

$ conda install sqlalchemy -c conda-forge $ conda install psycopg2 -c conda-forgeData Ingestion Via PostGRE SQL terminal

PostGRE is case insensitive: These two statements are same: 1: CREATE TABLE states_and_capitals( state_name TEXT NOT NULL, capital_name TEXT NOT NULL, PRIMARY KEY( state_name, capital_name ) ); 2: create table states_and_capitals (state_name text not null, capital_name text not null, primary key(state_name, capital_name)); postgres=# CREATE TABLE states_and_capitals( state_name TEXT NOT NULL, capital_name TEXT NOT NULL, PRIMARY KEY( state_name, capital_name ) ); CREATE TABLE postgres=# create table states_and_capitals (state_name text not null, capital_name text not null, primary key(state_name, capital_name)); ERROR: relation "states_and_capitals" already exists postgres=# postgres=# select * from states_and_capitals; state_name | capital_name ------------+-------------- (0 rows) You can also view your table in the output of this command: select * from information_schema.tables; INSERT INTO states_and_capitals (state_name, capital_name) VALUES ('haryana', 'chandigarh'); INSERT INTO states_and_capitals (state_name, capital_name) VALUES ('punjab', 'chandigarh'); Let's say you made a spelling mistake in a record. Then you can delete that record using the following statement: DELETE FROM states_and_capitals WHERE state_name = 'harayana'; postgres=# select * from states_and_capitals; state_name | capital_name ------------+-------------- punjab | chandigarh haryana | chandigarh (2 rows) postgres=# postgres=# \password postgres Enter new password for user "postgres": postgres Enter it again: postgres postgres=#Reading data from PostgreSQL into Plain Pandas

from sqlalchemy import create_engine import pandas as pd engine = create_engine('postgresql://postgres:postgres@localhost/postgres') df = pd.read_sql("""SELECT * FROM states_and_capitals""", engine) dfIssue while trying with pyspark.pandas

jdbc_engine = 'jdbc:postgresql://postgres:postgres@localhost/postgres' query = """SELECT * FROM states_and_capitals""" import pyspark.pandas as pspd from pyspark import SparkContext from pyspark.sql import SQLContext sc = SparkContext.getOrCreate() sqlCtx = SQLContext(sc) df_pspd = pspd.read_sql(query, jdbc_engine) Py4JJavaError: An error occurred while calling o48.load. : java.sql.SQLException: No suitable driver

Showing posts with label Database. Show all posts

Showing posts with label Database. Show all posts

Tuesday, December 13, 2022

Accessing PostgreSQL from PySpark.Pandas

PostgreSQL Installation on Ubuntu (2022 Dec 13)

Tags: Technology,Database,Contents of install_postgresql.sh

sudo sh -c 'echo "deb http://apt.postgresql.org/pub/repos/apt $(lsb_release -cs)-pgdg main" > /etc/apt/sources.list.d/pgdg.list' wget --quiet -O - https://www.postgresql.org/media/keys/ACCC4CF8.asc | sudo apt-key add - sudo apt-get update sudo apt-get -y install postgresql Ref: postgresql.org $ bash install_postgresql.shFind the file "pg_hba.conf"

(base) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ locate pg_hba.conf /etc/postgresql/15/main/pg_hba.conf /home/ashish/anaconda3/pkgs/libpq-12.9-h16c4e8d_3/share/pg_hba.conf.sample /home/ashish/anaconda3/share/pg_hba.conf.sample /usr/share/postgresql/15/pg_hba.conf.sampleEdit the file "pg_hba.conf"

$ sudo nano /etc/postgresql/15/main/pg_hba.conf $ sudo cat /etc/postgresql/15/main/pg_hba.conf ... # Database administrative login by Unix domain socket local all postgres md5 # TYPE DATABASE USER ADDRESS METHOD # "local" is for Unix domain socket connections only local all all trustTest the installed DB

$ sudo -i -u postgres postgres@ashish-Lenovo-ideapad-130-15IKB:~$ psql psql (15.1 (Ubuntu 15.1-1.pgdg22.10+1)) Type "help" for help. postgres=# \du List of roles Role name | Attributes | Member of -----------+------------------------------------------------------------+----------- postgres | Superuser, Create role, Create DB, Replication, Bypass RLS | {} postgres=# postgres=# SELECT CURRENT_DATE ; current_date -------------- 2022-12-13 (1 row) postgres=# select version(); version ----------------------------------------------------------------------------------------------------------------------------- PostgreSQL 15.1 (Ubuntu 15.1-1.pgdg22.10+1) on x86_64-pc-linux-gnu, compiled by gcc (Ubuntu 12.2.0-3ubuntu1) 12.2.0, 64-bit (1 row)

Saturday, October 15, 2022

MongoDB and Node.js Installation on Ubuntu (Oct 2022)

Tags: Technology,Database,JavaScript,Linux,Part 1: MongoDB

(base) ashish@ashishlaptop:~/Desktop$ sudo apt-get install -y mongodb-org=6.0.2 mongodb-org-database=6.0.2 mongodb-org-server=6.0.2 mongodb-org-mongos=6.0.2 mongodb-org-tools=6.0.2 Reading package lists... Done Building dependency tree... Done Reading state information... Done Some packages could not be installed. This may mean that you have requested an impossible situation or if you are using the unstable distribution that some required packages have not yet been created or been moved out of Incoming. The following information may help to resolve the situation: The following packages have unmet dependencies: mongodb-org-mongos : Depends: libssl1.1 (>= 1.1.1) but it is not installable mongodb-org-server : Depends: libssl1.1 (>= 1.1.1) but it is not installable E: Unable to correct problems, you have held broken packages. References 1) Install MongoDB (latest) on Ubuntu 2) Install MongoDB (v6.0) on Ubuntu 3) Install MongoDB (v5.0) on UbuntuResolution

Dated: 2022-Oct-14 MongoDb has no official build for ubuntu 22.04 at the moment. Ubuntu 22.04 has upgraded libssl to 3 and does not propose libssl1.1 You can force the installation of libssl1.1 by adding the ubuntu 20.04 source: $ echo "deb http://security.ubuntu.com/ubuntu focal-security main" | sudo tee /etc/apt/sources.list.d/focal-security.list $ sudo apt-get update $ sudo apt-get install libssl1.1 Then use your commands to install mongodb-org. Then delete the focal-security list file you just created: $ sudo rm /etc/apt/sources.list.d/focal-security.list [ Ref ]Part 2: Node.js

(base) ashish@ashishlaptop:~/Desktop/node$ node Command 'node' not found, but can be installed with: sudo apt install nodejs (base) ashish@ashishlaptop:~/Desktop/node$ sudo apt install nodejs (base) ashish@ashishlaptop:~/Desktop/node$ node -v v12.22.9 (base) ashish@ashishlaptop:~/Desktop/node$ npm Command 'npm' not found, but can be installed with: sudo apt install npm $ sudo apt install npm (base) ashish@ashishlaptop:~/Desktop/node$ npm -v 8.5.1Issue when MongoDB client is not installed

(base) ashish@ashishlaptop:~/Desktop/node$ node Welcome to Node.js v12.22.9. Type ".help" for more information. > var mongo = require('mongodb'); Uncaught Error: Cannot find module 'mongodb' Require stack: - <repl> at Function.Module._resolveFilename (internal/modules/cjs/loader.js:815:15) at Function.Module._load (internal/modules/cjs/loader.js:667:27) at Module.require (internal/modules/cjs/loader.js:887:19) at require (internal/modules/cjs/helpers.js:74:18) { code: 'MODULE_NOT_FOUND', requireStack: [ '<repl>' ] } > (base) ashish@ashishlaptop:~/Desktop/node$ npm install mongodb added 20 packages, and audited 21 packages in 35s 3 packages are looking for funding run `npm fund` for details found 0 vulnerabilitiesAfter mongoDB client has been installed

> var mongo = require('mongodb'); undefined >

Friday, October 14, 2022

Node.js and MongoDB Solved Interview Problem (Oct 2022)

Question: I'm making 2 queries (or a single query) to the database. What I want to achieve: If one of them is null, I want to add the value 'nil'. Example: field1: nil, field2: 'value' If both are null, then I want it to respond with the 'not found' message. What's a good approach for this? Answer:Tags: Technology,Database,JavaScript,First, we setup the local database.

Running a local instance

(base) ashish@ashishlaptop:~/Desktop$ mongosh Current Mongosh Log ID: 63498870b0f4b94029f7b626 Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000&appName=mongosh+1.6.0 Using MongoDB: 6.0.2 Using Mongosh: 1.6.0 For mongosh info see: https://docs.mongodb.com/mongodb-shell/ To help improve our products, anonymous usage data is collected and sent to MongoDB periodically (https://www.mongodb.com/legal/privacy-policy). You can opt-out by running the disableTelemetry() command. ------ The server generated these startup warnings when booting 2022-10-14T21:33:50.958+05:30: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine. See http://dochub.mongodb.org/core/prodnotes-filesystem 2022-10-14T21:33:52.643+05:30: Access control is not enabled for the database. Read and write access to data and configuration is unrestricted 2022-10-14T21:33:52.644+05:30: vm.max_map_count is too low ------ ------ Enable MongoDB's free cloud-based monitoring service, which will then receive and display metrics about your deployment (disk utilization, CPU, operation statistics, etc). The monitoring data will be available on a MongoDB website with a unique URL accessible to you and anyone you share the URL with. MongoDB may use this information to make product improvements and to suggest MongoDB products and deployment options to you. To enable free monitoring, run the following command: db.enableFreeMonitoring() To permanently disable this reminder, run the following command: db.disableFreeMonitoring() ------ test> test> db test test> show dbs admin 40.00 KiB config 12.00 KiB local 72.00 KiB test> test> test> db.createCollection("ccn1") { ok: 1 } test> db.ccn1.insertOne({"name":"Ashish Jain","address":"Delhi"}) { acknowledged: true, insertedId: ObjectId("634989627d163a41b75e1e13") } test> Next Command db.ccn1.insertMany([ {"name":"","address":"India"}, {"name":"","address":""}, {"name":"Ash","address":""} ]) test> db.ccn1.insertMany([ ... {"name":"","address":"India"}, ... {"name":"","address":""}, ... {"name":"Ash","address":""} ... ]) { acknowledged: true, insertedIds: { '0': ObjectId("634989cc7d163a41b75e1e14"), '1': ObjectId("634989cc7d163a41b75e1e15"), '2': ObjectId("634989cc7d163a41b75e1e16") } } test>Our Database Looks Like This

[ { _id: new ObjectId("634989627d163a41b75e1e13"), name: 'Ashish Jain', address: 'Delhi' }, { _id: new ObjectId("634989cc7d163a41b75e1e14"), name: '', address: 'India' }, { _id: new ObjectId("634989cc7d163a41b75e1e15"), name: '', address: '' }, { _id: new ObjectId("634989cc7d163a41b75e1e16"), name: 'Ash', address: '' } ]This is our Node.js code in file "script.js"

var MongoClient = require('mongodb').MongoClient; var url = "mongodb://localhost:27017/"; MongoClient.connect(url, function(err, db) { if (err) throw err; var dbo = db.db("test"); var all_docs = dbo.collection("ccn1").find({}).toArray(function(err, result) { if (err) throw err; for (i in result){ if(result[i]['name'] && result[i]['address']) { console.log("name: " + result[i]['name']) console.log("address: " + result[i]['address']) } else if (result[i]['name'] && !result[i]['address']){ console.log("name: " + result[i]['name']) console.log("address: nil") } else if (!result[i]['name'] && result[i]['address']){ console.log("name: nil") console.log("address: " + result[i]['address']) } else { console.log("Not Found") } console.log() } db.close(); }); });This is our output

(base) ashish@ashishlaptop:~/Desktop/software/node$ node script.js name: Ashish Jain address: Delhi name: nil address: India Not Found name: Ash address: nil

Monday, September 5, 2022

Creating a dummy database and collection in MongoDB Cloud and reading the dummy document using PyMongo

Login to MongoDB Atlas using your Google credentials. And perform the following actions as shown in screenshots below: 1: 2: 3: 4:Tags: Database,CloudNext, we check for "pymongo"

(base) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ python Python 3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0] :: Anaconda, Inc. on linux Type "help", "copyright", "credits" or "license" for more information. >>> import pymongo Traceback (most recent call last): File "<stdin>", line 1, in <module> ModuleNotFoundError: No module named 'pymongo' >>> exit() ENV.YML FILE: name: mongodb channels: - conda-forge dependencies: - pip - pymongoEnvironment Setup

(base) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ conda env create -f env.yml Collecting package metadata (repodata.json): done Solving environment: done ==> WARNING: A newer version of conda exists. <== current version: 4.12.0 latest version: 4.14.0 Please update conda by running $ conda update -n base -c defaults conda Downloading and Extracting Packages openssl-3.0.5 | 2.8 MB | ### | 100% python-3.10.6 | 29.0 MB | ### | 100% setuptools-65.3.0 | 782 KB | ### | 100% pymongo-4.2.0 | 1.3 MB | ### | 100% Preparing transaction: done Verifying transaction: done Executing transaction: done # # To activate this environment, use # # $ conda activate mongodb # # To deactivate an active environment, use # # $ conda deactivate - - - - - - - - - - (base) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ conda activate mongodb (mongodb) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ conda install ipykernel -c conda-forge Collecting package metadata (current_repodata.json): done Solving environment: done ==> WARNING: A newer version of conda exists. <== current version: 4.12.0 latest version: 4.14.0 Please update conda by running $ conda update -n base -c defaults conda ## Package Plan ## environment location: /home/ashish/anaconda3/envs/mongodb added / updated specs: - ipykernel The following packages will be downloaded: package | build ---------------------------|----------------- executing-1.0.0 | pyhd8ed1ab_0 19 KB conda-forge ipykernel-6.15.2 | pyh210e3f2_0 96 KB conda-forge ipython-8.4.0 | pyh41d4057_1 552 KB conda-forge jupyter_client-7.3.5 | pyhd8ed1ab_0 91 KB conda-forge psutil-5.9.2 | py310h5764c6d_0 350 KB conda-forge stack_data-0.5.0 | pyhd8ed1ab_0 24 KB conda-forge ------------------------------------------------------------ Total: 1.1 MB The following NEW packages will be INSTALLED: asttokens conda-forge/noarch::asttokens-2.0.8-pyhd8ed1ab_0 backcall conda-forge/noarch::backcall-0.2.0-pyh9f0ad1d_0 backports conda-forge/noarch::backports-1.0-py_2 backports.functoo~ conda-forge/noarch::backports.functools_lru_cache-1.6.4-pyhd8ed1ab_0 debugpy conda-forge/linux-64::debugpy-1.6.3-py310hd8f1fbe_0 decorator conda-forge/noarch::decorator-5.1.1-pyhd8ed1ab_0 entrypoints conda-forge/noarch::entrypoints-0.4-pyhd8ed1ab_0 executing conda-forge/noarch::executing-1.0.0-pyhd8ed1ab_0 ipykernel conda-forge/noarch::ipykernel-6.15.2-pyh210e3f2_0 ipython conda-forge/noarch::ipython-8.4.0-pyh41d4057_1 jedi conda-forge/noarch::jedi-0.18.1-pyhd8ed1ab_2 jupyter_client conda-forge/noarch::jupyter_client-7.3.5-pyhd8ed1ab_0 jupyter_core conda-forge/linux-64::jupyter_core-4.11.1-py310hff52083_0 libsodium conda-forge/linux-64::libsodium-1.0.18-h36c2ea0_1 matplotlib-inline conda-forge/noarch::matplotlib-inline-0.1.6-pyhd8ed1ab_0 nest-asyncio conda-forge/noarch::nest-asyncio-1.5.5-pyhd8ed1ab_0 packaging conda-forge/noarch::packaging-21.3-pyhd8ed1ab_0 parso conda-forge/noarch::parso-0.8.3-pyhd8ed1ab_0 pexpect conda-forge/noarch::pexpect-4.8.0-pyh9f0ad1d_2 pickleshare conda-forge/noarch::pickleshare-0.7.5-py_1003 prompt-toolkit conda-forge/noarch::prompt-toolkit-3.0.30-pyha770c72_0 psutil conda-forge/linux-64::psutil-5.9.2-py310h5764c6d_0 ptyprocess conda-forge/noarch::ptyprocess-0.7.0-pyhd3deb0d_0 pure_eval conda-forge/noarch::pure_eval-0.2.2-pyhd8ed1ab_0 pygments conda-forge/noarch::pygments-2.13.0-pyhd8ed1ab_0 pyparsing conda-forge/noarch::pyparsing-3.0.9-pyhd8ed1ab_0 python-dateutil conda-forge/noarch::python-dateutil-2.8.2-pyhd8ed1ab_0 pyzmq conda-forge/linux-64::pyzmq-23.2.1-py310h330234f_0 six conda-forge/noarch::six-1.16.0-pyh6c4a22f_0 stack_data conda-forge/noarch::stack_data-0.5.0-pyhd8ed1ab_0 tornado conda-forge/linux-64::tornado-6.2-py310h5764c6d_0 traitlets conda-forge/noarch::traitlets-5.3.0-pyhd8ed1ab_0 wcwidth conda-forge/noarch::wcwidth-0.2.5-pyh9f0ad1d_2 zeromq conda-forge/linux-64::zeromq-4.3.4-h9c3ff4c_1 Proceed ([y]/n)? y Downloading and Extracting Packages stack_data-0.5.0 | 24 KB | ### | 100% jupyter_client-7.3.5 | 91 KB | ### | 100% executing-1.0.0 | 19 KB | ### | 100% ipython-8.4.0 | 552 KB | ### | 100% ipykernel-6.15.2 | 96 KB | ### | 100% psutil-5.9.2 | 350 KB | ### | 100% Preparing transaction: done Verifying transaction: done Executing transaction: done - - - - - - - - - - (mongodb) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ conda install jupyterlab -c conda-forge Collecting package metadata (current_repodata.json): done Solving environment: done ==> WARNING: A newer version of conda exists. <== current version: 4.12.0 latest version: 4.14.0 Please update conda by running $ conda update -n base -c defaults conda ## Package Plan ## environment location: /home/ashish/anaconda3/envs/mongodb added / updated specs: - jupyterlab The following packages will be downloaded: package | build ---------------------------|----------------- anyio-3.6.1 | pyhd8ed1ab_1 83 KB conda-forge babel-2.10.3 | pyhd8ed1ab_0 6.7 MB conda-forge cryptography-37.0.1 | py310h9ce1e76_0 1.5 MB json5-0.9.5 | pyh9f0ad1d_0 20 KB conda-forge jsonschema-4.15.0 | pyhd8ed1ab_0 64 KB conda-forge jupyter_server-1.18.1 | pyhd8ed1ab_0 232 KB conda-forge jupyterlab-3.4.5 | pyhd8ed1ab_0 5.8 MB conda-forge jupyterlab_server-2.15.1 | pyhd8ed1ab_0 49 KB conda-forge nbclassic-0.4.3 | pyhd8ed1ab_0 7.5 MB conda-forge notebook-shim-0.1.0 | pyhd8ed1ab_0 15 KB conda-forge pysocks-1.7.1 | pyha2e5f31_6 19 KB conda-forge requests-2.28.1 | pyhd8ed1ab_1 53 KB conda-forge sniffio-1.3.0 | pyhd8ed1ab_0 14 KB conda-forge websocket-client-1.4.1 | pyhd8ed1ab_0 42 KB conda-forge ------------------------------------------------------------ Total: 22.2 MB The following NEW packages will be INSTALLED: anyio conda-forge/noarch::anyio-3.6.1-pyhd8ed1ab_1 argon2-cffi conda-forge/noarch::argon2-cffi-21.3.0-pyhd8ed1ab_0 argon2-cffi-bindi~ conda-forge/linux-64::argon2-cffi-bindings-21.2.0-py310h5764c6d_2 attrs conda-forge/noarch::attrs-22.1.0-pyh71513ae_1 babel conda-forge/noarch::babel-2.10.3-pyhd8ed1ab_0 beautifulsoup4 conda-forge/noarch::beautifulsoup4-4.11.1-pyha770c72_0 bleach conda-forge/noarch::bleach-5.0.1-pyhd8ed1ab_0 brotlipy conda-forge/linux-64::brotlipy-0.7.0-py310h5764c6d_1004 certifi conda-forge/noarch::certifi-2022.6.15-pyhd8ed1ab_1 cffi conda-forge/linux-64::cffi-1.15.1-py310h255011f_0 charset-normalizer conda-forge/noarch::charset-normalizer-2.1.1-pyhd8ed1ab_0 cryptography pkgs/main/linux-64::cryptography-37.0.1-py310h9ce1e76_0 defusedxml conda-forge/noarch::defusedxml-0.7.1-pyhd8ed1ab_0 flit-core conda-forge/noarch::flit-core-3.7.1-pyhd8ed1ab_0 icu conda-forge/linux-64::icu-70.1-h27087fc_0 idna conda-forge/noarch::idna-3.3-pyhd8ed1ab_0 importlib-metadata conda-forge/linux-64::importlib-metadata-4.11.4-py310hff52083_0 importlib_metadata conda-forge/noarch::importlib_metadata-4.11.4-hd8ed1ab_0 importlib_resourc~ conda-forge/noarch::importlib_resources-5.9.0-pyhd8ed1ab_0 ipython_genutils conda-forge/noarch::ipython_genutils-0.2.0-py_1 jinja2 conda-forge/noarch::jinja2-3.1.2-pyhd8ed1ab_1 json5 conda-forge/noarch::json5-0.9.5-pyh9f0ad1d_0 jsonschema conda-forge/noarch::jsonschema-4.15.0-pyhd8ed1ab_0 jupyter_server conda-forge/noarch::jupyter_server-1.18.1-pyhd8ed1ab_0 jupyterlab conda-forge/noarch::jupyterlab-3.4.5-pyhd8ed1ab_0 jupyterlab_pygmen~ conda-forge/noarch::jupyterlab_pygments-0.2.2-pyhd8ed1ab_0 jupyterlab_server conda-forge/noarch::jupyterlab_server-2.15.1-pyhd8ed1ab_0 libiconv conda-forge/linux-64::libiconv-1.16-h516909a_0 libxml2 conda-forge/linux-64::libxml2-2.9.14-h22db469_4 libxslt conda-forge/linux-64::libxslt-1.1.35-h8affb1d_0 lxml conda-forge/linux-64::lxml-4.9.1-py310h5764c6d_0 markupsafe conda-forge/linux-64::markupsafe-2.1.1-py310h5764c6d_1 mistune conda-forge/noarch::mistune-2.0.4-pyhd8ed1ab_0 nbclassic conda-forge/noarch::nbclassic-0.4.3-pyhd8ed1ab_0 nbclient conda-forge/noarch::nbclient-0.6.7-pyhd8ed1ab_0 nbconvert conda-forge/noarch::nbconvert-7.0.0-pyhd8ed1ab_0 nbconvert-core conda-forge/noarch::nbconvert-core-7.0.0-pyhd8ed1ab_0 nbconvert-pandoc conda-forge/noarch::nbconvert-pandoc-7.0.0-pyhd8ed1ab_0 nbformat conda-forge/noarch::nbformat-5.4.0-pyhd8ed1ab_0 notebook conda-forge/noarch::notebook-6.4.12-pyha770c72_0 notebook-shim conda-forge/noarch::notebook-shim-0.1.0-pyhd8ed1ab_0 pandoc conda-forge/linux-64::pandoc-2.19.2-ha770c72_0 pandocfilters conda-forge/noarch::pandocfilters-1.5.0-pyhd8ed1ab_0 pkgutil-resolve-n~ conda-forge/noarch::pkgutil-resolve-name-1.3.10-pyhd8ed1ab_0 prometheus_client conda-forge/noarch::prometheus_client-0.14.1-pyhd8ed1ab_0 pycparser conda-forge/noarch::pycparser-2.21-pyhd8ed1ab_0 pyopenssl conda-forge/noarch::pyopenssl-22.0.0-pyhd8ed1ab_0 pyrsistent conda-forge/linux-64::pyrsistent-0.18.1-py310h5764c6d_1 pysocks conda-forge/noarch::pysocks-1.7.1-pyha2e5f31_6 python-fastjsonsc~ conda-forge/noarch::python-fastjsonschema-2.16.1-pyhd8ed1ab_0 pytz conda-forge/noarch::pytz-2022.2.1-pyhd8ed1ab_0 requests conda-forge/noarch::requests-2.28.1-pyhd8ed1ab_1 send2trash conda-forge/noarch::send2trash-1.8.0-pyhd8ed1ab_0 sniffio conda-forge/noarch::sniffio-1.3.0-pyhd8ed1ab_0 soupsieve conda-forge/noarch::soupsieve-2.3.2.post1-pyhd8ed1ab_0 terminado conda-forge/linux-64::terminado-0.15.0-py310hff52083_0 tinycss2 conda-forge/noarch::tinycss2-1.1.1-pyhd8ed1ab_0 typing_extensions conda-forge/noarch::typing_extensions-4.3.0-pyha770c72_0 urllib3 conda-forge/noarch::urllib3-1.26.11-pyhd8ed1ab_0 webencodings conda-forge/noarch::webencodings-0.5.1-py_1 websocket-client conda-forge/noarch::websocket-client-1.4.1-pyhd8ed1ab_0 zipp conda-forge/noarch::zipp-3.8.1-pyhd8ed1ab_0 Proceed ([y]/n)? y Downloading and Extracting Packages websocket-client-1.4 | 42 KB | ### | 100% nbclassic-0.4.3 | 7.5 MB | ### | 100% anyio-3.6.1 | 83 KB | ### | 100% sniffio-1.3.0 | 14 KB | ### | 100% pysocks-1.7.1 | 19 KB | ### | 100% babel-2.10.3 | 6.7 MB | ### | 100% notebook-shim-0.1.0 | 15 KB | ### | 100% jupyterlab_server-2. | 49 KB | ### | 100% json5-0.9.5 | 20 KB | ### | 100% jupyterlab-3.4.5 | 5.8 MB | ### | 100% jsonschema-4.15.0 | 64 KB | ### | 100% jupyter_server-1.18. | 232 KB | ### | 100% requests-2.28.1 | 53 KB | ### | 100% cryptography-37.0.1 | 1.5 MB | ### | 100% Preparing transaction: done Verifying transaction: done Executing transaction: done (mongodb) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$Installing kernel for Jupyter Lab

(mongodb) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ python -m ipykernel install --user --name mongodb Installed kernelspec mongodb in /home/ashish/.local/share/jupyter/kernels/mongodb (mongodb) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$Testing Python Environment

import pymongo client = pymongo.MongoClient("mongodb+srv://$USERNAME:$PASSWORD@abc.xyz.mongodb.net/?retryWrites=true&w=majority") db = client.test --------------------------------------------------------------------------- ConfigurationError Traceback (most recent call last) Input In [1], in <cell line: 2>() 1 import pymongo ----> 2 client = pymongo.MongoClient("mongodb+srv://$USERNAME:$PASSWORD@abc.xyz.mongodb.net/?retryWrites=true&w=majority") 3 db = client.test File ~/anaconda3/envs/mongodb/lib/python3.10/site-packages/pymongo/mongo_client.py:726, in MongoClient.__init__(self, host, port, document_class, tz_aware, connect, type_registry, **kwargs) 722 if timeout is not None: 723 timeout = common.validate_timeout_or_none_or_zero( 724 keyword_opts.cased_key("connecttimeoutms"), timeout 725 ) --> 726 res = uri_parser.parse_uri( 727 entity, 728 port, 729 validate=True, 730 warn=True, 731 normalize=False, 732 connect_timeout=timeout, 733 srv_service_name=srv_service_name, 734 srv_max_hosts=srv_max_hosts, 735 ) 736 seeds.update(res["nodelist"]) 737 username = res["username"] or username File ~/anaconda3/envs/mongodb/lib/python3.10/site-packages/pymongo/uri_parser.py:469, in parse_uri(uri, default_port, validate, warn, normalize, connect_timeout, srv_service_name, srv_max_hosts) 467 if not _HAVE_DNSPYTHON: 468 python_path = sys.executable or "python" --> 469 raise ConfigurationError( 470 'The "dnspython" module must be ' 471 "installed to use mongodb+srv:// URIs. " 472 "To fix this error install pymongo with the srv extra:\n " 473 '%s -m pip install "pymongo[srv]"' % (python_path) 474 ) 475 is_srv = True 476 scheme_free = uri[SRV_SCHEME_LEN:] ConfigurationError: The "dnspython" module must be installed to use mongodb+srv:// URIs. To fix this error install pymongo with the srv extra: /home/ashish/anaconda3/envs/mongodb/bin/python -m pip install "pymongo[srv]"Fix:

(base) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ conda activate mongodb (mongodb) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ python -m pip install "pymongo[srv]" Requirement already satisfied: pymongo[srv] in /home/ashish/anaconda3/envs/mongodb/lib/python3.10/site-packages (4.2.0) Collecting dnspython<3.0.0,>=1.16.0 Downloading dnspython-2.2.1-py3-none-any.whl (269 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 269.1/269.1 kB 123.6 kB/s eta 0:00:00 Installing collected packages: dnspython Successfully installed dnspython-2.2.1 (mongodb) ashish@ashish-Lenovo-ideapad-130-15IKB:~/Desktop$ISSUE WITH SPECIAL CHARACTERS IN PASSWORD

import pymongo client = pymongo.MongoClient("mongodb+srv://$USERNAME:$PASSWORD@abc.xyz.mongodb.net/?retryWrites=true&w=majority") db = client.test --------------------------------------------------------------------------- InvalidURI Traceback (most recent call last) Input In [1], in <cell line: 2>() 1 import pymongo ----> 2 client = pymongo.MongoClient("mongodb+srv://$USERNAME:$PASSWORD@abc.xyz.mongodb.net/?retryWrites=true&w=majority") 3 db = client.test File ~/anaconda3/envs/mongodb/lib/python3.10/site-packages/pymongo/mongo_client.py:726, in MongoClient.__init__(self, host, port, document_class, tz_aware, connect, type_registry, **kwargs) 722 if timeout is not None: 723 timeout = common.validate_timeout_or_none_or_zero( 724 keyword_opts.cased_key("connecttimeoutms"), timeout 725 ) --> 726 res = uri_parser.parse_uri( 727 entity, 728 port, 729 validate=True, 730 warn=True, 731 normalize=False, 732 connect_timeout=timeout, 733 srv_service_name=srv_service_name, 734 srv_max_hosts=srv_max_hosts, 735 ) 736 seeds.update(res["nodelist"]) 737 username = res["username"] or username File ~/anaconda3/envs/mongodb/lib/python3.10/site-packages/pymongo/uri_parser.py:516, in parse_uri(uri, default_port, validate, warn, normalize, connect_timeout, srv_service_name, srv_max_hosts) 514 if "@" in host_part: 515 userinfo, _, hosts = host_part.rpartition("@") --> 516 user, passwd = parse_userinfo(userinfo) 517 else: 518 hosts = host_part File ~/anaconda3/envs/mongodb/lib/python3.10/site-packages/pymongo/uri_parser.py:71, in parse_userinfo(userinfo) 60 """Validates the format of user information in a MongoDB URI. 61 Reserved characters that are gen-delimiters (":", "/", "?", "#", "[", 62 "]", "@") as per RFC 3986 must be escaped. (...) 68 - `userinfo`: A string of the form <username>:<password> 69 """ 70 if "@" in userinfo or userinfo.count(":") > 1 or _unquoted_percent(userinfo): ---> 71 raise InvalidURI( 72 "Username and password must be escaped according to " 73 "RFC 3986, use urllib.parse.quote_plus" 74 ) 76 user, _, passwd = userinfo.partition(":") 77 # No password is expected with GSSAPI authentication. InvalidURI: Username and password must be escaped according to RFC 3986, use urllib.parse.quote_plusAll Issues Resolved. See Successful Code Run Below:

import pymongo import urllib uri = "mongodb+srv://$USERNAME:" + urllib.parse.quote_plus("$PASSWORD") + "@abc.xyz.mongodb.net/?retryWrites=true&w=majority" client = pymongo.MongoClient(uri) print(client) MongoClient(host=['ac-af4wahu-shard-00-02.xyz.mongodb.net:27017', 'ac-af4wahu-shard-00-01.xyz.mongodb.net:27017', 'ac-af4wahu-shard-00-00.xyz.mongodb.net:27017'], document_class=dict, tz_aware=False, connect=True, retrywrites=True, w='majority', authsource='admin', replicaset='atlas-3xaqbc-shard-0', tls=True) db = client['db1'] collection = db['ccn1'] print(collection.count_documents({})) # Output: 1 print(collection.find({})) <pymongo.cursor.Cursor at 0x7fd632c38850> import pprint for doc in collection.find(): pprint.pprint(doc) {'_id': ObjectId('6315c3a74afb509774a88467'), 'address': 'Delhi', 'name': 'Ashish Jain'}

Saturday, February 5, 2022

Create Table Statement and Constraints Assignment

Table Creation and Data insertion: Create following Tables with required constraints (as mentioned) using SQL DDL.

Table: Student

| sid

(int) Primary Key |

sname

Varying Char (10) |

sbranch

Char(5) |

sage

(int) (not greater than 25) |

| 1001 | Kamal | IT | 18 |

| 1004 | Yogesh | IT | 17 |

| 1011 | Mani |

CSE

|

20

|

|

1022

|

Farooq

|

EEE

|

21 |

|

1027

|

Girija

|

CSE |

24

|

|

1030

|

Mousmi

|

CSE

|

22

|

|

1036

|

Rajanish

|

EEE

|

21

|

|

1052

|

Praneet

|

EEE

|

21

|

|

1059

|

Joseph

|

CSE | 17 |

| 1063 | Lahiri | IT | 21 |

Table: Course

|

cid

(int) PK |

Cname

(chars 4 and unique) |

units

(int) |

| 10 | OS | 3 |

| 20 | DBMS | 4 |

| 30 | CNW | 5 |

| 40 | PHY | 3 |

Table: StudentCourse

|

stid

PK1 and FK to sid of student |

cno

PK2 and FK to cid of course |

| 1001 | 20 |

| 1001 | 30 |

| 1004 | 10 |

|

1004

|

20

|

|

1004

|

30

|

|

1004

|

40

|

|

1027

|

20

|

|

1027

|

30 |

| 1036 | 10 |

| 1036 | 30 |

| 1036 | 20 |

| 1059 | 10 |

| 1063 | 20 |

Solution

Tags: Database,Technology,DDL: Data Definition Language

- create - alter - dropDML: Data Manipulation Language

- select - insert - update - deleteMicrosoft SQL Server

How to add a "Check Constraint" on a column:

CREATE TABLE CountryList ( Id INT IDENTITY PRIMARY KEY, CountryName VARCHAR(255) NOT NULL, CountryPopulation INT CHECK(CountryPopulation > 0) ) ALTER TABLE student ADD CONSTRAINT CheckSage CHECK (sage > 25);Adding a "Foreign Key"

CREATE TABLE Orders ( OrderID int NOT NULL, OrderNumber int NOT NULL, PersonID int, PRIMARY KEY (OrderID), FOREIGN KEY (PersonID) REFERENCES Persons(PersonID) );Creating a 'Composite Primary Key'

Create table StudentCourse ( stid integer FOREIGN Key REFERENCES Student (sid) , cno integer Foreign Key References Course (cid), PRIMARY KEY (stid, cno) );A mistake that students make...

Create table StudentCourse ( stid integer Primary Key FOREIGN Key REFERENCES Student (sid), cno integer PRIMARY key Foreign Key References Course (cid) ); Msg 8110, Level 16, State 0, Line 1 Cannot add multiple PRIMARY KEY constraints to table 'StudentCourse'.Oracle DB

SQL> create table student_ashish_12345 (sid int primary key, sname varchar2(10), sbranch char(5), sage int constraint stud_sage_ck check (sage <= 25)); SQL> create table course_ashish_12345 (cid int primary key, cname char(4) unique, units int); SQL> create table studentcourse_ashish_12345 (stid int, cno int, constraint sc_pk primary key(stid, cno), constraint sc_fk1 foreign key (stid) references bits_student (sid), constraint sc_fk2 foreign key (cno) references bits_course (cid)); SQL> select table_name from tabs where table_name like '%ASHISH_12345';

Tuesday, January 4, 2022

Wednesday, December 22, 2021

Basics of Database Management System (by Yaju Gupta)

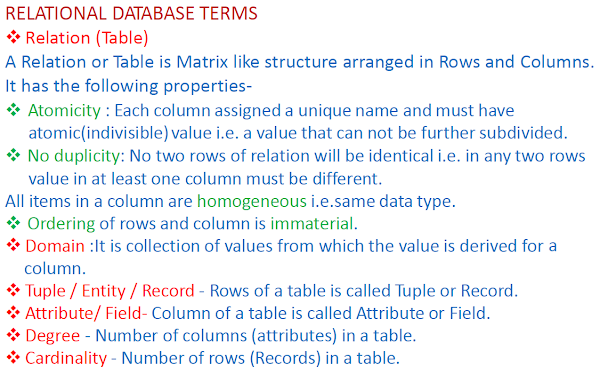

Tags: Technology,Database,Contents

1. Data and Database 2. Database Schema and Instance of DB 3. Data View & Data Independence 4. Data base Management System 5. Purpose of Designing DBMS 6. Evolution of DBMS 7. Database Languages 8. Database User's & Its Administration 9. Structure of Database System and 10. Functionality of Various Components ~~~Data & Database :

Data : Collection of Facts and Statistics together regarding an Object or Case or Scenario. Information : Meaningful data. Database : A place of storing or maintaining data. Statistics : Statistical process output, that deal with finding new things/facts from existing one based on analyzing collections, organizations and presentations.Database Schema & Instance

DB Schema : Overall structure of database. Defined using Data Definition Language by Database Administrator . Instance of DB : Database state at a particular time. That can change with time. For Example :- Medical History database. ~~~Data View : Look and structure at different levels

Physical Level : How it is stored in file on Hard disk (Sequence of Blocks / Bytes). Logical Level : Overall logical structure of database, defined by administrator using Data Definition Language (DDL). View or User Level : The format in which user access the data , only of their concern. In terms of Records / Objects / View. Data Independence : Changes in the schema of database at any level , doesn't affect on the schema of any other level ~~~Database Management System

Collection of Inter-related data and set of programs which are used to access these data. Database is a part of DBMS.Purpose of Designing DBMS

To maintain a Information System for an enterprise . To provide secure and efficient access and management of data, and To provide end user with abstract view of data. In other word they don't need to know how to access data and how it is stored internally. ~~~Evolution of DBMS

To overcome the problems that occurs while maintaining information System (Database) of an Enterprise in terms of collection of Files (File Processing System). Disadvantages of using File Processing System : Data Access Problem Data Isolation Problem Data Redundancy & Inconsistency Problem Data Atomicity (Transaction Management) Problem Concurrent Access Anomalies Security Implementation Problem and Data Integrity Maintenance Problem (Domain, Entity, Validity and Referential Integrity) File Processing System : In FPS all the data related to an organization / enterprise are stored in different files based on format of data. To access different types of data from different files, there is a need of separate data access programs. As the size of data increases (In parallel additional data access programs are required) their management becomes difficult. ~~~ Database Languages : The languages which are used to access and manipulate data of database ( Database Interaction languages ) For Example : SQL ( Structured Query Language ), QUEL, Data log and QBE (Query by Example)Different Types of Database Languages

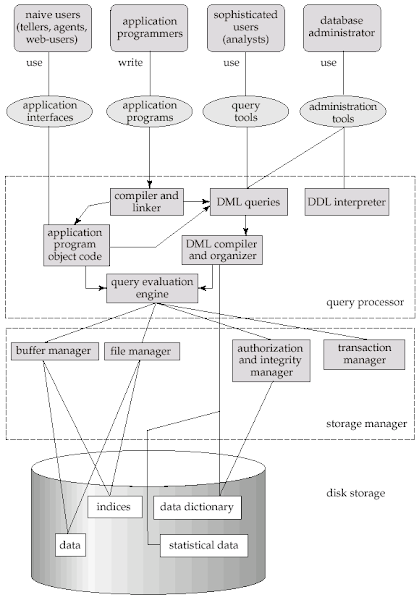

Query Lang. : Used to retrieve information from database Data Definition Lang. : Used to define logical structure of database (DDL) Data Manipulation Lang. : Used to update data in database (DML: Procedural and Non Procedural) Data Control Lang. : Used for Transaction Management (DCL) ~~~ Database User's : Based on the way of interaction with database, all users are classified into following four categories: Application User : Through an Application Sophisticated User : Direct using Query Language Naive User : Through Predefined Application Database Administrator : Through DDL Database Administration : To control the access of data and the programs to access data by different type of users. Functions of DB Administrator : Define & Change DB Logical Structure Describe & Change Storage structure of data Creation of Data access programs Granting authorization to user to access data ~~~Structure of DBMS

~~~Components of DBMS

Query Processor Components Storage Manager Components ( Operating System Functionality ) Disk Storage ( Where actual data and data access programs are stored ) Query Processor Components : DML Compiler , Embedded Pre DML Compiler , DDL Interpreter and Query Engine . Storage Manager Components : Buffer Manager , File Manager , Transaction Manager and Authorization and Integrity Manager . Disk Storage Contents : Data , Indices , Data Dictionary and Statistical Data . ~~~Functionality of Various Components :

Query Processor Components : Embedded Pre DML Compiler : To compile DML statements in Host Language Procedure Call . DML Compiler : To break a DML statement into small lower level instructions to be executed by Query Engine. DDL Interpreter : To break DDL statements into small lower level instructions . Query Engine : It execute lower level instructions by interacting on with various storage manager components depending on the task to be performed . Storage Manager Components : File Manager : Used to allocate space and define the structure of data to be stored on disk. Buffer Manager : Used to transfer data in between main memory and disk and decide data to be placed on Cache. Transaction Manager : To ensure proper execution of a Transaction ( Atomicity, Consistency, Isolation and Durability ) Authorization & Integrity Manager : To ensure correctness and completeness of data. And also to check that it can be accessed only by authorized user's. Disk Storage Contents : Data : Collection of Facts related to an Enterprise / Organization . Statistical Data : New data learned from existing data through statistical process ( Analysis of Collections , Organizations and Presentation of existing data sets ) . Used by DML Compiler for Query Optimization . Indices : Maintain for fast access of data, or to make search fast for required data . Data Dictionary : Hold the overall logical structure of database which is defined by database administrator using DDL . Credits: Yajuvendra Gupta

Sunday, June 14, 2020

Taking table data from Excel to SQL based database

It is a common usecase when we have receive data in Excel sheet (a .XLS or .XLSX file) and we have to take it to an RDBMS such as Oracle Database or PostGRE SQL database. One way to do this transfer of data is: we first convert table data into SQL queries and then run those queries in the SQL interface of the database. This is what our Excel sheet looks like: In the cell [Row 2, Column 7], we insert the following function call to "CONCATENATE": =CONCATENATE("insert into employees values(",RC[-6],",'",RC[-5],"','",RC[-4],"',to_date('",RC[-3],"','MM/DD/YYYY'),'",RC[-2],"','",RC[-1],"');") This fills our cell [Row 2, Column 7] with an 'INSERT' SQL statement. insert into employees values(1 ,'Davolio ','Nancy ',to_date('12/8/1968 ','MM/DD/YYYY'),'EmpID1.pic ','Education includes a BA in psychology from Colorado State University. She also completed (The Art of the Cold Call). Nancy is a member of 'Toastmasters International'. '); Copying this in the entire 'column 7' produces the SQL statements we want. Before running the INSERT statements, we need to have the table "employees" in our database. The 'CREATE TABLE' statement for the "employees" table is: create table employees (EmployeeID number(5) primary key, LastName varchar2(80), FirstName varchar2(80), BirthDate date, Photo varchar2(80), Notes varchar2(4000)); Writing this 'CREATE TABLE' statement requires that we know the datatypes we want for our columns. Now we can populate our RDBMS database using the generated 'INSERT' statements. The Excel file used for this demo can be found here: Google Drive

Subscribe to:

Posts (Atom)